Updated May 2021. This research builds on the first edition of this article, which was published in February 2020.

Serverless has gained traction among organizations of all sizes, from cloud-native startups to large enterprises. With serverless, teams can focus on bringing ideas to the market faster—rather than managing infrastructure—all while paying for only what they use. In this report, we examined millions of functions run by thousands of companies to understand how serverless is being used in the real world.

From short-running tasks to user-facing applications, serverless powers a wide range of use cases. AWS Lambda is the most mature and widely used function-as-a-service (FaaS) offering, but we also see impressive growth in adoption of Azure Functions and Google Cloud Functions. Today, the serverless ecosystem has grown beyond FaaS to include dozens of services that help developers build faster, more dynamic applications. A quarter of Amazon CloudFront users have embraced serverless edge computing and organizations are also leveraging AWS Step Functions to manage application logic across various distributed components.

Read on for more insights and trends from the serverless landscape.

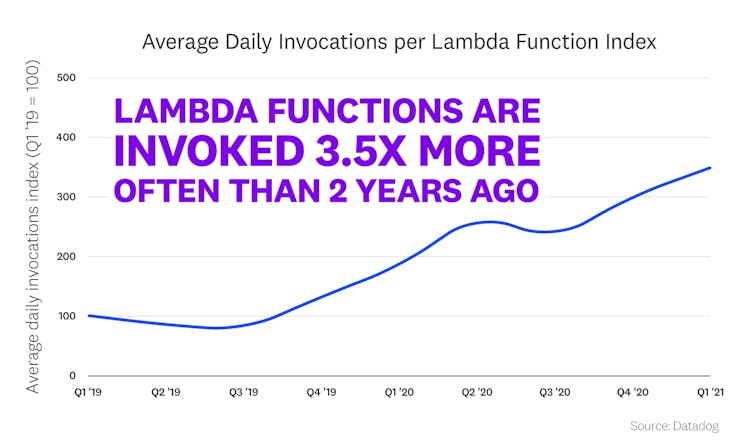

Lambda functions are invoked 3.5 times more often than two years ago

AWS Lambda enables developers to innovate faster by building highly scalable applications without worrying about infrastructure. Today, teams are not merely experimenting with serverless, but making it a critical part of their software stacks. Indeed, our research indicates that companies that have been using Lambda since 2019 have significantly ramped up their usage. On average, functions were invoked 3.5 times more often per day at the start of 2021 than they were two years prior. Additionally, within the same cohort of Lambda users, each organization's functions ran for a total of 900 hours a day, on average.

“Datadog’s 2021 The State of Serverless report highlights developers accelerating their adoption of serverless architectures to address new, more advanced business challenges. We’re excited to see organizations benefit from the agility, elasticity, and cost efficiency of adopting serverless technologies like AWS Lambda, and are here to support this growing, diverse developer community.”

—Ajay Nair, GM of Lambda Experience, Amazon Web Services

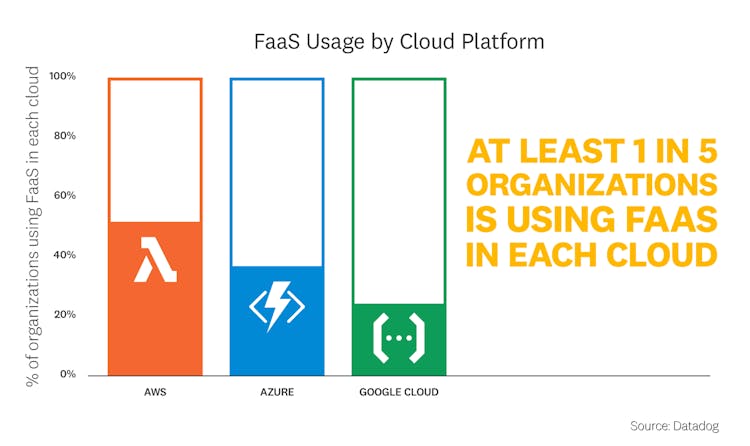

Azure Functions and Google Cloud Functions are gaining momentum

AWS Lambda may have kickstarted the serverless movement, but it is not the only game in town. Azure Functions and Google Cloud Functions have both seen growth in adoption within their respective cloud platforms. In the past year, the share of Azure organizations running Azure Functions climbed from 20 to 36 percent. And on Google Cloud, nearly a quarter of organizations now use Cloud Functions. Although Cloud Functions was the last of the three FaaS offerings to launch, serverless is not a new concept in Google Cloud—the cloud platform introduced Google App Engine, its first fully serverless compute service, back in 2008. But today, we see momentum shifting toward Google's newer serverless offerings, namely Cloud Functions and Cloud Run.

“Regardless of the framework, language, or cloud you’re using, serverless can help you build and iterate with ease. Two years ago, Next.js introduced first-class support for serverless functions, which helps power dynamic Server-Side Rendering (SSR) and API Routes. Since then, we’ve seen incredible growth in serverless adoption among Vercel users, with invocations going from 262 million a month to 7.4 billion a month, a 28x increase.”

—Guillermo Rauch, Vercel CEO and Next.js co-creator

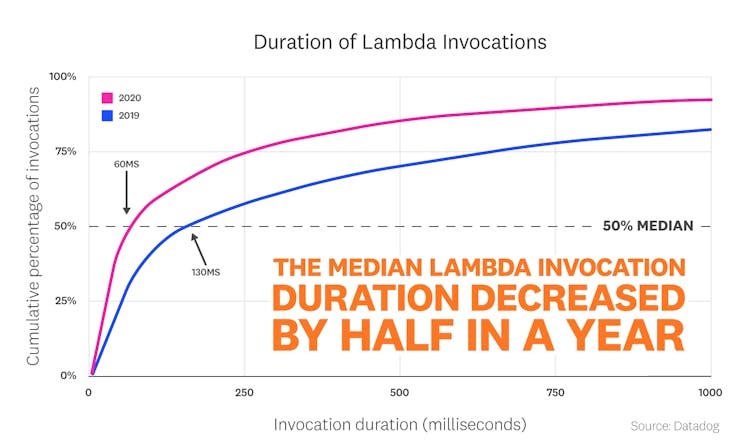

Lambda invocations are much shorter today than a year ago

Lambda is increasingly used to power customer-facing applications that require low latency. In 2020, the median Lambda invocation took just 60 milliseconds—about half of the previous year's value. One possible explanation is that more organizations are following Lambda best practices and designing functions to be highly specific to their workloads, which helps reduce the duration of invocations. We also noted that the tail of the latency distribution is long, which suggests that Lambda is not just powering short-lived jobs, but also more computationally intensive use cases.

Step Functions power everything from web applications to data pipelines

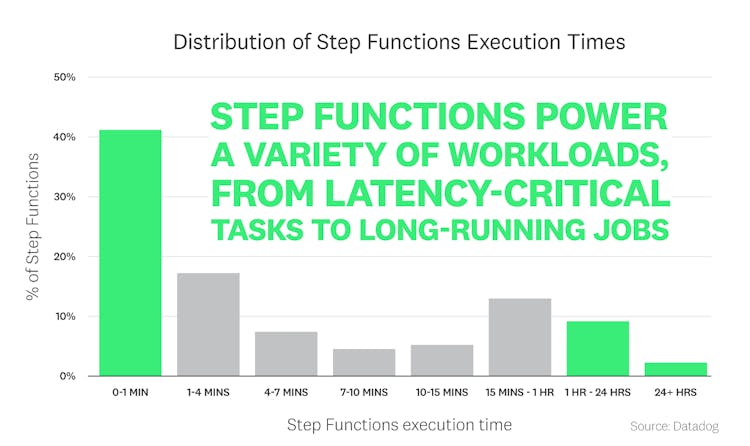

AWS Step Functions enables developers to build event-driven workflows that involve multiple Lambda functions and AWS services. Within these workflows, Step Functions coordinates error handling, retries, timeouts, and other application logic, which helps reduce operational complexity as serverless applications scale. Our research indicates that the average Step Functions workflow contains 4 Lambda functions—and we see this number growing month over month.

Step Functions offers two types of workflows: Standard and Express. We noted that over 40 percent of workflows execute in under a minute, indicating that organizations are likely using Express Workflows to support high-volume event processing workloads. But while many workflows execute quickly, others run for over a day. In fact, the longest Step Functions workflows run for over a week. Step Functions workflows can include activity workers that run on Amazon ECS or EC2 instances, for example, which means that they are able to execute longer than the Lambda function timeout of 15 minutes. This allows Step Functions to support a vast array of use cases, from latency-critical tasks like web request handling to complex, long-running ones like big data processing jobs.

“We use AWS Step Functions extensively throughout our fully serverless architecture. It allows us to design and execute reliable workflows that process a high volume of transactions on our B2B trading platform, while reducing our operational complexity.”

—Zack Kanter, CEO, Stedi

1 in 4 CloudFront users has embraced serverless edge computing

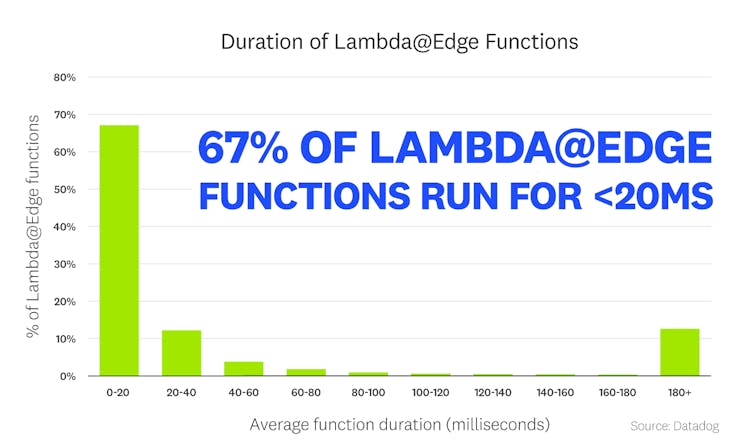

Edge computing has generated quite a lot of buzz for its promise of faster data processing. Today, a quarter of organizations that use Amazon CloudFront are taking advantage of Lambda@Edge to deliver more personalized experiences for their global user base. For instance, Lambda@Edge can dynamically transform images based on user characteristics (e.g., device type) or serve different versions of a web application for A/B testing.
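As a rough illustration of the device-type case, a Lambda@Edge function attached to a CloudFront origin-request trigger could rewrite the request path for mobile viewers. The sketch below assumes that CloudFront is configured to forward the `CloudFront-Is-Mobile-Viewer` header to the function. The `/mobile` prefix is a hypothetical path layout chosen for the example, not something drawn from our data.

```python
def handler(event, context):
    """Origin-request handler: route mobile viewers to a mobile-specific path."""
    # CloudFront passes the request under Records[0].cf.request.
    request = event["Records"][0]["cf"]["request"]
    headers = request.get("headers", {})

    # Header names are lowercased keys; each maps to a list of {key, value} dicts.
    mobile_header = headers.get("cloudfront-is-mobile-viewer", [{}])
    is_mobile = mobile_header[0].get("value") == "true"

    # "/mobile" is a hypothetical prefix used only for illustration.
    if is_mobile and not request["uri"].startswith("/mobile/"):
        request["uri"] = "/mobile" + request["uri"]

    # Returning the (possibly modified) request lets CloudFront continue to the origin.
    return request
```

Because the function only rewrites the URI and returns the request, the origin can keep serving static variants, and the per-request work at the edge stays small.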

By leveraging CloudFront's network of edge locations, Lambda@Edge lets organizations execute functions closer to their end users, without the complexity of setting up and managing origin servers. Our data shows that 67 percent of Lambda@Edge functions run in under 20 milliseconds, which suggests that serverless edge computing has enormous potential to support even the most latency-critical applications with minimal overhead. As this technology matures, we expect to see more organizations relying on it to improve their end-user experience.

“Developers are increasingly moving parts of their applications to the edge. The ability to dynamically fetch and modify data from the CDN edge means you can deliver faster end-user experiences. Lambda@Edge made this possible in 2017, and now CloudFront Functions makes it simpler and more cost-effective for developers to run full JavaScript applications closer to their customers.”

—Farrah Campbell, Sr. Product Marketing Manager of Modern Applications, Amazon Web Services

Organizations are overspending on Provisioned Concurrency for a majority of functions

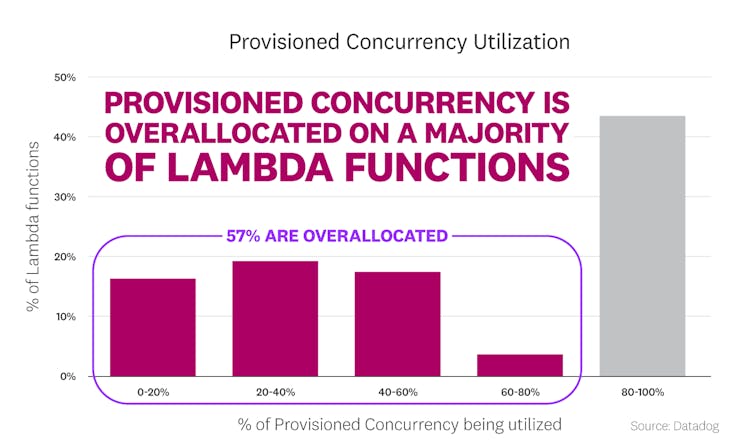

When a Lambda function is invoked after a period of inactivity, it experiences a brief delay in execution, known as a cold start. For applications that require millisecond-level response times, cold starts can be a non-starter. At the end of 2019, AWS introduced Provisioned Concurrency to help Lambda users combat cold starts by keeping execution environments initialized and ready to respond to requests.

Based on our data, it appears that configuring the optimal amount of Provisioned Concurrency for Lambda functions remains a challenge for users. Over half of functions use less than 80 percent of their configured Provisioned Concurrency. Meanwhile, over 40 percent of functions use their entire allocation, which means that they may still encounter cold starts and would benefit from even more concurrency. Application Auto Scaling offers one way to work around these issues by allowing users to automatically scale Provisioned Concurrency based on utilization.
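A minimal sketch of the Application Auto Scaling approach builds the two requests involved: one that registers a function alias as a scalable target, and one that attaches a target tracking policy to it. The function name, alias, capacity bounds, policy name, and the 70 percent utilization target are all placeholder assumptions. In practice, the two dictionaries would be passed to `register_scalable_target` and `put_scaling_policy` on a boto3 `application-autoscaling` client, as the trailing comments suggest.

```python
def provisioned_concurrency_scaling(function_name, alias, min_capacity,
                                    max_capacity, target_utilization=0.7):
    """Build request parameters for scaling Provisioned Concurrency on an alias."""
    # Application Auto Scaling addresses a Lambda alias as "function:<name>:<alias>".
    resource_id = f"function:{function_name}:{alias}"
    dimension = "lambda:function:ProvisionedConcurrency"

    scalable_target = {
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }
    scaling_policy = {
        # The policy name is an arbitrary label chosen for this example.
        "PolicyName": f"{function_name}-{alias}-pc-utilization",
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Scale so that average utilization stays near this fraction.
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization"
            },
        },
    }
    return scalable_target, scaling_policy


# Hypothetical usage (requires boto3 and AWS credentials, so it is left commented out):
# import boto3
# client = boto3.client("application-autoscaling")
# target, policy = provisioned_concurrency_scaling("checkout", "live", 1, 50)
# client.register_scalable_target(**target)
# client.put_scaling_policy(**policy)
```

A lower utilization target leaves more headroom against cold starts at a higher cost, while a higher target trims spend but makes it more likely that a burst exhausts the allocation.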

We also see that Provisioned Concurrency is used more often with Java and .NET Core functions, which typically have slower startup times than Python or Node.js, due to the inherent nature of those runtimes. Java, for instance, needs to initialize its virtual machine (JVM) and load a wide range of classes into memory before it can execute user code.

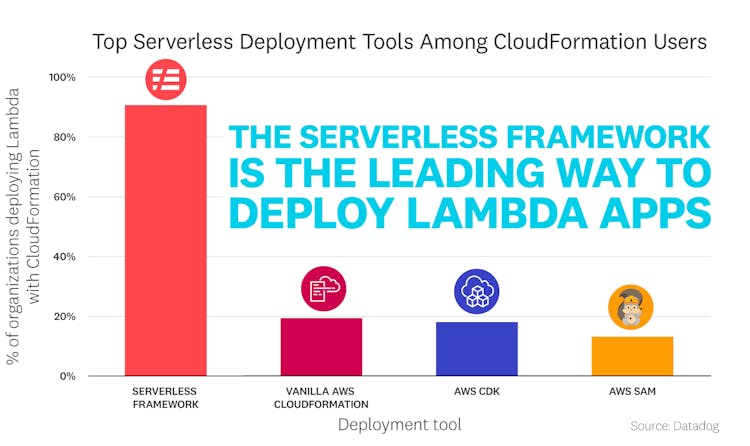

The Serverless Framework is the leading way to deploy Lambda applications with AWS CloudFormation

As serverless applications scale, manually deploying Lambda functions and other resources can quickly become cumbersome. AWS CloudFormation lets developers provision AWS infrastructure and third-party resources in collections (known as stacks), and is the underlying deployment mechanism for frameworks like AWS Cloud Development Kit (CDK), AWS Serverless Application Model (SAM), and the Serverless Framework.

Among these tools, the open source Serverless Framework is by far the most popular—today, it is used by over 90 percent of organizations that manage their serverless resources with AWS CloudFormation. Aside from the Serverless Framework, 19 percent of organizations use vanilla CloudFormation, 18 percent use AWS CDK, and 13 percent use AWS SAM. Note that since each organization may use multiple deployment tools, these values add up to more than 100 percent.

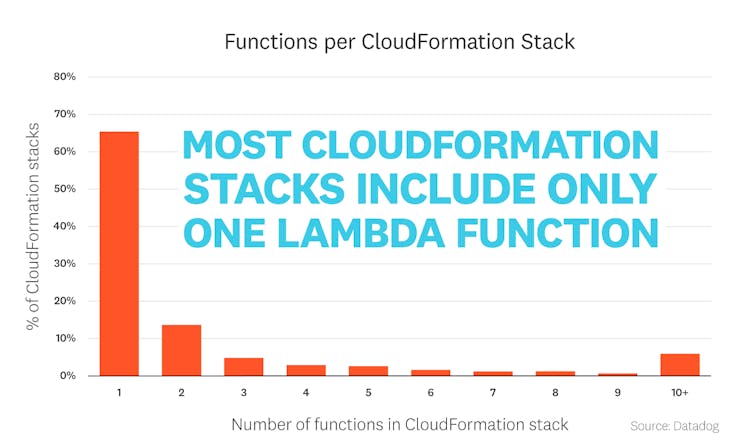

Among the CloudFormation stacks used in serverless applications, 65 percent contain only one Lambda function. Furthermore, over half of all functions—57 percent—are not deployed with CloudFormation. This suggests that many organizations are still in the early stages of automating and optimizing their serverless workflows with infrastructure as code. But just as orchestrators like Kubernetes and Amazon Elastic Container Service (ECS) have become essential for managing large fleets of containers, we expect to see infrastructure-as-code tools take on a more critical role in deploying serverless applications at scale.

“With developers and enterprises building more advanced applications that leverage serverless technologies, they need more powerful tools to help them reliably compose, test, deploy, and manage their services. This was the catalyst for open source infrastructure-as-code projects like the Serverless Framework and AWS CDK. The Serverless Framework alone saw downloads grow from 12 million in 2019 to 25 million in 2020, so we expect to see accelerating adoption and sophistication of these tools as developers continue to build more with serverless.”

—Jeremy Daly, GM of Serverless Cloud, Serverless Inc.

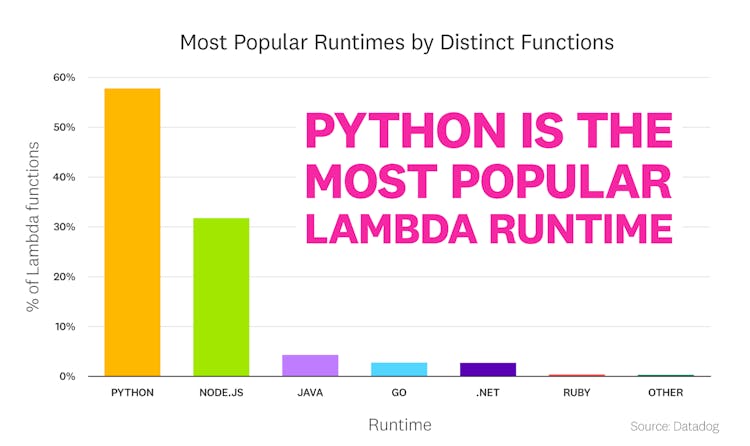

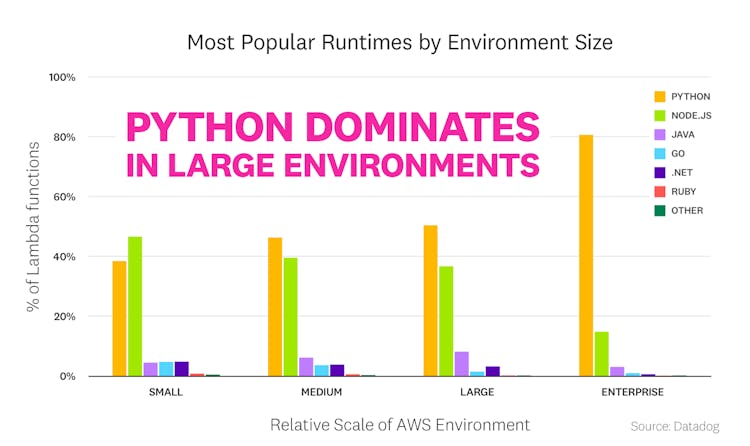

Python is the most popular Lambda runtime, especially in large environments

Since 2018, Lambda has offered support for six runtimes: Node.js, Python, Java, Go, .NET Core, and Ruby. However, Python and Node.js continue to dominate among Lambda users, accounting for nearly 90 percent of functions. Fifty-eight percent of all deployed Lambda functions run Python (up 11 points from a year ago), with another 31 percent running Node.js (an 8-point dip compared to last year).

When we examined the breakdown of runtime usage by environment size, an interesting trend emerged: while Node.js edges out Python in small AWS environments, Python becomes increasingly popular as the size of the environment grows. Among organizations with the largest AWS footprints, Python is used four times more often than Node.js.

As of March 2021, the top runtimes by version are:

- Python 3.x

- Node.js 12

- Node.js 10

- Python 2.7

- Java 8

- Go 1.x

- .NET Core 2.1

- .NET Core 3.1

Among functions written in Python, over 90 percent use Python 3, with Python 3.8 being the most popular version. Python 2.7 is down 25 percentage points from a year ago, as users increasingly migrate to Python 3. AWS has announced plans to drop support for Node.js 10 in May 2021, so we expect to see more usage of Node.js 12 as well as the newly supported Node.js 14. Java 8 is five times more popular than Java 11 among Lambda users, though support for the latter has been available since late 2019.

METHODOLOGY

Population

For this report, we compiled usage data from thousands of companies in Datadog's customer base. But while Datadog customers cover the spectrum of company size and industry, they do share some common traits. First, they tend to be serious about software infrastructure and application performance. And they skew toward adoption of cloud platforms and services more than the general population. All the results in this article are biased by the fact that the data comes from our customer base, a large but imperfect sample of the entire global market.

FaaS adoption

In this report, we consider a company to have adopted AWS Lambda, Azure Functions, or Google Cloud Functions if they ran at least five distinct functions in a given month. The Datadog Forwarder function, which ships data such as S3 and CloudWatch logs to Datadog, was excluded from the function count.

Cloud provider usage

We consider a company to be using a cloud provider (i.e., AWS, Google Cloud, or Microsoft Azure) if they ran at least five distinct serverless functions or five distinct virtual machines (VMs) in a given month. In this way, we can capture a cloud provider user base comprising companies that are exclusively running VMs, exclusively running serverless functions, or running a mix of both.

Scale of environments

To estimate the relative scale of a company's infrastructure environment, we examine the company's usage of serverless functions, containers, physical servers, cloud instances, and other infrastructure services. Although the boundary between categories (such as “medium” and “large”) is necessarily artificial, the trend across categories is clear.

Fact #1

To analyze trends in long-term Lambda usage, we limited our investigation to organizations that have been using Lambda since the start of 2019. For this cohort of organizations, we randomly sampled usage data and computed the average daily invocations per function for each quarter from 2019 to the start of 2021. We then plotted an index chart by making the first quarter of 2019 the base quarter, setting its index to 100, and normalizing the values for each subsequent quarter to this base index.
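The indexing step can be sketched in a few lines. The function name and the quarterly values below are invented for illustration and are not taken from our dataset. The only assumption is that the first element of the series is the base quarter.

```python
def index_to_base(quarterly_values, base_index=100.0):
    """Normalize a time series so that its first value equals base_index."""
    base_value = quarterly_values[0]
    if base_value == 0:
        raise ValueError("base quarter must be non-zero to compute an index")
    # Each quarter is expressed relative to the base quarter.
    return [base_index * value / base_value for value in quarterly_values]


# Invented average daily invocations per function for three quarters.
example = [200, 300, 700]
print(index_to_base(example))  # [100.0, 150.0, 350.0]
```

Read this way, an index of 350 means that the quarter's value is 3.5 times the value in the base quarter.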

Fact #6

To analyze the under/overallocation of Provisioned Concurrency for each function, we computed the average utilization across randomly sampled days in 2020 and graphed them as a distribution to generate a representative picture of usage across a diverse set of workloads.
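One way to picture this calculation is sketched below. The data layout (a mapping from function name to daily `(used, configured)` pairs), the 20-point bucket width, and the sample values are all assumptions made for the example rather than a description of our actual pipeline. The sketch also assumes that every function has at least one sampled day with a non-zero configured value.

```python
from collections import Counter


def average_utilization(daily_samples):
    """Mean of used/configured across sampled days, as a fraction."""
    ratios = [used / configured
              for used, configured in daily_samples
              if configured > 0]
    return sum(ratios) / len(ratios)


def utilization_histogram(functions, bucket_width=20):
    """Count functions per utilization bucket, keyed by the bucket's lower bound."""
    counts = Counter()
    for samples in functions.values():
        percent = min(average_utilization(samples) * 100, 100.0)
        # Fold exactly 100 percent into the top bucket (for example, 80 to 100).
        bucket = min(int(percent // bucket_width) * bucket_width,
                     100 - bucket_width)
        counts[bucket] += 1
    return dict(sorted(counts.items()))


# Invented samples: (concurrency used, Provisioned Concurrency configured) per day.
sampled = {
    "resize-image": [(5, 10), (5, 10)],
    "checkout": [(10, 10)],
    "nightly-report": [(1, 4), (1, 4)],
}
print(utilization_histogram(sampled))  # {20: 1, 40: 1, 80: 1}
```

Functions that land in the lower buckets are candidates for a smaller allocation, while those that sit at the very top of the highest bucket may be exhausting their allocation and still encountering cold starts.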

Fact #7

Our investigation focuses only on CloudFormation usage, which means that it excludes Lambda functions deployed manually through the AWS console or with a different infrastructure-as-code tool like Terraform.