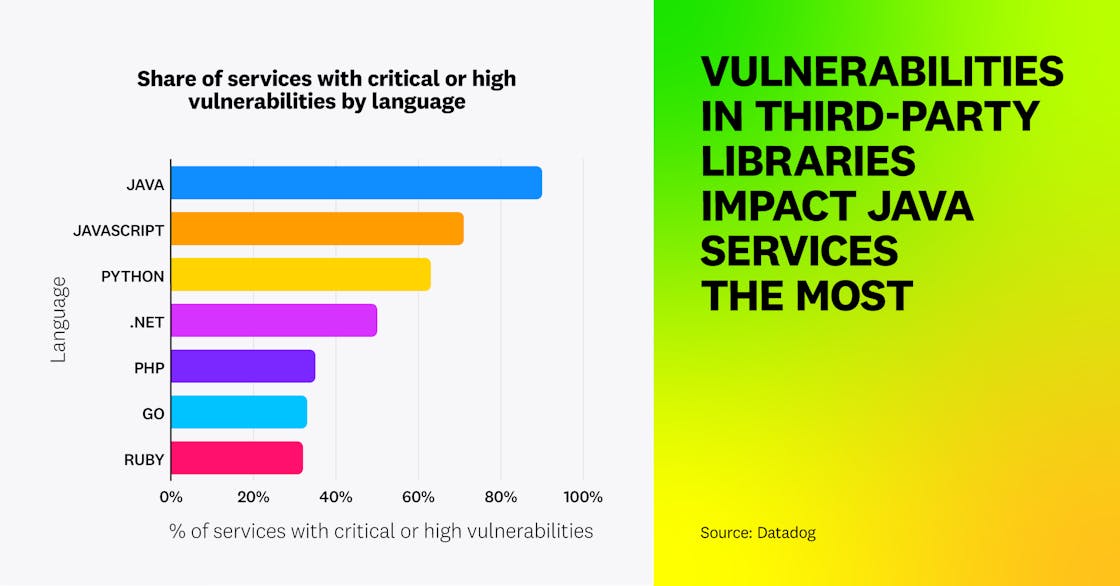

Java services are the most impacted by third-party vulnerabilities

By analyzing the security posture of a range of applications, written in a variety of programming languages, we have identified that Java services are the most affected by third-party vulnerabilities: 90 percent of Java services are vulnerable to one or more critical or high-severity vulnerabilities introduced by a third-party library, versus an average of 47 percent for other technologies.

Java services are also more likely to be vulnerable to real-world exploits with documented use by attackers. The US Cybersecurity and Infrastructure Security Agency (CISA) keeps a running list of vulnerabilities that are exploited in the wild by threat actors, the Known Exploited Vulnerabilities (KEV) catalog. This continuously updated list is a good way to identify the most impactful vulnerabilities that attackers are actively exploiting to compromise systems. When we looked at the vulnerabilities in that list, we found that Java services are overrepresented: 55 percent of Java services are affected, versus 7 percent of those built using other languages.

The pattern of overrepresentation holds true even when focusing on specific categories of vulnerabilities—for example, 23 percent of Java services are vulnerable to remote code execution (RCE), impacting 42 percent of organizations. The prevalence of impactful vulnerabilities in popular Java libraries—including Tomcat, the Spring Framework, Apache Struts, Log4j, and ActiveMQ—may partly explain these high numbers. The most common vulnerabilities are:

- Spring4Shell (CVE-2022-22965)

- Log4Shell (CVE-2021-45046 and CVE-2021-44228)

- An RCE in Apache ActiveMQ (CVE-2023-46604)

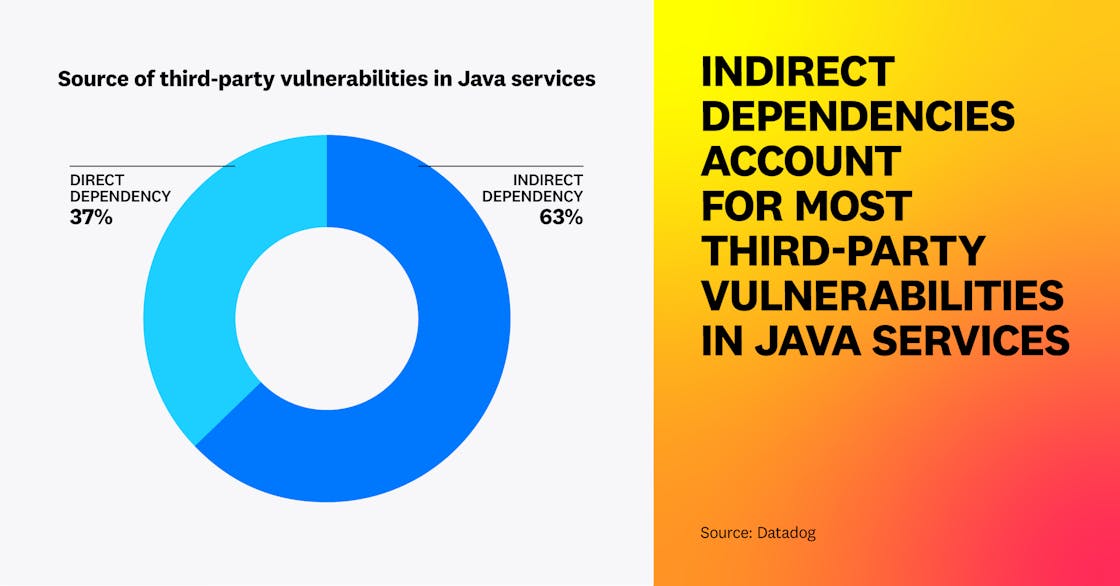

The hypothesis is reinforced when we examine where these vulnerabilities typically originate. In Java, 63 percent of high and critical vulnerabilities derive from indirect dependencies—i.e., third-party libraries that have been indirectly packaged with the application. These vulnerabilities are typically more challenging to identify, as the additional libraries in which they appear are often introduced into an application unknowingly.

It’s therefore essential to consider the full dependency tree, not only direct dependencies, when scanning for application vulnerabilities. It’s also essential to know whether any new dependency added to an application is well-maintained and frequently upgrades its own dependencies. Frameworks such as the OpenSSF Scorecard are helpful to quickly assess the health of open source libraries.
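As a minimal sketch of what considering the full dependency tree means in practice, the snippet below walks a dependency graph and separates direct from indirect dependencies. The graph contents and package names are hypothetical placeholders; a real scanner would build the graph from the build tool's resolved dependency output.

```python
from collections import deque

def classify_dependencies(graph, root):
    """Split every package reachable from `root` into direct and indirect sets."""
    direct = set(graph.get(root, []))
    seen = set(direct)
    queue = deque(direct)
    # Breadth-first walk so that each package is visited once, even in a cyclic graph.
    while queue:
        package = queue.popleft()
        for child in graph.get(package, []):
            if child not in seen and child != root:
                seen.add(child)
                queue.append(child)
    return direct, seen - direct

# Hypothetical graph: package -> packages it depends on.
GRAPH = {
    "my-service": ["web-framework", "json-lib"],
    "web-framework": ["logging-lib", "json-lib"],
    "logging-lib": ["lookup-helper"],
}

direct, indirect = classify_dependencies(GRAPH, "my-service")
print("direct:", sorted(direct))
print("indirect:", sorted(indirect))
```

A scanner that only inspects `direct` would never see `logging-lib` or `lookup-helper`, which is where most of the high and critical findings described above would sit.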

“The attack surface of your organization isn't just the public-facing code you've written—it’s also your applications’ dependencies, both direct and indirect. How are you tracking vulnerabilities in these dependencies? Furthermore, are you able to alert early upon compromise? How do you limit the damage? While most vulnerabilities aren't worth prioritizing, those vulnerabilities with strong exploitation potential are often found in overprivileged applications with long-lived credentials, increasing their potential severity.”

Founder at Rogue Valley Information Security and SANS Instructor

Attack attempts from automated security scanners are mostly unactionable noise

We analyzed a large number of exploitation attempts against applications across various languages. We found that attacks coming from automated security scanners represent by far the largest number of exploitation attempts. These scanners are generally open source tools that attackers attempt to run at scale, scanning the whole internet to identify vulnerable systems. Popular examples of these tools include Nuclei, ZGrab, and SQLmap.

We identified that the vast majority of attacks performed by automated security scanners are harmless and only generate noise for defenders. Out of tens of millions of malicious requests that we identified coming from such scanners, only 0.0065 percent successfully triggered a vulnerability. This shows that it’s critical to have a strong framework for alert prioritization in order to enable defenders to effectively monitor raw web server logs or perimeter web application firewall (WAF) alerts. Integrating threat intelligence and application runtime context into security detections can help organizations filter for the most critical threats.
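One way to picture the use of application runtime context is to discard attack attempts whose payload targets a technology the service does not run. The sketch below assumes each attempt has already been labeled with a targeted technology; the `AttackAttempt` fields, the `SERVICE_TECH` inventory, and the service names are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class AttackAttempt:
    source_tool: str          # scanner that sent the request, e.g. "nuclei"
    targeted_technology: str  # technology the payload is designed to exploit
    service: str              # service that received the request

# Hypothetical runtime context: service name -> technologies actually in use.
SERVICE_TECH = {
    "checkout": {"java", "spring"},
    "blog": {"php", "wordpress"},
}

def is_actionable(attempt, service_tech):
    """Keep an attempt only if it targets a technology the service really runs."""
    return attempt.targeted_technology in service_tech.get(attempt.service, set())

attempts = [
    AttackAttempt("nuclei", "wordpress", "checkout"),  # noise: checkout is not WordPress
    AttackAttempt("nuclei", "spring", "checkout"),
    AttackAttempt("sqlmap", "php", "blog"),
]
actionable = [a for a in attempts if is_actionable(a, SERVICE_TECH)]
print(f"{len(actionable)} of {len(attempts)} attempts are worth a closer look")
```

A match only means the attempt is relevant to the service, not that it succeeded, so this kind of filter narrows the queue rather than replacing investigation.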

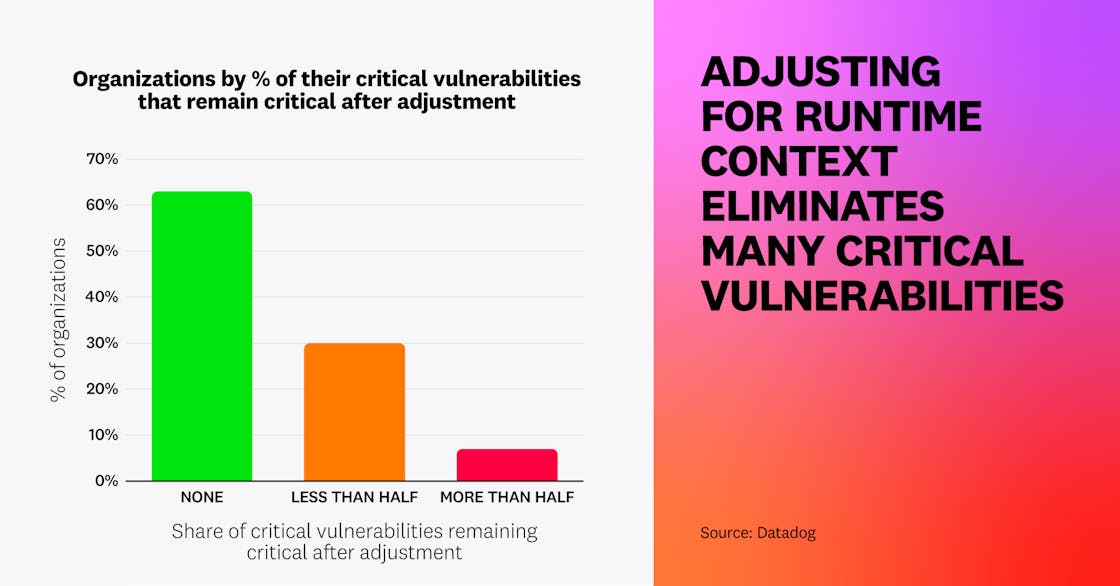

Only a small portion of identified vulnerabilities are worth prioritizing

In 2023, over 4,000 high and 1,000 critical vulnerabilities were identified and inventoried in the Common Vulnerabilities and Exposures (CVE) project. Through our research, we’ve found that the average service is vulnerable to 19 such vulnerabilities. However, according to past academic research, only around 5 percent of vulnerabilities are exploited by attackers in the wild.

Given these numbers, it’s easy to see why practitioners are overwhelmed by the number of vulnerabilities they face, and why they need prioritization frameworks to help them focus on what matters. We analyzed a large number of vulnerabilities and computed an “adjusted score” based on several additional factors to determine the likelihood and impact of a successful exploitation:

- Is the vulnerable service publicly exposed to the internet?

- Does it run in production, as opposed to a development or test environment?

- Is there exploit code available online, or instructions on how to exploit the vulnerability?

We also considered the Exploit Prediction Scoring System (EPSS) score, giving more weight to vulnerabilities that scored higher on this metric. We applied this methodology to all vulnerabilities to assess how many would remain critical based on their adjusted score. We identified that, after applying our adjusted scoring, 63 percent of organizations that had vulnerabilities with a critical CVE severity no longer have any critical vulnerabilities. Meanwhile, 30 percent of organizations see their number of critical vulnerabilities reduced by half or more.

When determining which vulnerabilities to prioritize, organizations should adopt a framework that enables them to consistently evaluate issue severity. Generally, a vulnerability is more serious if:

- The impacted service is publicly exposed

- The impacted service runs in production

- There is exploit code publicly available

While other vulnerabilities might still carry risk, they should likely be addressed only after issues that meet these three criteria.
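The three criteria above can be sketched as a simple ordering function. This is an illustration only, not the adjusted scoring used in our analysis: the `Finding` fields, the placeholder identifiers, and the choice to use EPSS purely as a tie-breaker are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    identifier: str
    publicly_exposed: bool
    in_production: bool
    exploit_available: bool
    epss: float  # EPSS probability, between 0 and 1

def criteria_met(finding):
    """Count how many of the three criteria apply to this finding."""
    return sum([finding.publicly_exposed, finding.in_production, finding.exploit_available])

def prioritize(findings):
    """Most criteria met first; a higher EPSS score breaks ties."""
    return sorted(findings, key=lambda f: (criteria_met(f), f.epss), reverse=True)

# Placeholder findings with made-up values, for illustration only.
findings = [
    Finding("EXAMPLE-1", False, True, False, 0.02),
    Finding("EXAMPLE-2", True, True, True, 0.90),
    Finding("EXAMPLE-3", True, True, False, 0.10),
]
ordered = [f.identifier for f in prioritize(findings)]
print(ordered)
```

Findings that meet all three criteria rise to the top, and the rest remain in the queue rather than being dropped.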

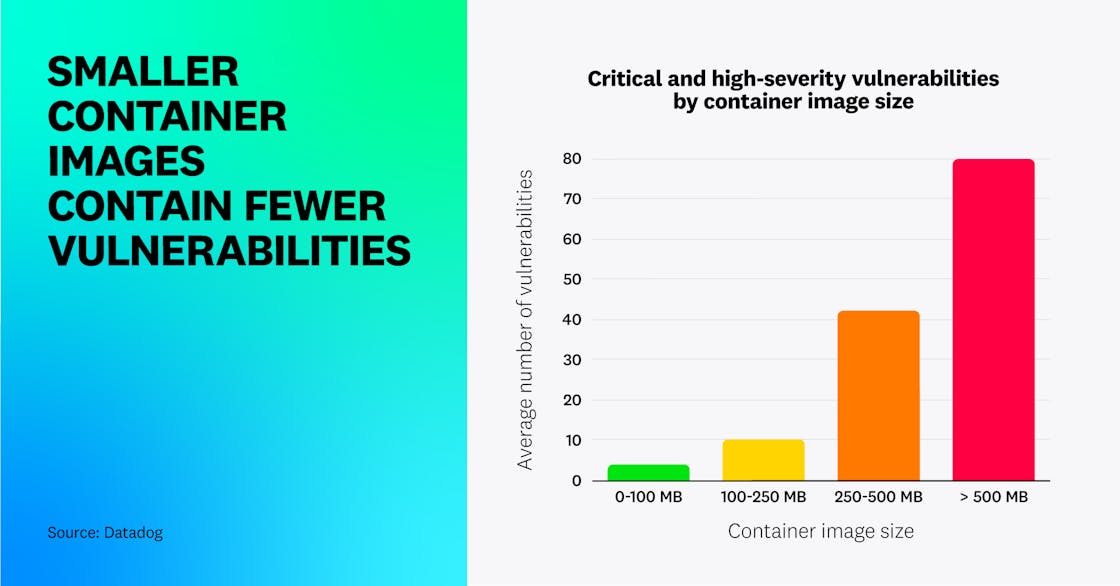

Lightweight container images lead to fewer vulnerabilities

In software development and security alike, less is often more. This is particularly true in the context of third-party dependencies, such as container base images. There are typically multiple options for choosing a base image, including:

- Using a large image based on a classic Linux distribution, such as Ubuntu

- Using a slimmer image based on a lightweight distribution, such as Alpine Linux or BusyBox

- Using a distroless image, which contains only the minimum runtime necessary to run the application—and sometimes, nothing other than the application itself

By analyzing thousands of container images, we identified that the smaller a container image is, the fewer vulnerabilities it is likely to have, presumably because it contains fewer third-party libraries. On average, container images smaller than 100 MB have 4.4 high or critical vulnerabilities, versus 42.2 for images between 250 and 500 MB, and almost 80 for images larger than that.

This demonstrates that in containerized environments, using lightweight images is a critical practice for minimizing the attack surface, as it helps reduce the number of third-party libraries and operating system packages that an application depends on. In addition, thin images lead to reduced storage needs and network traffic, as well as faster deployments. Finally, lightweight container images help minimize the tooling available to an attacker—including system utilities such as curl or wget—which makes exploiting many types of vulnerabilities more challenging.

“When I’m playing the role of the threat actor in container environments like Kubernetes, container images built on distroless make my day harder.”

CEO at consulting firm InGuardians

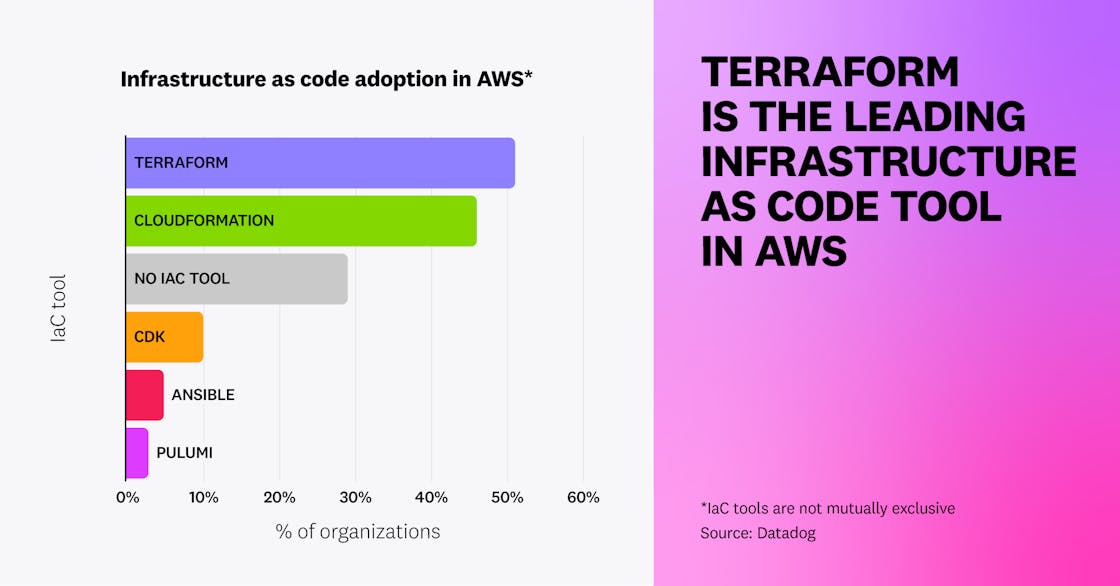

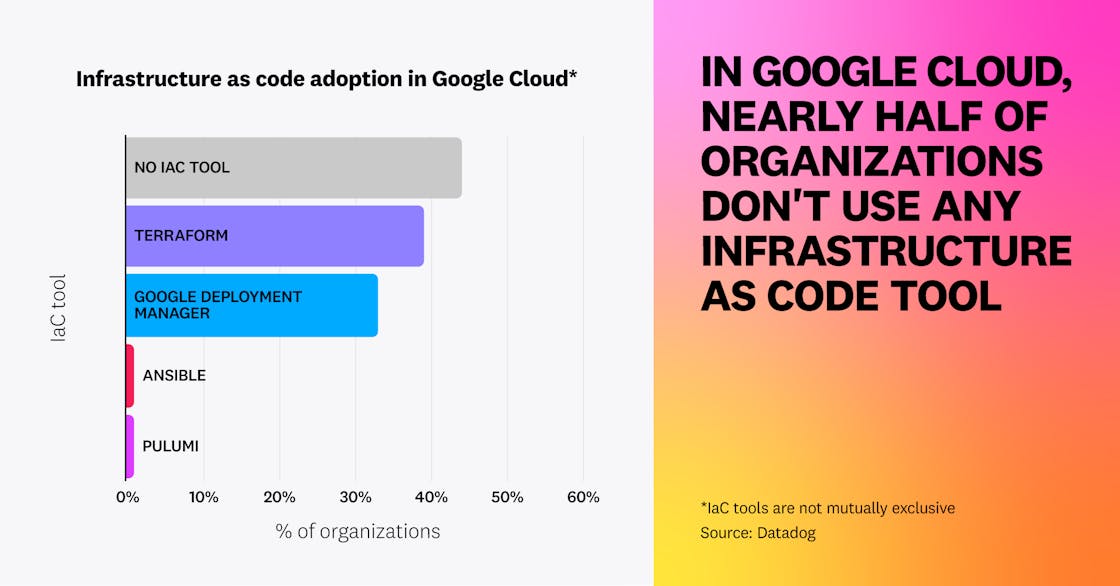

Adoption of infrastructure as code is high, but varies across cloud providers

The concept of infrastructure as code (IaC) dates back to the 1990s with CFEngine and was later popularized by projects like Puppet and Chef. After public cloud computing gained popularity, IaC quickly became a de facto standard for provisioning cloud environments. IaC brings considerable benefits for operations, including version control, traceability, and reproducibility across environments. Its declarative nature also helps DevOps teams understand the desired state, as opposed to reading never-ending bash scripts that describe how to get there.

IaC is also considered a critical practice when securing cloud production environments, as it helps ensure that:

- All changes are peer-reviewed

- Human operations have limited permissions on production environments, since deployments are handled by a CI/CD pipeline

- Organizations can scan IaC code for weak configurations, helping them identify issues before they reach production

We identified that in AWS, over 71 percent of organizations use IaC through at least one popular IaC technology such as Terraform, CloudFormation, or Pulumi. This number is lower in Google Cloud, at 55 percent.

Note that we cannot report on Azure, since Azure Activity Logs don’t log HTTP user agents.

Across AWS and Google Cloud, Terraform is the most popular technology, ranking just ahead of the cloud-specific IaC tools, namely CloudFormation and Google Deployment Manager.
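Since the note on Azure points to HTTP user agents, one plausible way to detect IaC usage is to match the `userAgent` field of CloudTrail events against known tool names. The sketch below is an assumption about how such detection could work, not a description of our exact method; the marker substrings and sample user agent strings are illustrative, and real formats vary by tool and version.

```python
# Assumed substrings, checked in order; real user agent formats vary by tool and version.
IAC_MARKERS = [
    ("pulumi", "Pulumi"),
    ("terraform", "Terraform"),
    ("cloudformation", "CloudFormation"),
]

def detect_iac_tool(user_agent):
    """Return the IaC tool suggested by a user agent string, or None."""
    lowered = (user_agent or "").lower()
    for marker, tool in IAC_MARKERS:
        if marker in lowered:
            return tool
    return None

def iac_tools_in_use(events):
    """Collect the set of IaC tools seen across CloudTrail-style event records."""
    tools = set()
    for event in events:
        tool = detect_iac_tool(event.get("userAgent"))
        if tool is not None:
            tools.add(tool)
    return tools

# Illustrative sample records; only the userAgent field matters here.
events = [
    {"eventName": "RunInstances", "userAgent": "APN/1.0 HashiCorp/1.0 Terraform/1.5.7"},
    {"eventName": "CreateBucket", "userAgent": "cloudformation.amazonaws.com"},
    {"eventName": "DescribeInstances", "userAgent": "aws-cli/2.13.0"},
]
tools = iac_tools_in_use(events)
print(sorted(tools))
```

Under this approach, an organization would count as using IaC as soon as the returned set is non-empty.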

Manual cloud deployments are still widespread

Humans, thankfully, are not machines—and this means we are bound to make mistakes. A major element of quality control, and by extension security, is to automate repetitive tasks that can be taken out of human hands.

In cloud production environments, a CI/CD pipeline is typically responsible for deploying changes to infrastructure and applications. The automation that takes place in this pipeline can be done with IaC tools or through scripts using cloud provider–specific tooling.

Automation ensures that engineers don’t need constant privileged access to the production environment, and that deployments are properly tracked and peer-reviewed. The opposite of this best practice—taking actions manually from the cloud console—is often referred to as click operations, or ClickOps.

By analyzing CloudTrail logs, we identified that at least 38 percent of organizations in AWS had used ClickOps in all their AWS accounts within a 14-day window preceding the writing of this study. According to our definition, this means that these organizations had deployed workloads or taken sensitive actions manually through the AWS Management Console—including in their production environments—during this period of time.
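A rough sketch of how such activity could be flagged in CloudTrail records follows. It assumes that a ClickOps action is a non-read-only event issued from a console session, identified through the `sessionCredentialFromConsole` and `readOnly` record fields; the precise definition used in the study may differ, and the sample events are illustrative.

```python
def is_clickops(event):
    """Flag write actions that were performed from an AWS Management Console session."""
    # CloudTrail only includes sessionCredentialFromConsole when it is true.
    from_console = str(event.get("sessionCredentialFromConsole")).lower() == "true"
    is_write = event.get("readOnly") is False
    return from_console and is_write

# Illustrative records, trimmed to the fields used above.
events = [
    {"eventName": "RunInstances", "readOnly": False, "sessionCredentialFromConsole": "true"},
    {"eventName": "DescribeInstances", "readOnly": True, "sessionCredentialFromConsole": "true"},
    {"eventName": "RunInstances", "readOnly": False},  # issued by a pipeline, not the console
]
manual_actions = [e["eventName"] for e in events if is_clickops(e)]
print(manual_actions)
```

Read-only console activity is deliberately ignored here, since browsing resources in the console does not change the environment.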

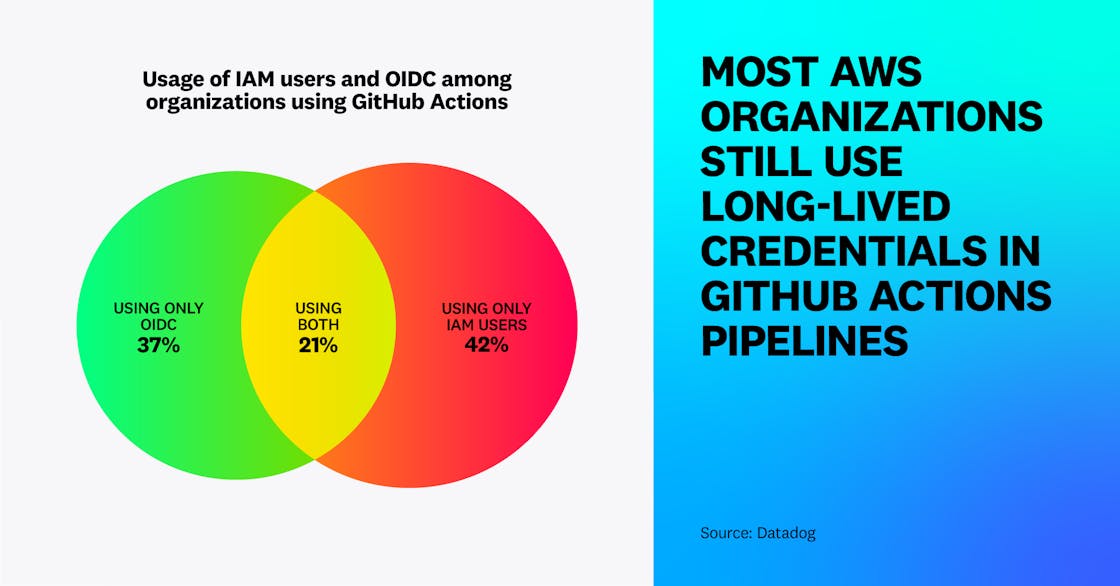

Usage of short-lived credentials in CI/CD pipelines is still too low

In cloud environments, leaks of long-lived credentials are one of the most common causes of data breaches. CI/CD pipelines increase this attack surface because they typically have privileged permissions and their credentials could leak through excessive logging, compromised software dependencies, or build artifacts, as happened in the Codecov breach. This makes using short-lived credentials for CI/CD pipelines one of the most critical aspects of securing a cloud environment.

However, we identified that a substantial number of organizations continue to rely on long-lived credentials in their AWS environments, even in cases where short-lived ones would be both more practical and more secure. Across organizations using GitHub Actions—representing over 31 percent of organizations running in AWS—we found that only 37 percent exclusively use “keyless” authentication based on short-lived credentials and OpenID Connect (OIDC). Meanwhile, 63 percent used IAM users (a form of long-lived credential) at least once to authenticate GitHub Actions pipelines, while 42 percent exclusively used IAM users.

Using keyless authentication in CI/CD pipelines is easier to set up and more secure. Once again, this shows that good operational practices also tend to lead to better security outcomes.
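To make keyless authentication more concrete, the sketch below builds the trust policy for an IAM role that GitHub Actions workflows can assume through OIDC, with no stored access keys. The account ID and repository name are placeholders, and the snippet assumes the GitHub OIDC identity provider has already been registered in the AWS account.

```python
import json

GITHUB_OIDC_HOST = "token.actions.githubusercontent.com"

def github_oidc_trust_policy(account_id, repository):
    """Build a trust policy that lets workflows from one repository assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{GITHUB_OIDC_HOST}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # Only accept tokens issued for AWS STS.
                    "StringEquals": {f"{GITHUB_OIDC_HOST}:aud": "sts.amazonaws.com"},
                    # Only accept tokens from the given repository (any branch or tag).
                    "StringLike": {f"{GITHUB_OIDC_HOST}:sub": f"repo:{repository}:*"},
                },
            }
        ],
    }

# Placeholder account ID and repository.
policy = github_oidc_trust_policy("123456789012", "example-org/example-repo")
print(json.dumps(policy, indent=2))
```

Restricting the `sub` condition to a specific repository matters: without it, any GitHub Actions workflow able to request a token for this audience could attempt to assume the role.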