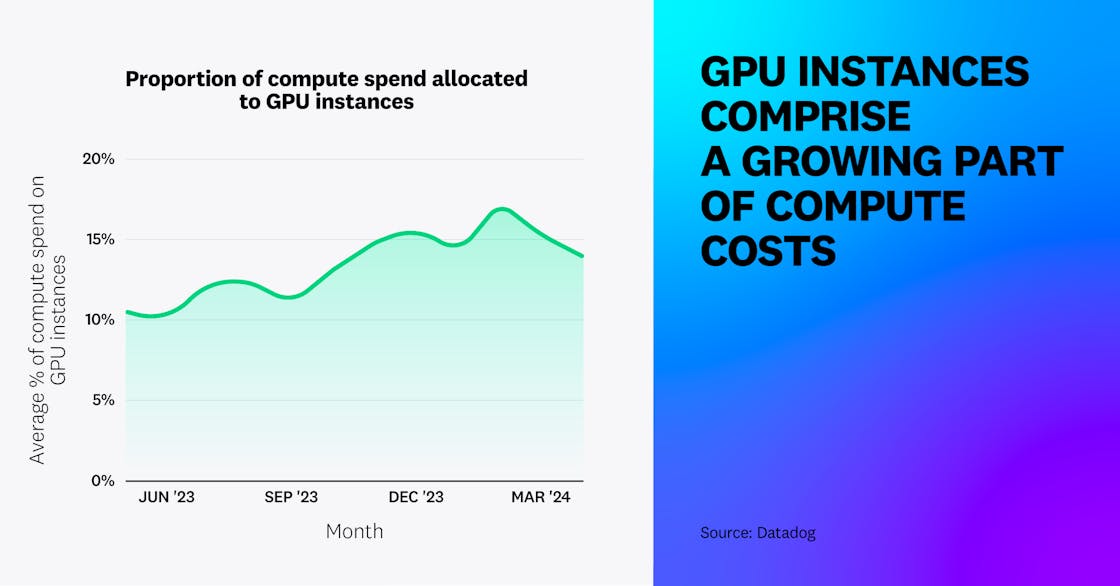

Spending on GPU instances now makes up 14 percent of compute costs

Organizations that use GPU instances have increased the share of their EC2 compute costs that goes to those instances by 40 percent in the last year, from 10 percent to 14 percent. GPUs’ capacity for parallel processing makes them critical for training LLMs and executing other AI workloads, where they can be more than 200 percent faster than CPUs.
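
Because both figures are shares of compute costs, the "40 percent" is a relative increase (from 10 to 14 percent), not a 40-point jump. A minimal Python sketch of the two ways to express the change, using only the shares quoted above:

```python
def relative_change(old: float, new: float) -> float:
    """Change from old to new, expressed as a fraction of old."""
    return (new - old) / old


def point_change(old_pct: float, new_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return new_pct - old_pct


# GPU instances' share of EC2 compute costs, as quoted in the text.
share_last_year = 10.0  # percent
share_this_year = 14.0  # percent

print(f"{point_change(share_last_year, share_this_year):.0f} percentage points")  # 4 percentage points
print(f"{relative_change(share_last_year, share_this_year):.0%} relative increase")  # 40% relative increase
```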

GPU-based EC2 instance types generally cost more than instances that don’t use GPUs. But the most widely used type, the G4dn (used by 74 percent of GPU adopters), is also the least expensive of them. This suggests that many customers are experimenting with AI, applying the G4dn to early efforts in adaptive AI, machine learning (ML) inference, and small-scale training. We expect that as these organizations expand their AI activities and move them into production, they will spend a larger proportion of their cloud compute budget on GPU instances.

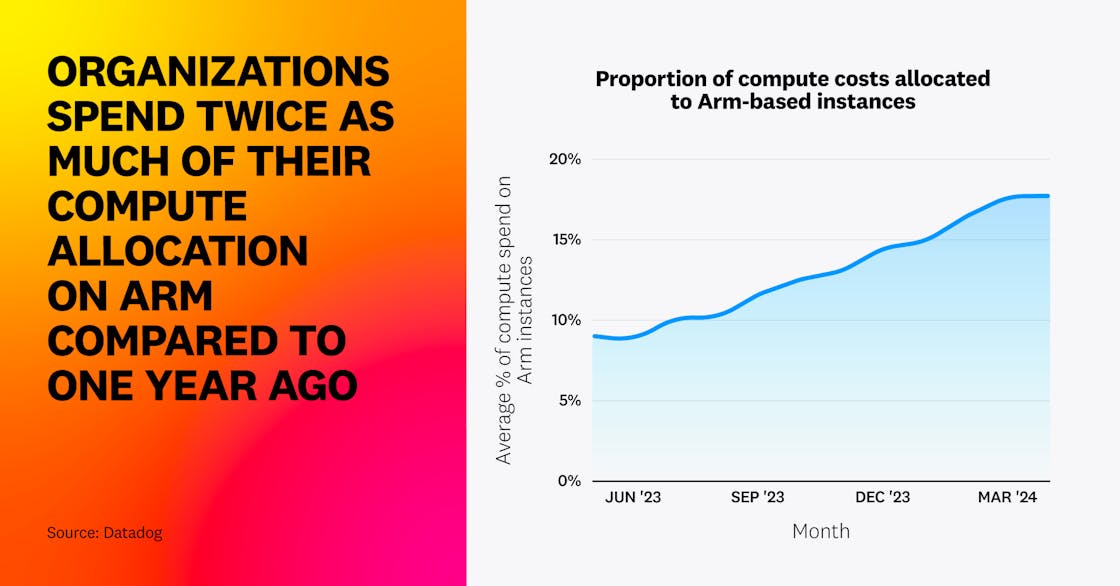

Arm spending as a proportion of compute costs has doubled in the past year

We observed that, on average, organizations that use Arm-based instances spend 18 percent of their EC2 compute costs on them, double the share they spent a year ago. Arm-based instance types use up to 60 percent less energy than comparable EC2 instances and often provide better performance at a lower cost.

The most common type of Arm-based instance we see in use is T4g, which is used by about 65 percent of organizations. These instances are powered by Graviton2 processors and provide up to 40 percent better price performance than their x86-64-based T3 counterparts.
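
Price performance is performance per dollar, so a 40 percent improvement does not mean a 40 percent lower bill for the same work. A minimal sketch of the conversion, assuming the workload's throughput scales the same way as the price-performance measurement (and remembering that "up to" describes the best case):

```python
def cost_for_same_work(price_performance_gain: float) -> float:
    """Fraction of the original cost needed to complete the same work.

    price_performance_gain is the relative improvement in performance per
    dollar, e.g. 0.40 for "40 percent better price performance".
    """
    return 1.0 / (1.0 + price_performance_gain)


fraction = cost_for_same_work(0.40)
print(f"Same work at {fraction:.1%} of the cost, a {1 - fraction:.1%} saving")
# Same work at 71.4% of the cost, a 28.6% saving
```

Under that assumption, the best-case saving for an unchanged workload is roughly 29 percent rather than 40 percent.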

Arm-based instances still account for only a minority of EC2 compute spending, but the increase we’ve seen over the last year has been steady and sustained. This suggests to us that organizations are beginning to update their applications to take advantage of more efficient processors and slow the overall growth of their compute spend.

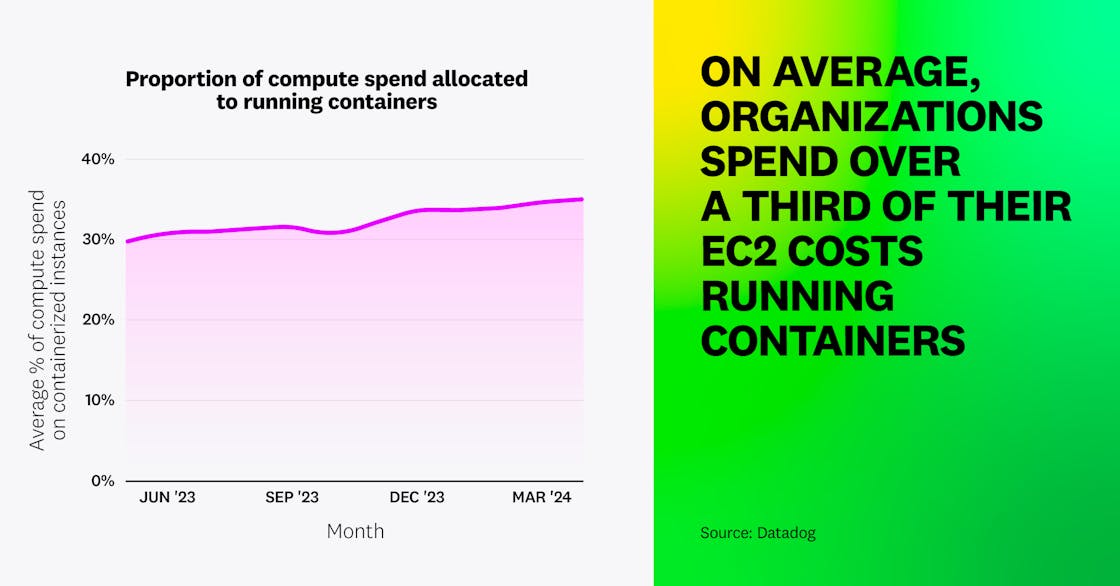

Container costs comprise one third of EC2 spend

Organizations spend about 35 percent of their EC2 compute costs on running containers, up from 30 percent a year ago. This includes EC2 instances deployed as Kubernetes control-plane or worker nodes in self-managed clusters, as well as instances that run in ECS and EKS clusters. Across all of the customers we analyzed, about one quarter allocate more than 75 percent of their EC2 spend to running containers.

We expect the proportion of cloud spend allocated to containers to keep growing as organizations increasingly benefit from the associated efficiencies, including streamlined deployments, improved dependency management, and more efficient use of infrastructure. But they’ll also face the added complexity of attributing the costs of ephemeral, shared infrastructure and of provisioning container infrastructure cost-efficiently.
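
One common way to attribute shared node costs is to split them in proportion to each workload's resource requests. The sketch below assumes a single node with a fixed hourly price and allocates by CPU requests only; the node price, pod names, and request sizes are hypothetical. Real cost tools typically also weigh memory (and GPUs) and repeat the calculation for every interval in which ephemeral pods run.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    cpu_request: float  # vCPUs requested by the pod


def allocate_node_cost(node_hourly_cost: float, node_vcpus: float,
                       pods: list[Pod]) -> dict[str, float]:
    """Split one node's hourly cost across its pods by CPU request.

    Capacity that no pod has requested is reported as "unallocated".
    """
    requested = sum(pod.cpu_request for pod in pods)
    if requested > node_vcpus:
        raise ValueError("pods request more vCPUs than the node provides")
    cost_per_vcpu = node_hourly_cost / node_vcpus
    allocation = {pod.name: pod.cpu_request * cost_per_vcpu for pod in pods}
    allocation["unallocated"] = (node_vcpus - requested) * cost_per_vcpu
    return allocation


# Hypothetical node ($0.40/hour, 4 vCPUs) and pods, for illustration only.
pods = [Pod("checkout", 1.5), Pod("search", 1.0)]
for name, cost in allocate_node_cost(0.40, 4.0, pods).items():
    print(f"{name}: ${cost:.3f}/hour")
```

The "unallocated" line is the per-node view of the overprovisioning that is hard to attribute to any single team.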

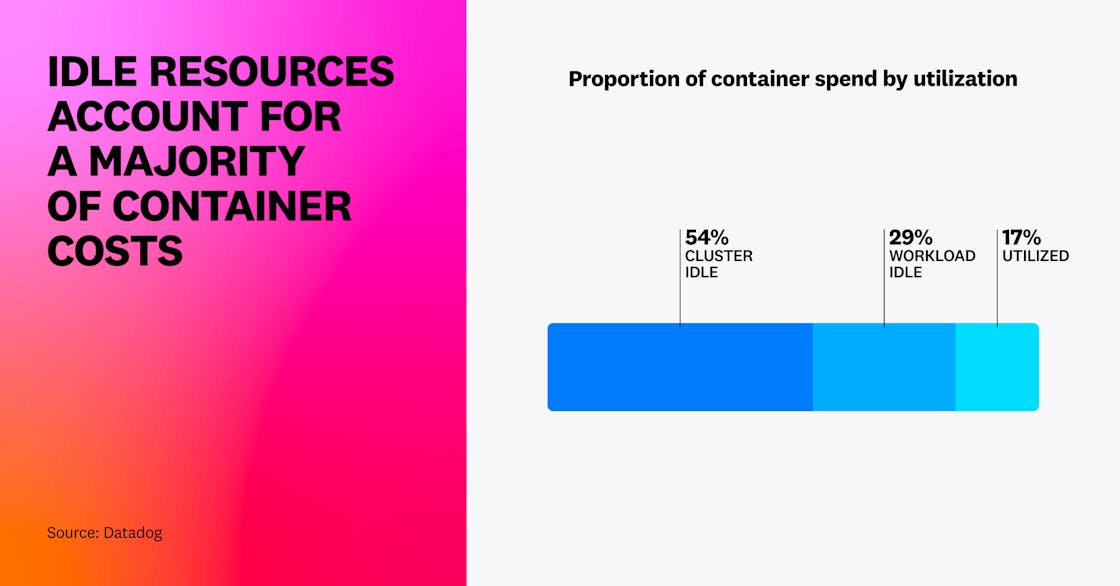

More than 80 percent of container spend is wasted on idle resources

Our research shows that 83 percent of container costs are associated with idle resources. About 54 percent of container spend goes to cluster idle, which is the cost of overprovisioned cluster infrastructure. The remaining 29 percent goes to workload idle, which comes from resource requests that are larger than their workloads require.
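
The two idle categories can be computed from three quantities per resource: what the cluster provisions, what workloads request, and what they actually use. Because 54 + 29 = 83, both figures are shares of total container spend. A minimal sketch, assuming a single resource measured in resource-hours, a uniform unit cost, and usage that never exceeds requests; the cluster sizes are hypothetical, chosen so the output matches the averages quoted above:

```python
def idle_breakdown(provisioned: float, requested: float, used: float,
                   cost_per_unit: float) -> dict[str, float]:
    """Split container compute cost into used, workload idle, and cluster idle.

    Quantities are resource-hours (e.g. vCPU-hours). Assumes usage never
    exceeds requests and requests never exceed provisioned capacity.
    """
    if not 0 <= used <= requested <= provisioned:
        raise ValueError("expected 0 <= used <= requested <= provisioned")
    return {
        "used": used * cost_per_unit,
        # Requested by workloads but not consumed.
        "workload_idle": (requested - used) * cost_per_unit,
        # Provisioned in the cluster but not requested by any workload.
        "cluster_idle": (provisioned - requested) * cost_per_unit,
    }


# Hypothetical cluster: 100 vCPU-hours provisioned, 46 requested, 17 used.
costs = idle_breakdown(provisioned=100, requested=46, used=17, cost_per_unit=1.0)
total = sum(costs.values())
for kind, cost in costs.items():
    print(f"{kind}: {cost / total:.0%}")
# used: 17%, workload_idle: 29%, cluster_idle: 54%
```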

We don’t expect that wasted container spend can be eliminated entirely. It’s difficult for development teams to accurately forecast each new application’s resource requirements, which makes it hard to allocate those resources efficiently. Resource needs also often change with the nature and utilization of the workloads. Organizations can autoscale their cluster infrastructure and individual workloads, but autoscaling is complex: teams can tune scaling parameters to match workload traffic patterns, but the resulting efficiency gains are often marginal and elusive.
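
For individual workloads, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The Python sketch below implements just that rule plus min/max clamping; the replica bounds and metric values are hypothetical, and the real controller also handles unready pods, missing metrics, and stabilization windows. It illustrates the tuning tradeoff: a lower target utilization adds headroom, which shows up as workload idle, while a higher target risks saturation during traffic spikes.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count from the core Kubernetes HPA scaling rule.

    Omits readiness handling, missing metrics, and stabilization windows.
    """
    ratio = current_metric / target_metric
    if abs(1.0 - ratio) <= tolerance:
        # Close enough to target: skip scaling to avoid flapping.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4 (within tolerance)
print(desired_replicas(10, current_metric=20, target_metric=60))  # 4
```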

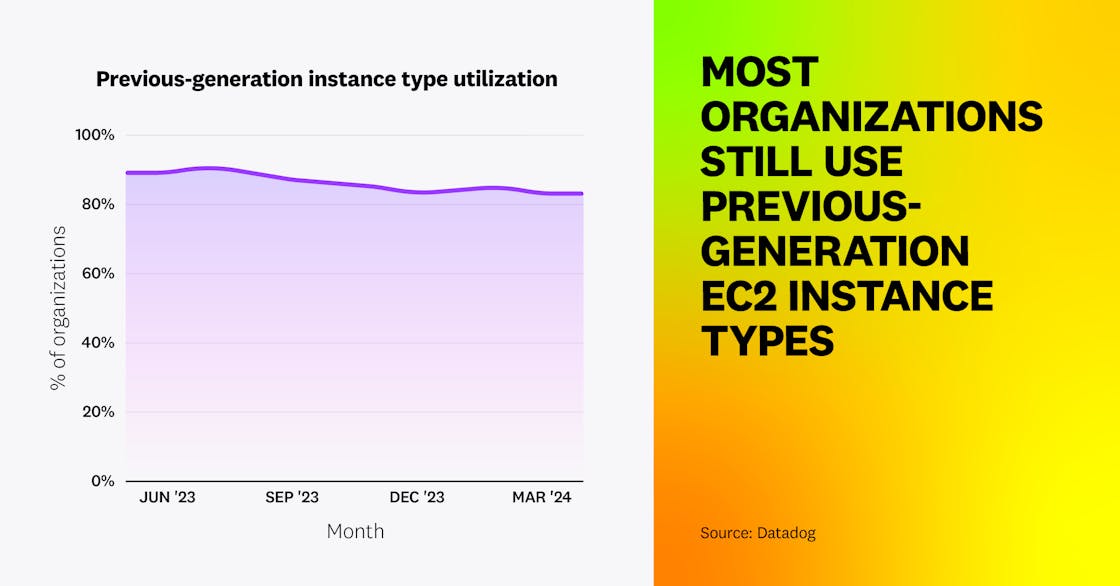

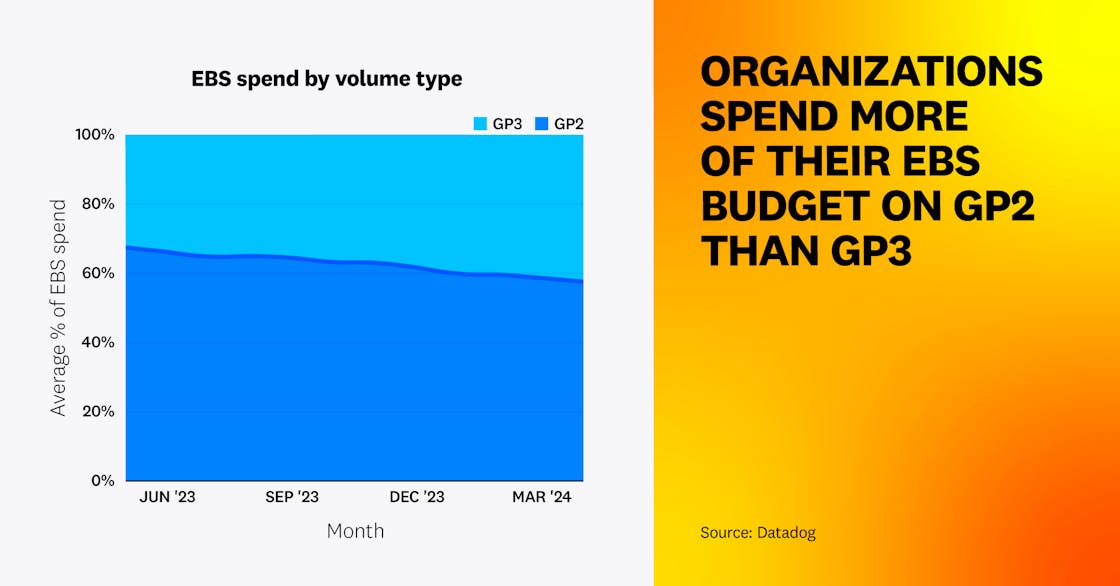

Previous-generation technologies are still widely used

AWS’s current infrastructure offerings often outperform their previous-generation versions while also costing less. However, our data shows that although organizations are making efforts to modernize, previous-generation EC2 instance types and EBS volume types still have a significant presence in many of their environments.

We found that 83 percent of organizations still use previous-generation EC2 instance types, which is down from 89 percent one year ago. These organizations spend on average about 17 percent of their EC2 budget on them.

In the case of EBS, current-generation gp3 volumes cost about 20 percent less than gp2 volumes, but organizations still spend more on the older volumes. The costs of gp2 volumes represent 58 percent of the average organization’s EBS spend, down from 68 percent a year ago.
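
As a rough estimate of what the remaining gp2 share leaves on the table: if gp3 costs about 20 percent less for the same capacity, the saving is the gp2 share of EBS spend × the fraction migrated × 0.20. The sketch assumes a flat 20 percent difference and no extra provisioned IOPS or throughput on gp3 beyond its baseline (which AWS bills separately), and it ignores migration effort.

```python
def gp3_migration_savings(gp2_share: float, migrated_fraction: float = 1.0,
                          gp3_discount: float = 0.20) -> float:
    """Fraction of total EBS spend saved by moving gp2 capacity to gp3.

    gp2_share: fraction of EBS spend currently on gp2 volumes.
    migrated_fraction: fraction of that gp2 spend actually migrated.
    gp3_discount: how much cheaper gp3 is than gp2 for the same capacity.
    """
    return gp2_share * migrated_fraction * gp3_discount


# Using the average gp2 share quoted above (58 percent of EBS spend).
for migrated in (0.25, 0.5, 1.0):
    saving = gp3_migration_savings(0.58, migrated)
    print(f"migrate {migrated:.0%} of gp2 -> save {saving:.1%} of EBS spend")
```

Under these assumptions, fully migrating the average organization's gp2 volumes would save about 11.6 percent of its total EBS spend (0.58 × 0.20).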

While we expect gp2 volumes to remain in use for the near future, we predict that organizations will gradually reduce their reliance on them. The challenges of migrating, including the complexity of moving large volumes of data, the cross-team collaboration it requires, and the difficulty of predicting how workloads will perform on newer-generation technologies, all contribute to the slow rate of adoption. However, the cost reductions and performance gains offered by newer EC2 and EBS versions, and by even newer technologies in the future, will continue to motivate migration.

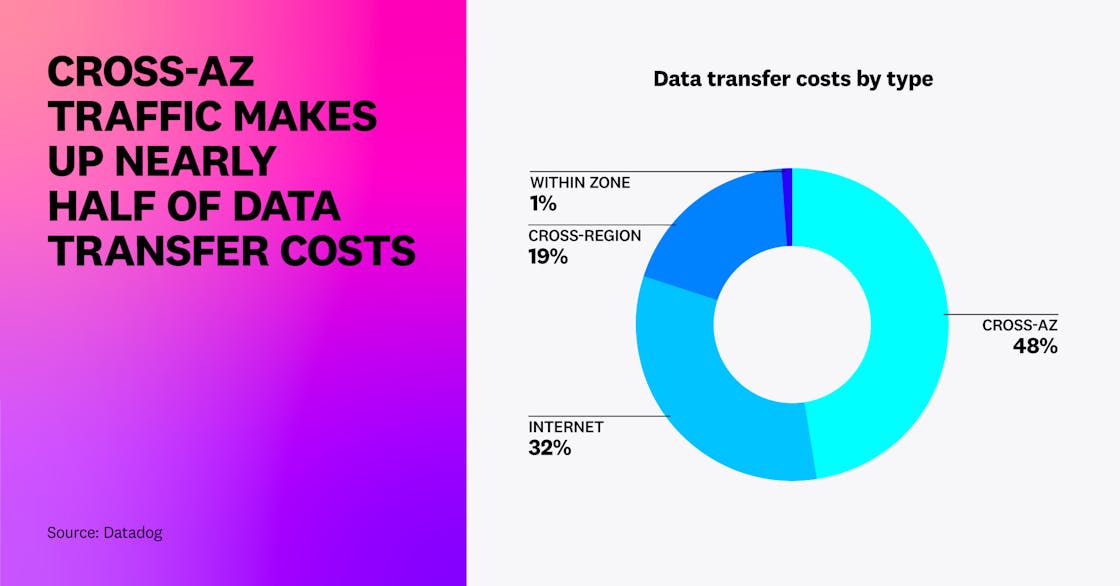

Cross-AZ traffic makes up half of data transfer costs

Our research found that, on average, organizations spend almost as much on sending data from one availability zone (AZ) to another as they do on all other types of data transfer combined—including VPNs, gateways, ingress, and egress. Cross-AZ traffic may be unavoidable in some scenarios, such as when an application’s high-availability architecture requires that instances be deployed in more than one AZ. It may also be an inevitable side effect of organizational changes that come as teams, services, and applications scale.

Wherever the costs come from, their impact is substantial: 98 percent of organizations are affected by cross-AZ charges. This may indicate a near-universal opportunity to optimize cloud costs, such as by colocating related resources within a single AZ whenever availability requirements allow.
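
A back-of-the-envelope estimate shows how quickly cross-AZ charges add up and how much colocation can save. The sketch assumes a rate of $0.01 per GB charged on both the sending and the receiving side, a 30-day month, and a hypothetical 500 GB/day of traffic between a service and its dependency; check current pricing for your region before relying on the numbers.

```python
def monthly_cross_az_cost(gb_per_day: float, cross_az_fraction: float = 1.0,
                          rate_per_gb: float = 0.01, days: int = 30) -> float:
    """Estimated monthly charge for traffic between availability zones.

    Assumes each GB is billed once on the sending side and once on the
    receiving side at rate_per_gb (an assumed rate; check current pricing).
    """
    cross_az_gb = gb_per_day * days * cross_az_fraction
    return cross_az_gb * rate_per_gb * 2


# Hypothetical: a service sends 500 GB/day to a dependency in another AZ.
print(f"all traffic crosses AZs: ${monthly_cross_az_cost(500):,.2f}")
print(f"20% crosses after colocating: ${monthly_cross_az_cost(500, 0.2):,.2f}")
```

With these assumptions the first line prints $300.00 and the second $60.00; the cost scales linearly with the share of traffic that still has to cross AZs.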

In some cases, cloud providers have stopped charging for certain types of data transfer. It’s difficult to predict how these changes might evolve, but if providers relax data transfer costs further, future cross-AZ traffic may become less of a factor in cloud cost efficiency.

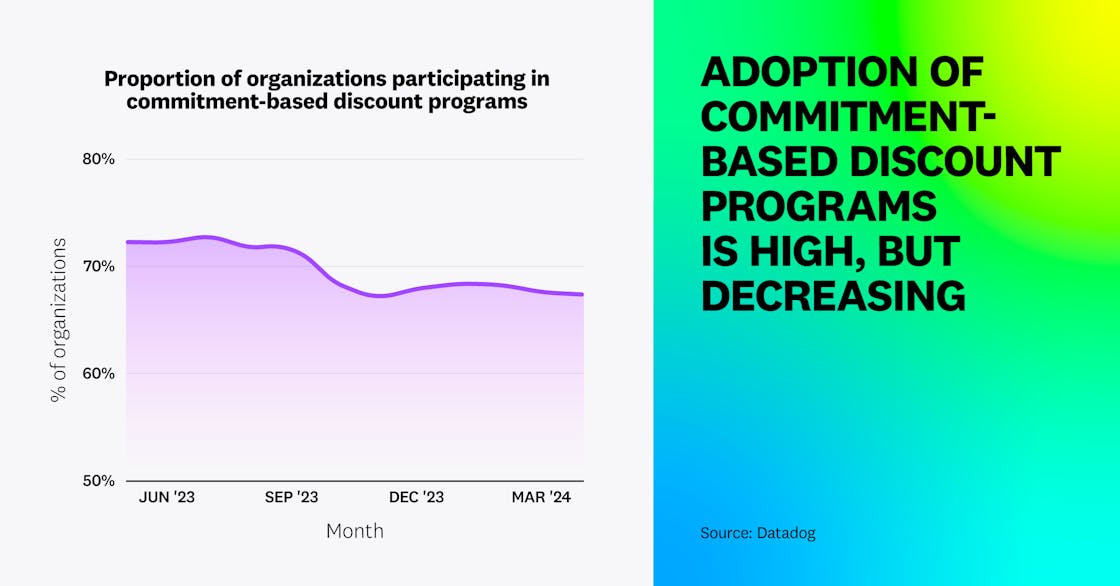

A decreasing percentage of organizations use commitment-based discounts

Cloud service providers offer discounts on many of their services—for example, AWS has discount programs for Amazon EC2, Amazon RDS, Amazon SageMaker, and others. Most organizations opt in to these programs, committing to a certain amount of future spend or usage of the service. But our data shows a decreasing proportion of organizations participating—67 percent, compared to 72 percent last year.

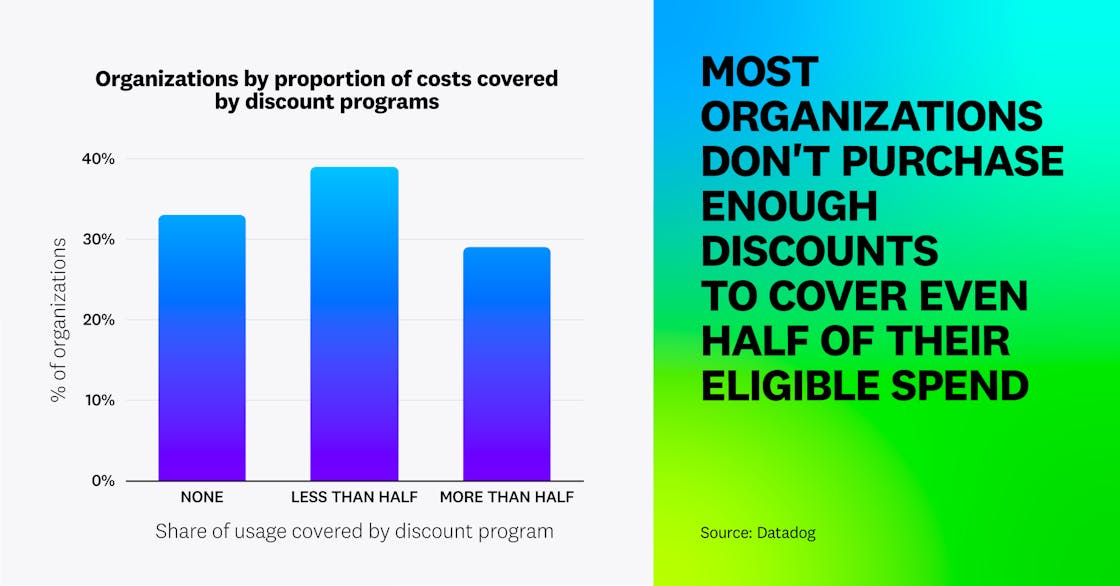

Further, we see relatively low engagement in these discount programs: only 29 percent of organizations purchase enough commitments to cover more than half of their eligible cloud spend. This underuse suggests that organizations are hesitant to commit up front to a specific amount of usage or spend, possibly because they can’t forecast their resource needs confidently enough. They may also struggle to make purchasing decisions when it’s unclear which teams are responsible for those decisions and who owns the affected resources. We see an opportunity here: as organizations gain a fuller understanding of the usage patterns behind their cloud costs, most of them can use discounts to improve cost efficiency.
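
Forecasting risk can be made concrete: a commitment is paid whether or not it is used, so it only beats on-demand pricing if enough of it is consumed. The sketch below assumes a single hypothetical discount rate and models the commitment's cost as (1 - discount) × the on-demand price of the committed usage; real discount rates vary by program, term, and payment option.

```python
def breakeven_utilization(discount: float) -> float:
    """Lowest utilization at which a commitment costs no more than on-demand."""
    return 1.0 - discount


def effective_discount(discount: float, utilization: float) -> float:
    """Realized discount vs. on-demand when only part of a commitment is used.

    A negative result means the commitment cost more than paying on-demand.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return 1.0 - (1.0 - discount) / utilization


DISCOUNT = 0.30  # hypothetical rate for illustration
print(f"break-even utilization: {breakeven_utilization(DISCOUNT):.0%}")
for utilization in (1.0, 0.9, 0.6):
    print(f"{utilization:.0%} used -> {effective_discount(DISCOUNT, utilization):+.0%} vs on-demand")
```

With a 30 percent discount, the commitment breaks even at 70 percent utilization, which leaves some room for forecasting error; below that point it costs more than on-demand.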

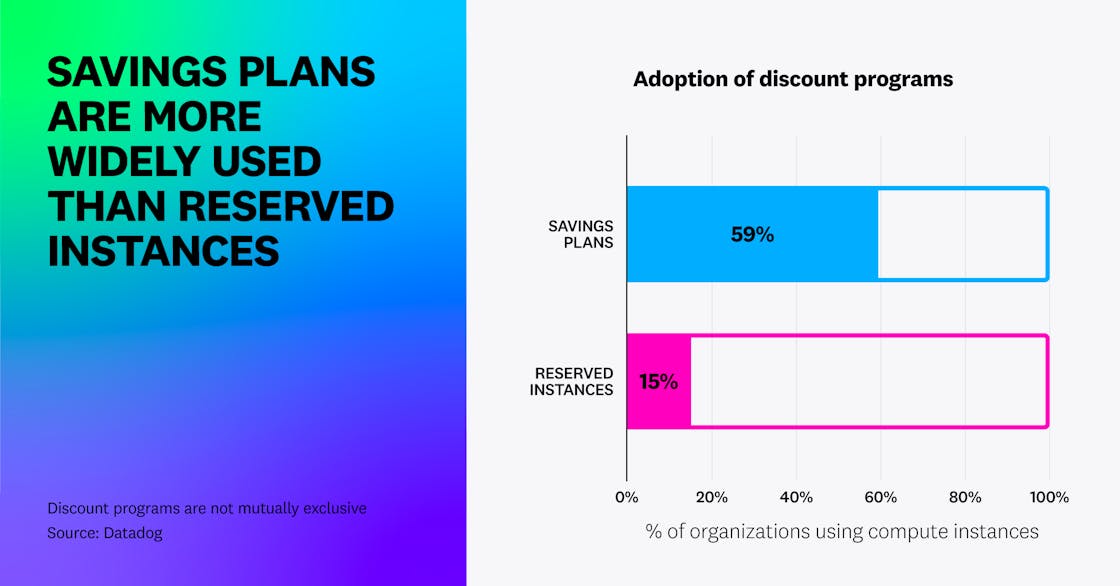

More than four times as many organizations use Savings Plans vs. Reserved Instances

AWS users have two commitment-based options for discounting their EC2 costs: Savings Plans, in which customers commit to a consistent amount of spend per hour, and Reserved Instances, in which they commit to usage of a specific instance type (in a specific availability zone, for zonal reservations). Savings Plans are more flexible, and we found that most organizations (59 percent) take advantage of this by applying Savings Plans to at least some of their EC2 costs. Far fewer organizations, just 15 percent, use Reserved Instances. This could suggest that organizations are more confident about how much they’ll need to spend on EC2 than about which instance types they’ll need to deploy and where.
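
The flexibility difference can be illustrated with a simplified model in which both programs give the same discount and the only difference is which usage a commitment can cover. The discount rate, instance type names, and hourly costs are hypothetical. In practice, discount rates differ by program, term, and instance family, regional Reserved Instances can offer some instance-size flexibility, and EC2 Instance Savings Plans are limited to one instance family in a region, while Compute Savings Plans apply more broadly.

```python
from typing import Optional

DISCOUNT = 0.30  # hypothetical; applied to both programs to isolate flexibility


def hourly_bill(usage: dict[str, float], commitment: float,
                covered_types: Optional[set[str]] = None) -> float:
    """Hourly EC2 bill under a simplified commitment model.

    usage: on-demand cost per hour, keyed by instance type.
    commitment: on-demand-equivalent usage per hour that the commitment covers.
    covered_types: instance types the commitment can apply to; None means
        any type (Savings Plan-like), a set means only those types (RI-like).
    """
    eligible = sum(cost for itype, cost in usage.items()
                   if covered_types is None or itype in covered_types)
    covered = min(eligible, commitment)
    uncovered = sum(usage.values()) - covered
    # The commitment is paid for every hour, whether or not usage fills it.
    return commitment * (1 - DISCOUNT) + uncovered


before = {"type_a": 10.0}  # hypothetical fleet on one instance type
after = {"type_b": 10.0}   # same spend after migrating to a different type

for label, usage in (("before", before), ("after", after)):
    ri = hourly_bill(usage, commitment=10.0, covered_types={"type_a"})
    sp = hourly_bill(usage, commitment=10.0)
    print(f"{label}: RI-like ${ri:.2f}/h, Savings Plan-like ${sp:.2f}/h")
```

When the fleet moves to a different instance type, the RI-like commitment keeps billing while covering nothing ($17.00/h versus $7.00/h in this model), whereas the Savings Plan-like commitment follows the usage.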