What Is Cross Browser Testing?

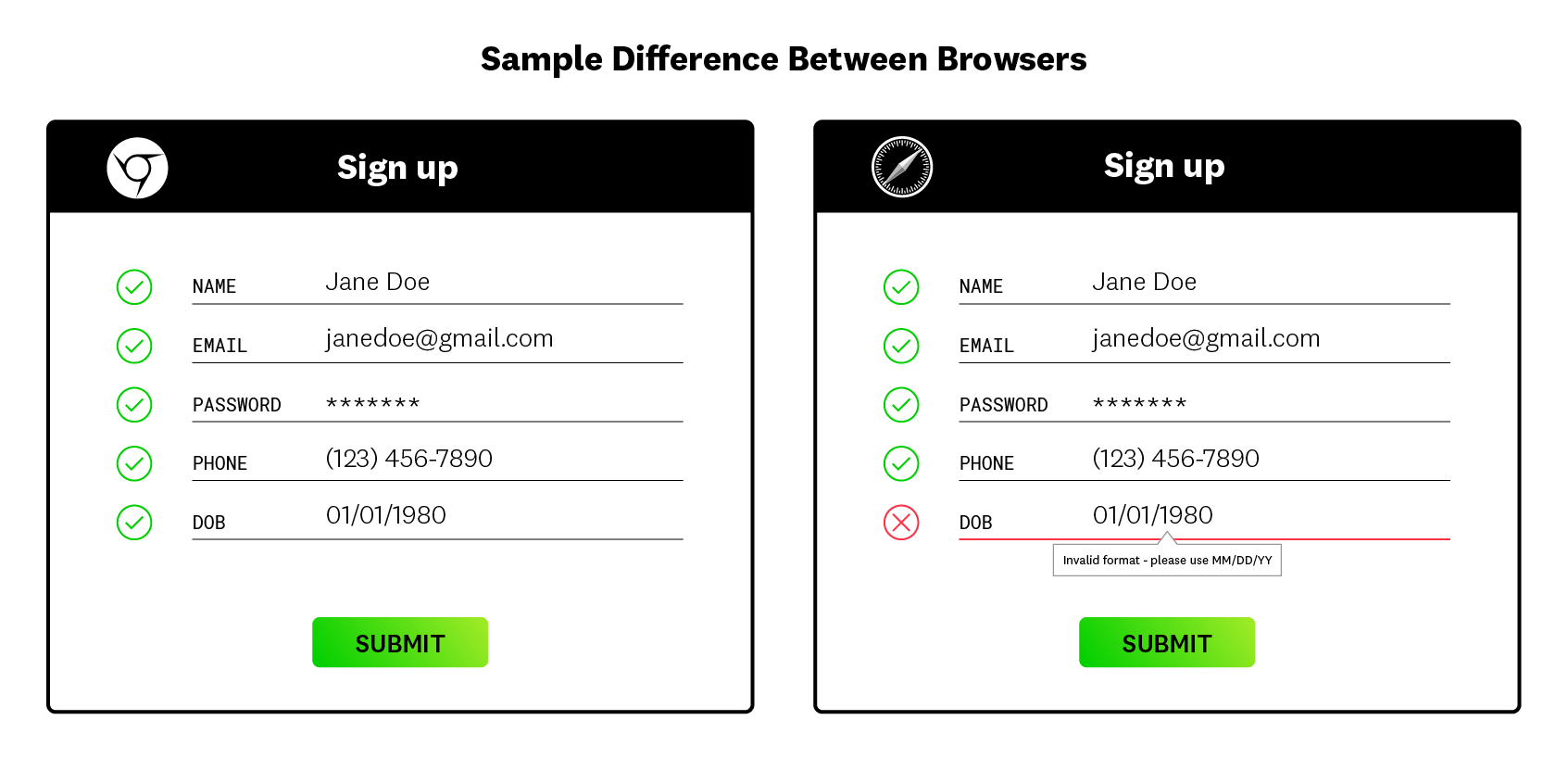

Cross browser testing refers to the practice of verifying that web applications work as expected across many different combinations of web browsers, operating systems, and devices. Though all web browsers support the common web standards (including HTML and CSS) developed by the World Wide Web Consortium (W3C), browsers can still render code in different ways. These disparities in appearance and function can arise from various factors, such as:

- Differences in the default settings on a browser or operating system (for example, the default font used by a browser).

- Differences in user-defined settings, such as screen resolution.

- Disparities in hardware functionality, which can lead to differences in screen resolution or color balancing.

- Differences in the engines used to process web instructions.

- Variations among clients in the version support for recent web standards, such as CSS3.

- The use of assistive technologies, such as screen readers.

In this article, we’ll explain how cross browser testing works to detect such differences and ensure that they don’t negatively impact application performance. We’ll also look at its benefits and challenges and describe how you can get started with cross browser testing.

How Cross Browser Testing Works

For simple websites and applications, teams can implement cross browser testing by manually noting differences in functionality on various web clients or manually running test scripts on different clients. Many organizations, however, require some type of automated cross browser testing to meet their needs for scale and replicability. But regardless of how organizations choose to perform cross browser testing, its purpose is the same: to expose errors in frontend functionality on specific web clients before real users encounter them.
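
Whether tests are manual or automated, it helps to write down which combinations of browser, operating system, and device you intend to cover. The sketch below enumerates such a matrix. The `TestTarget` shape, the `buildMatrix` helper, and the sample values are illustrative assumptions, not part of any particular testing tool.

```typescript
// One cell of the test matrix: a browser on an operating system on a device class.
interface TestTarget {
  browser: string;
  os: string;
  device: string;
}

// Builds every combination of the inputs, keeping only those the filter accepts.
function buildMatrix(
  browsers: string[],
  systems: string[],
  devices: string[],
  isValid: (target: TestTarget) => boolean = () => true,
): TestTarget[] {
  const targets: TestTarget[] = [];
  for (const browser of browsers) {
    for (const os of systems) {
      for (const device of devices) {
        const target: TestTarget = { browser, os, device };
        if (isValid(target)) targets.push(target);
      }
    }
  }
  return targets;
}

// Illustrative values only; choose the clients your own users actually run.
const matrix = buildMatrix(
  ["Chrome", "Firefox", "Safari"],
  ["Windows", "macOS"],
  ["desktop"],
  // Current Safari releases ship only on Apple platforms, so skip Safari on Windows.
  (t) => !(t.browser === "Safari" && t.os === "Windows"),
);
```

Here `matrix` holds five targets: the six raw combinations minus Safari on Windows. Each additional browser, operating system, or device multiplies the number of targets, which is why larger matrices quickly become impractical to cover by hand.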

Key Metrics Measured With Cross Browser Testing

Teams that perform cross browser testing can use metrics to evaluate different aspects of the user experience. Specific metrics may vary depending on how you implement cross browser testing and which platform you use. For each workflow or user journey that you test, you can compare how these metrics stack up across different browsers and devices:

- Duration

The time it takes to execute the entire user transaction or interaction on the web page, such as creating an account.

- Step Duration

The time it takes to execute a single action, such as clicking a button, within the larger workflow.

- Time to Interactive

The time it takes for a page to become fully interactive, meaning that its main content has rendered and the page responds reliably to user input.

- Largest Contentful Paint (LCP)

The time it takes for the largest image or text block visible in the viewport (that is, content above the fold) to render.

- Cumulative Layout Shift (CLS)

This metric measures the movement of content on a web page when certain elements like videos or images load later than the rest of the page. These unexpected movements can cause users to lose their place on a page or click the wrong button.

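The last two of these metrics (LCP and CLS), along with a third metric that captures responsiveness to user input, are especially useful for measuring a website’s performance. Together, they are called Core Web Vitals, a set of Google-defined metrics that measure user experience quality on a specific webpage. The responsiveness metric was originally First Input Delay (FID); Google replaced it with Interaction to Next Paint (INP) in March 2024. To track Core Web Vitals yourself, you first need to add code to your website that reads these values from the browser’s performance APIs. Note that support for these APIs varies by browser. They were first available in Chromium browsers (Chrome, Edge, Opera, and Samsung Internet), while Firefox and Safari have historically lacked support for some of them, and they cannot be measured on Internet Explorer. Check current browser support before comparing these metrics across clients.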
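
As a rough illustration of comparing these metrics across browsers, the sketch below rates LCP and CLS values against the thresholds Google publishes for Core Web Vitals (LCP: good up to 2.5 seconds, poor above 4 seconds; CLS: good up to 0.1, poor above 0.25). The `BrowserResult` shape, the function names, and the sample numbers are assumptions made for this example, and a missing value stands for a browser that could not report the metric.

```typescript
type Rating = "good" | "needs-improvement" | "poor";
type Metric = "lcpMs" | "cls";

// For each metric: [upper bound for "good", upper bound for "needs-improvement"].
const THRESHOLDS: Record<Metric, [number, number]> = {
  lcpMs: [2500, 4000],
  cls: [0.1, 0.25],
};

function rate(metric: Metric, value: number): Rating {
  const [good, needsImprovement] = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= needsImprovement) return "needs-improvement";
  return "poor";
}

// Hypothetical result of one test run; a metric is undefined when the
// browser under test does not expose the API needed to measure it.
interface BrowserResult {
  browser: string;
  lcpMs?: number;
  cls?: number;
}

function rateResult(result: BrowserResult) {
  return {
    browser: result.browser,
    lcp: result.lcpMs === undefined ? "unmeasured" : rate("lcpMs", result.lcpMs),
    cls: result.cls === undefined ? "unmeasured" : rate("cls", result.cls),
  };
}

// Made-up sample data for two clients.
const ratings = [
  { browser: "Browser A", lcpMs: 1800, cls: 0.05 },
  { browser: "Browser B", lcpMs: 3200 },
].map(rateResult);
```

With this sample data, Browser A rates "good" on both metrics, while Browser B rates "needs-improvement" on LCP and "unmeasured" on CLS. Keeping "unmeasured" separate from "poor" avoids treating a missing browser API as a performance problem.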

Benefits and Use Cases of Cross Browser Testing

The main benefit of cross browser testing is that it helps create a positive user experience on your website, no matter which browser or device visitors use to connect. Without cross browser testing, your website’s appearance and functionality may fail to meet quality expectations on some web clients and leave users on those platforms with a negative impression. As an additional benefit, creating a better user experience can result in higher conversion rates, which translates to more revenue for your business.

Not only does better frontend performance help drive customer conversion, but it also makes your website or application more likely to be found in the first place. This benefit of improved discoverability arises because user experience impacts how high a site ranks in search engine results. If your website doesn’t function well on mobile or on specific Chromium browsers, its Core Web Vitals score could decline. A low score can drag down the website’s ability to rank well in organic search engine results, leading to lost opportunities and ultimately lower revenue for the business.

One might expect identical behavior across web clients to be a key goal and benefit of cross browser testing, but this isn’t normally the case, because it’s not always possible to deliver the exact same experience on every type of web client. For example, some older browsers don’t support CSS3, which means that interactive features of a website, such as the ability to zoom in and out, may not work. Through cross browser testing, you can understand how your site performs on different clients and proactively notify customers of features that are only supported on certain browsers.

Once you run your cross-browser tests and understand differences across web clients, you can update your code. However, simply updating your code to resolve a problem with one client might introduce new problems for other clients. Instead, engineers usually need to fork the code to address client-specific problems so that different code paths run on specific browsers or devices.
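
One common way to create client-specific code paths is feature detection: the code asks whether the client supports a capability instead of checking the browser's name. The sketch below assumes a layout that prefers CSS grid and falls back to simpler techniques. The `chooseLayout` function and the strategy names are hypothetical. The `supports` parameter mirrors the two-argument form of the browser's `CSS.supports(property, value)` method, and is passed in so that the logic can also run outside a browser.

```typescript
// Same shape as the browser's two-argument CSS.supports(property, value).
type SupportsFn = (property: string, value: string) => boolean;

type LayoutStrategy = "grid" | "flexbox" | "float";

// Picks the most capable layout technique that the client reports supporting.
function chooseLayout(supports: SupportsFn): LayoutStrategy {
  if (supports("display", "grid")) return "grid";
  if (supports("display", "flex")) return "flexbox";
  return "float"; // Fallback for clients that support neither.
}

// In a browser you would call: chooseLayout((p, v) => CSS.supports(p, v));
// Here a stub stands in for an older client that supports flexbox but not grid.
const olderClient: SupportsFn = (property, value) =>
  property === "display" && value === "flex";

const strategy = chooseLayout(olderClient);
```

For the stubbed older client, `strategy` is "flexbox". Because each branch is selected by capability, a fix for one client is less likely to change behavior for the others.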

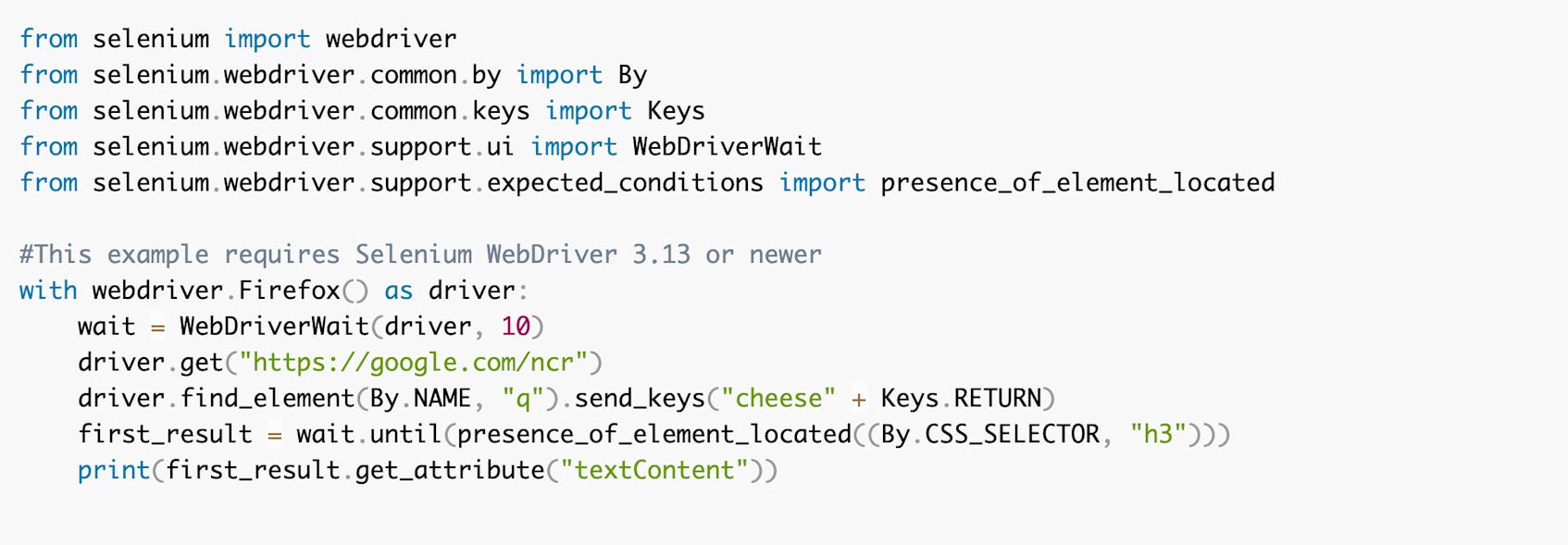

Cross Browser Testing With Script Automation

One common way to automate cross browser testing is through scripting. With this method, developers write scripts that periodically load their website in multiple browsers. These scripts can simulate user visits to a website or application and perform checks on various transactions and user pathways. For instance, you can determine whether signing up for an account triggers a welcome email or whether clicking a button successfully prompts an image to appear. Developers may also add assertions that check whether specific text or other elements appear on a page, or subtests to verify multi-step workflows. Scripts can be executed from remote servers across the globe to determine whether a website or app works optimally for users in different locations.
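
The general shape of such a script can be sketched as follows. The `BrowserDriver` interface is a hypothetical stand-in for a real browser automation library, and the URL, selectors, and expected text are placeholders. The runner executes the same steps in every browser and records the total duration, the duration of each step, and the first failed assertion.

```typescript
// Hypothetical minimal driver; a real script would wrap an automation library here.
interface BrowserDriver {
  name: string;
  open(url: string): Promise<void>;
  click(selector: string): Promise<void>;
  textOf(selector: string): Promise<string | null>;
}

interface Step {
  label: string;
  run(driver: BrowserDriver): Promise<void>;
}

interface StepResult { label: string; durationMs: number; error?: string }
interface RunResult { browser: string; passed: boolean; durationMs: number; steps: StepResult[] }

// Assertion: fails the current step if the element's text is not the expected text.
async function expectText(driver: BrowserDriver, selector: string, expected: string): Promise<void> {
  const actual = await driver.textOf(selector);
  if (actual !== expected) {
    throw new Error(`expected "${expected}" in ${selector}, got "${actual}"`);
  }
}

// Runs one user journey in one browser, timing each step.
async function runJourney(driver: BrowserDriver, steps: Step[]): Promise<RunResult> {
  const results: StepResult[] = [];
  const start = Date.now();
  for (const step of steps) {
    const stepStart = Date.now();
    try {
      await step.run(driver);
      results.push({ label: step.label, durationMs: Date.now() - stepStart });
    } catch (err) {
      results.push({ label: step.label, durationMs: Date.now() - stepStart, error: String(err) });
      break; // Later steps depend on earlier ones, so stop at the first failure.
    }
  }
  return {
    browser: driver.name,
    passed: results.every((r) => r.error === undefined),
    durationMs: Date.now() - start,
    steps: results,
  };
}

// Runs the same journey in every browser so the results can be compared.
function runOnAll(drivers: BrowserDriver[], steps: Step[]): Promise<RunResult[]> {
  return Promise.all(drivers.map((driver) => runJourney(driver, steps)));
}

// Placeholder journey: sign up, then check for a welcome message.
const signupJourney: Step[] = [
  { label: "open signup page", run: (d) => d.open("https://example.com/signup") },
  { label: "submit form", run: (d) => d.click("#submit") },
  { label: "see welcome message", run: (d) => expectText(d, "#welcome", "Welcome!") },
];
```

Calling `runOnAll(drivers, signupJourney)` with one driver per browser returns one `RunResult` per browser, which can be compared side by side. Stopping at the first failed step is a design choice suited to journeys where each step depends on the previous one.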

Challenges of Cross Browser Testing With Scripts

Automated test scripts offer a much more scalable and robust alternative to manual testing, but they pose challenges of their own:

- Need to learn a scripting language

Writing test scripts to automate your cross browser tests can save time, but it requires knowledge of a scripting language. This requirement means nontechnical team members cannot write the tests on their own.

- Unsuitable for testing complex user journeys

Test scripts work well when testing short steps like filling out an account-creation form. If you want to test longer user journeys (such as first creating an account, then receiving an email confirmation with a discount code, then applying that code to items in a cart, and finally completing your purchase), you’ll likely require several scripts. In this case, the overhead required to write and support these scripts takes engineers’ time away from performing their core function of building features.

- Traditional tests require infrastructure

Traditional forms of cross browser testing require organizations to invest in procuring and maintaining servers. If you are testing containerized applications, you will also need to spin up containers to run your tests. And the more test data you’d like to store, the higher your storage costs will be.

- Updating scripts consumes engineering time

Test scripts often break because of small UI changes, such as moving a button or changing its color. However, with modern application development workflows, team members might push these types of minor updates several times per day. As a result, engineers need to spend time updating scripts and fixing broken tests. This time could be better spent delivering features to market.

- Ambiguity about why tests fail

Traditional test automation tools often don’t provide end-user screenshots or other clues about the causes of test failures. For example, is an element on a website missing because of a JavaScript bug, or because of a server issue? To answer this question, a scripted test might require you to follow up by reviewing code line by line.

Above all, cross-browser testing through scripts tends to add significant time and complexity to web development. In particular, creating and updating test scripts becomes very difficult to do on a large scale.
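
To illustrate why small UI changes break scripts, and one way script authors reduce that brittleness, the sketch below locates an element by trying several strategies in order instead of relying on a single styling-based selector. The `PageElement` model, the `locate` helper, and the sample page are hypothetical simplifications, not the behavior of any specific tool.

```typescript
// Simplified, hypothetical stand-in for a rendered page element.
interface PageElement {
  testId?: string;
  role: string;
  text: string;
  cssClass: string;
}

// A locator is a named predicate; locators are tried in the order given.
interface Locator {
  description: string;
  matches: (el: PageElement) => boolean;
}

function locate(
  page: PageElement[],
  locators: Locator[],
): { element: PageElement; via: string } | undefined {
  for (const locator of locators) {
    const found = page.filter(locator.matches);
    // Accept only an unambiguous match so the test never acts on the wrong element.
    if (found.length === 1) return { element: found[0], via: locator.description };
  }
  return undefined;
}

const checkoutButton: Locator[] = [
  { description: "test id", matches: (el) => el.testId === "checkout" },
  { description: "role and text", matches: (el) => el.role === "button" && el.text === "Check out" },
  { description: "CSS class", matches: (el) => el.cssClass === "btn-green" },
];

// After a redesign, the button's class changed from "btn-green" to "btn-blue".
const redesignedPage: PageElement[] = [
  { role: "link", text: "Home", cssClass: "nav" },
  { role: "button", text: "Check out", cssClass: "btn-blue" },
];

const match = locate(redesignedPage, checkoutButton);
const brittleMatch = locate(redesignedPage, [checkoutButton[2]]);
```

With this sample page, `match` finds the button through the "role and text" strategy, while `brittleMatch`, which relies only on the old CSS class, is `undefined`. Fallbacks reduce breakage but do not remove the maintenance cost, since someone still has to choose and update the strategies.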

Modern Cross Browser Testing

To improve ease of use, simplify workflows, and allow nontechnical team members to create and run cross browser tests, some organizations use modern browser testing platforms. These tools replace scripts with user-friendly web recorders that mimic the user’s experience of a website or web application. Without having to write any scripts or code, you can record a variety of user journeys. At the end of a simulated visit, the tool will sequentially display each step of the user interaction, capture metrics, and show you where any errors occurred.

In many organizations today, developers also collect real usage data to monitor performance across browsers and devices. Once code hits production, teams can review bugs reported by real users to surface discrepancies in performance across different web clients. The practice of monitoring real usage data is called Real User Monitoring (RUM), and it’s often used in conjunction with synthetic browser tests.
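
As a simple illustration of how real usage data can surface discrepancies between web clients, the sketch below groups session records by browser and computes the share of sessions that hit an error. The `SessionRecord` shape and the sample data are assumptions for this example and do not reflect the data model of any particular RUM product.

```typescript
// Hypothetical shape of one real-user session record.
interface SessionRecord {
  browser: string;
  hadError: boolean;
}

// Returns, for each browser, the fraction of its sessions that had an error.
function errorRateByBrowser(sessions: SessionRecord[]): Map<string, number> {
  const totals = new Map<string, { sessions: number; errors: number }>();
  for (const session of sessions) {
    const total = totals.get(session.browser) ?? { sessions: 0, errors: 0 };
    total.sessions += 1;
    if (session.hadError) total.errors += 1;
    totals.set(session.browser, total);
  }
  const rates = new Map<string, number>();
  totals.forEach((total, browser) => {
    rates.set(browser, total.errors / total.sessions);
  });
  return rates;
}

// Made-up sample: four sessions on one browser, two on another.
const rates = errorRateByBrowser([
  { browser: "Browser A", hadError: false },
  { browser: "Browser A", hadError: false },
  { browser: "Browser A", hadError: true },
  { browser: "Browser A", hadError: false },
  { browser: "Browser B", hadError: true },
  { browser: "Browser B", hadError: false },
]);
```

In this sample, Browser A has an error rate of 0.25 and Browser B has an error rate of 0.5. A gap like this is a signal to add or tighten synthetic tests for the browser with the higher rate.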

Getting Started With Cross Browser Testing

Modern organizations need a form of cross browser testing that can fit seamlessly into their development pipelines and operate well at scale. Manual testing and scripts often come up short when developers push updates to code multiple times per day.

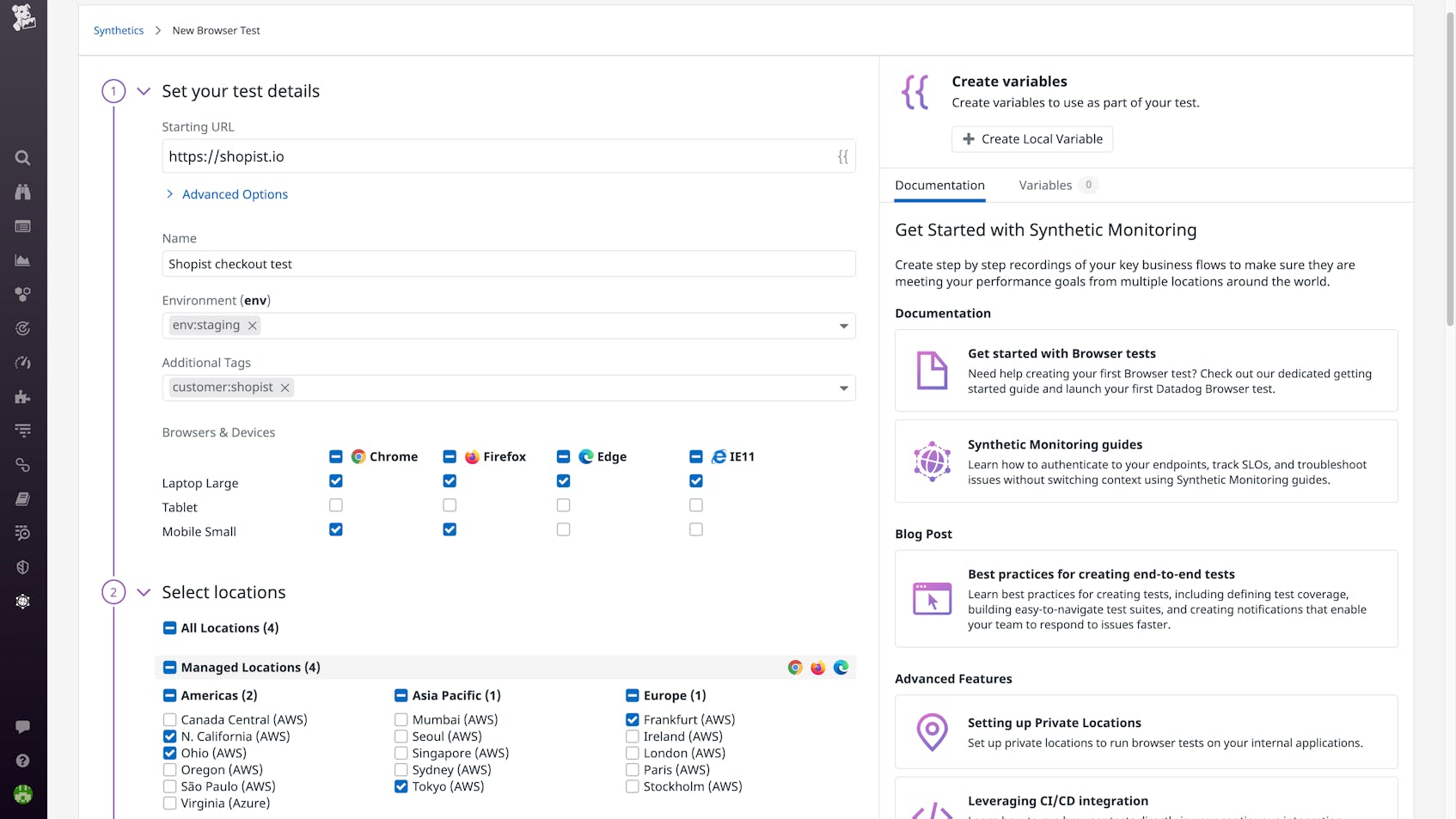

Datadog Synthetic Monitoring is an automated, fully hosted cross-browser testing platform that makes it easy to test all of your applications. Anyone on your team can create Datadog cross browser tests with the ease of a code-free web recorder. Synthetic Monitoring can be used to collect performance metrics of key user journeys, such as account signup and checkout, across multiple browsers and devices. Using assertions and subtests, you can even test complex, multi-step workflows with just a few clicks.

Datadog browser tests will intelligently self-adapt to minor UI changes, so teams can focus on building features instead of managing brittle tests. Without configuring any infrastructure, tests can be run from managed locations across the globe, private locations, or continuous integration/continuous delivery (CI/CD) pipelines.

Once a test is complete, Datadog displays screenshots from the user’s perspective and includes links to related traces and logs, so it’s easy to determine the cause of a test failure and address it. With our accompanying synthetic API tests and Real User Monitoring (RUM), Datadog provides end-to-end visibility into user experience across your web properties.