Updated December 2018

Containerization is now officially mainstream. A quarter of Datadog's total customer base has adopted Docker and other container technologies, and half of the companies with more than 1,000 hosts have done so.

As containers take a more prominent place in the infrastructure landscape, we see our customers adding automation and orchestration to help manage their fleets of ephemeral containers. Across all infrastructure environments, our data shows increased usage of container orchestration technologies such as Kubernetes and Amazon Elastic Container Service (ECS). The companies running these technologies tend to have larger, far more dynamic deployments than companies running unorchestrated containers.

Now that all three major cloud providers offer a hosted Kubernetes service, we see significant usage of Kubernetes in AWS, Azure, and Google Cloud Platform. But, as you'll see below, Amazon ECS and the newly launched AWS Fargate service remain the most popular choice in AWS. Read on for more insights and trends gathered from the real-world usage data of tens of thousands of organizations, running close to half a billion distinct containers.

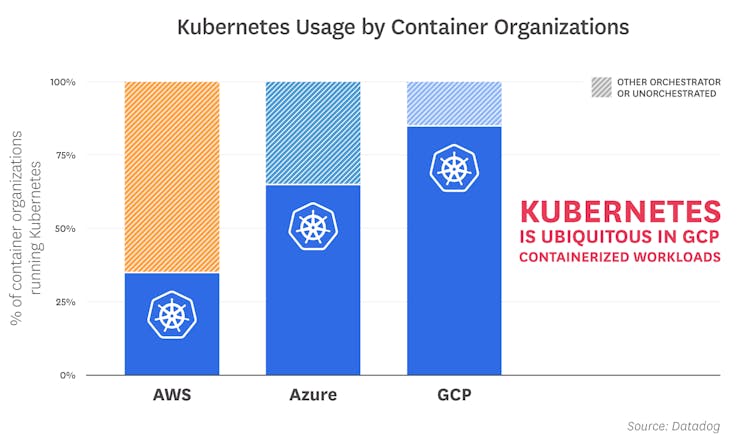

Kubernetes orchestrates most GCP and Azure container environments

Over the past few years, we've seen Kubernetes steadily gaining in usage on every infrastructure platform, but nowhere has it been more popular than on Google Cloud Platform. Not only was Kubernetes originally developed at Google, but GCP has long offered a hosted Kubernetes service, now known as Google Kubernetes Engine (GKE). Of the organizations running containers in GCP, more than 85 percent orchestrate workloads with Kubernetes. In the Azure cloud, which has offered a managed Kubernetes service for about two years, roughly 65 percent of organizations using containers also run Kubernetes.

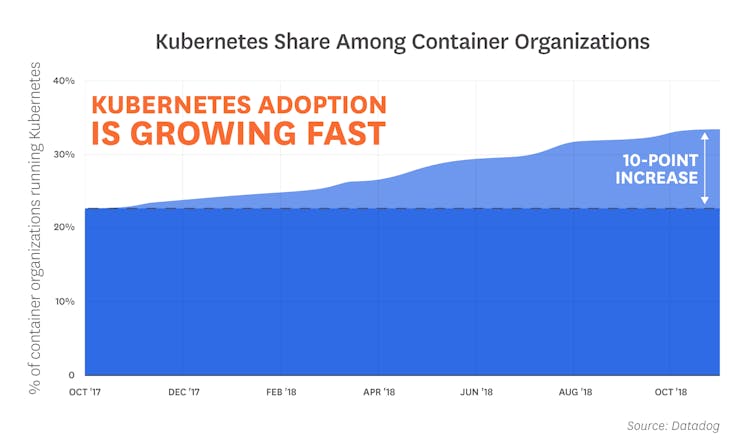

Kubernetes continues its steady rise in container environments globally

Kubernetes is everywhere. Not only do our customers run their own Kubernetes clusters in diverse infrastructure environments, but all three major cloud providers now offer a managed Kubernetes service as well. One third of our customers using containers now use Kubernetes, whether in self-managed clusters, or through a cloud service like Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), or the new Amazon Elastic Container Service for Kubernetes (EKS). The graph below tracks Kubernetes usage across Datadog's entire customer base, whether in on-prem, public cloud, or private cloud environments.

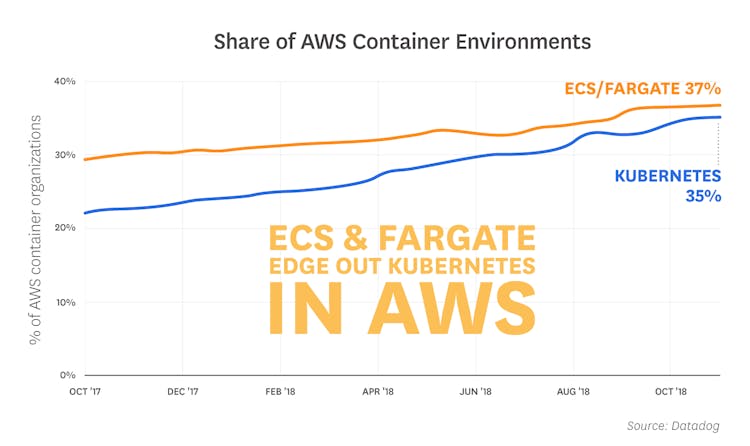

ECS and Fargate hold a slight edge over Kubernetes in AWS environments

ECS has long been the first choice for container orchestration in the AWS cloud, and over the past year it has continued to see increased usage among companies running containers in AWS. With the recent launch of EKS, however, Amazon users now have more choice when it comes to managed services for their container infrastructure. For the first time, our data shows that Kubernetes is nearly as popular in the AWS cloud as ECS (including usage of AWS Fargate). The availability of EKS appears to be contributing to that trend—in the months after EKS launched, we saw a significant uptick in the number of AWS container organizations running Kubernetes.

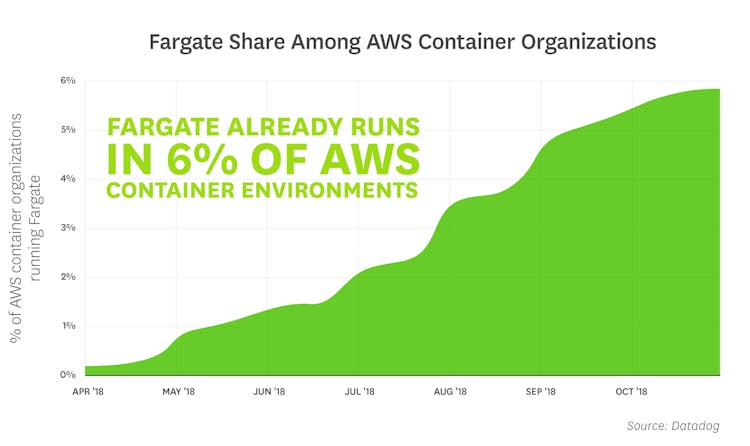

Fargate already runs in 6% of AWS container environments

AWS Fargate allows customers to run containerized applications without having to manage a cluster of EC2 instances. In other words, Fargate applies the serverless model to container orchestration. In the past several months, as Fargate expanded to more AWS regions and added features, the service rapidly gained in usage among Datadog customers, and has already been adopted by 6 percent of AWS organizations using containers.

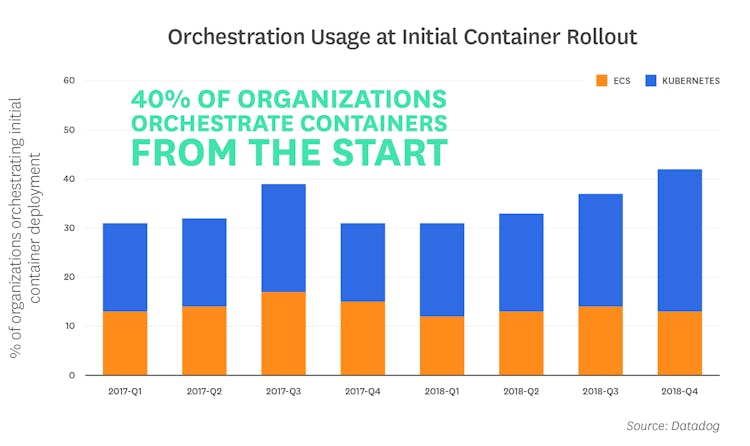

Increasingly, organizations orchestrate their containers from the start

More and more, orchestration is being considered an essential feature of a container deployment. Half of all container organizations now run one or more orchestration technologies, and a significant number of these companies included orchestration in their initial container deployment. Our data shows that more than 40 percent of organizations run Kubernetes or ECS when they first start using containers, with smaller numbers of organizations deploying containers with Fargate, Nomad, or Mesos from the start.

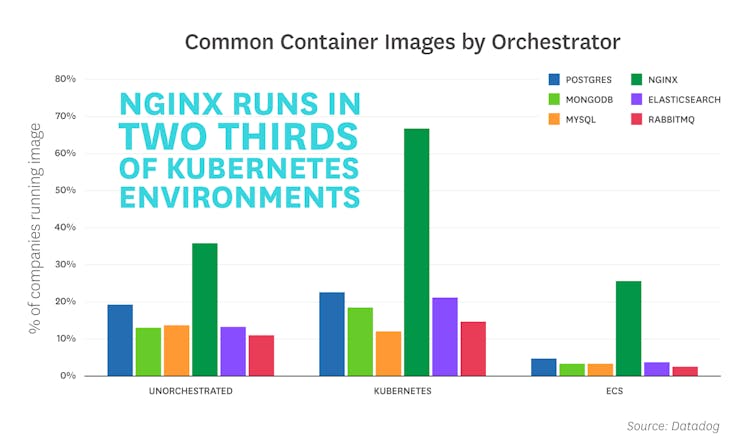

Kubernetes users run far more NGINX and other off-the-shelf images

In Kubernetes clusters, we see widespread deployment of container images for common infrastructure technologies like NGINX, Postgres, and Elasticsearch, with NGINX appearing in two thirds of Kubernetes environments. These same images tend to appear in unorchestrated environments as well, albeit in smaller numbers. But in ECS clusters, our research shows very little adoption of common, publicly available container images: only NGINX appears in more than 10 percent of ECS environments. We hypothesize that many AWS users rely on services such as Amazon Relational Database Service and Simple Queue Service instead of running containerized infrastructure components such as Postgres or RabbitMQ. Therefore, containers running in ECS may be more narrowly focused on business logic rather than infrastructure.

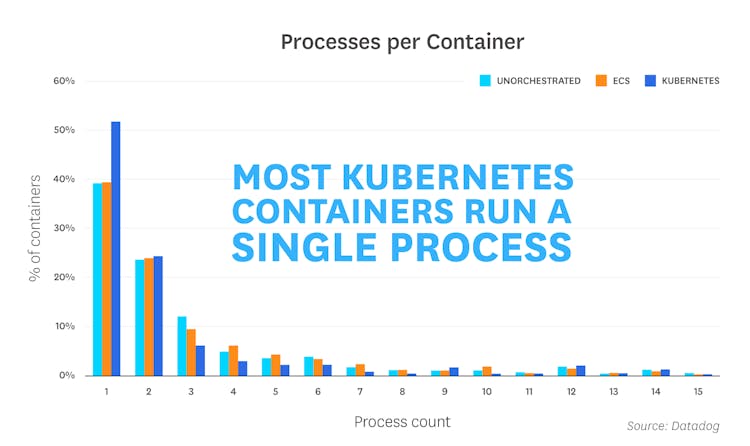

Most Kubernetes containers run a single process

The official Docker best practices guide notes that running one process per container is a "good rule of thumb" to ensure modularity, but is not always practical or attainable. For instance, containers can be spun up with an additional init process, and some technologies, such as the Apache server, spawn multiple processes by design.

When we examined the number of processes running in each container, we observed a clear difference between Kubernetes workloads and containers running in ECS or without orchestration. Whereas most ECS or unorchestrated containers run multiple processes, a slight majority of Kubernetes containers run only one process.

This fact, along with the fact that we see more usage of off-the-shelf container images in Kubernetes clusters, indicates that Kubernetes users tend to build their infrastructure from standard, highly modular components rather than from more customized container images. We see a similar, though subtler, trend in the number of containers in each deployed unit of work—our data shows that Kubernetes pods tend to run slightly fewer containers than ECS or Fargate tasks.

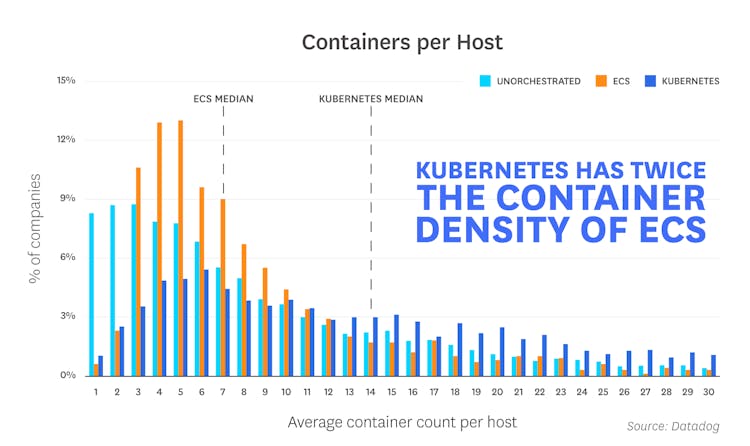

Kubernetes hosts run twice as many containers as ECS hosts

At the median Kubernetes organization, each host runs about 14 containers over a one-hour sampling window, versus just seven containers in the median ECS organization. This difference in container density may indicate a tighter "bin packing" of containers on hosts in Kubernetes. It is also likely related to the difference in process density within the containers themselves, as outlined above. Taken together, these facts suggest that Kubernetes nodes tend to run large numbers of single-process containers, whereas ECS nodes run fewer containers, some of which run multiple processes.

Methodology

Population

For this report, we compiled usage data from thousands of companies and nearly 500 million containers, so we are confident that the trends we have identified are robust. But while Datadog's customers span most industries, and run the gamut from startups to Fortune 100s, they do have some things in common. First, they take their software infrastructure seriously. And they skew toward public and private cloud usage more than the general population. All the results in this article are biased by the fact that the data comes from our customers, a large but imperfect sample of the entire global market. Since the first edition of this report, we have expanded the scope of the analysis to incorporate a larger swath of customer infrastructure, so some of the percentages have changed, but the overall trends have not.

Averaging

When we present average numbers within our customer base (for example, the average container density), we are not actually referring to the mean value across the population. Rather, we compute the average for each customer individually, and then report the median customer's number. We found that when we took a true average, results were unduly skewed by unusual practices employed by relatively few companies. For example, some customers run hundreds or even thousands of containers simultaneously on a single host.
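The two-step calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the customer names, the sample counts, and the function name `median_of_customer_averages` are hypothetical and do not come from the report's actual data or tooling.

```python
from statistics import mean, median

def median_of_customer_averages(samples):
    """Average each customer's values, then take the median across customers.

    `samples` maps a (hypothetical) customer id to a list of per-host
    container counts observed for that customer.
    """
    per_customer = {cust: mean(vals) for cust, vals in samples.items() if vals}
    return median(per_customer.values())

# Made-up numbers: two typical customers and one extreme outlier.
samples = {
    "customer_a": [12, 14, 16],
    "customer_b": [6, 8],
    "customer_c": [900, 1100],  # runs ~1,000 containers per host
}

# A "true average" pools every observation and is dominated by the outlier.
pooled_mean = mean(x for vals in samples.values() for x in vals)

# The median of per-customer averages is not pulled upward by customer_c.
robust = median_of_customer_averages(samples)

print(f"pooled mean: {pooled_mean:.1f}, median of customer averages: {robust}")
```

With these made-up inputs, the pooled mean lands in the hundreds even though two of the three customers run fewer than 20 containers per host, which is the kind of skew the median-based approach is meant to avoid.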

Counting

In this investigation, we exclude containers that are unrelated to the actual workload, such as DNS containers; the Datadog Agent; the ECS Agent; and Kubernetes pause containers, system containers, and cluster-management components (addon-resizer, kubernetes-dashboard, etc.).
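As a rough sketch of what such an exclusion step might look like, the Python snippet below filters a list of container image names before counting. The name fragments, the example image names, and the helper functions are assumptions chosen for illustration; the report does not describe how the exclusion was actually implemented.

```python
# Hypothetical substrings used to recognize non-workload containers.
# The report names the categories; these exact patterns are assumptions.
EXCLUDED_NAME_FRAGMENTS = (
    "pause",                 # Kubernetes pause containers
    "datadog/agent",         # the Datadog Agent
    "ecs-agent",             # the ECS Agent
    "kube-dns",              # DNS containers
    "addon-resizer",         # cluster-management components
    "kubernetes-dashboard",
)

def is_workload_container(image_name):
    """Return True if the image name matches none of the excluded fragments."""
    name = image_name.lower()
    return not any(fragment in name for fragment in EXCLUDED_NAME_FRAGMENTS)

def count_workload_containers(image_names):
    """Count only the containers that are part of the actual workload."""
    return sum(1 for name in image_names if is_workload_container(name))

# Illustrative image names for a single host.
images = [
    "nginx:1.15",
    "postgres:10",
    "k8s.gcr.io/pause:3.1",
    "datadog/agent:latest",
    "amazon/amazon-ecs-agent:latest",
]

print(count_workload_containers(images))
```

Substring matching is the simplest option and can misclassify an unrelated image whose name happens to contain one of the fragments; a real pipeline would more likely rely on labels or other orchestrator metadata.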