Santiago Gómez Sáez

This guest blog post is by Santiago Gómez Sáez, who is a principal cloud architect at dx.one - Volkswagen Group and a Datadog Ambassador. At dx.one - Volkswagen, Santiago develops architectures that use standardization and automation to optimize the performance of engineering teams.

The adoption of Datadog in large enterprises typically goes beyond integrating metrics, traces, and logs to unify observability. These enterprises must implement and use Datadog in a compliant and standard way across divisions, teams, and projects to enhance data security, comply with regulations, manage costs, and increase operational efficiency.

In this blog post, I will introduce best practices for management and governance of Datadog at scale in highly distributed cloud environments. These recommendations come from my experience of centrally providing and using Datadog in multiple divisions in the Volkswagen Group. These divisions run customer-facing workloads across more than 2,000 AWS accounts and include more than 500 Datadog users worldwide.

Use Datadog organizations to apply the appropriate structure

Datadog organizations are logical groups of users, configurations, and telemetry data. Organizations can be arranged in a parent-child relationship, in which each child organization is associated with one parent organization. A single-organization model is typically the simplest way to set up Datadog. Small and medium-sized Datadog customers usually take this approach to provide unified, real-time observability across the organization.

Large enterprises that have multiple divisions, such as Volkswagen, need to fulfill legal requirements and ensure isolation among divisions. These obligations demand a more sophisticated multi-organization setup. With the multi-organization feature, enterprises can manage multiple child organizations from one parent organization. A multi-organization setup provides the following main benefits:

- Billing data and contract data are consolidated in the parent organization.

- Data and configurations are logically isolated among child organizations.

- Configurations can vary among child organizations or can be centrally governed.

- Users can belong to multiple child organizations and can switch between the child organizations.

The multi-organization feature offers the flexibility to have different structural organization models in Datadog. In order from most consolidated to most distributed, the following structural models are possible:

- Single-organization model: One organization receives all the observability data.

- Stage-dependent multi-organization model: One child organization receives observability data for all pre-production stages, such as testing, and one child organization receives observability data for all production stages.

- Multi-division multi-organization model: One child organization exists for each legal entity in an enterprise group. You can also have a separate organization for each department or project in the enterprise group.

- Data sensitivity multi-organization model: Data can be classified according to its sensitivity (for example, internal, confidential, payment). This setup consists of one child organization for each sensitivity level of the data processed by the monitored workload. For example, you can have one child organization to monitor payment processes. You can have another child organization for workloads that process personally identifiable information (PII) or vehicle data.

Given the possible models, which one should you choose? The choice depends on your organizational requirements. From my experience, I recommend the following actions:

- Analyze your situation with Datadog Technical Account Managers or Customer Success Managers to determine the model that is the best fit.

- Keep the number of child organizations as low as possible. Having one organization for all workloads maximizes your end-to-end observability while reducing configuration effort.

- If legal requirements limit the observability data that you can share among divisions, consider creating Restricted Datasets to limit what users can see. If you need total isolation, create one child organization for each legal domain or division.

- If you choose a multi-organization configuration, use the parent organization only for central management and billing, not for integrating observability. This practice provides a clean separation of tasks and helps prevent human errors (for example, deletion of users) that can propagate to multiple child organizations.

Configure single sign-on

After the organization structure is defined, it's time to integrate Datadog with your environment and automate the configuration and governance of Datadog. You start the process by integrating Datadog with your identity provider’s single sign-on (SSO).

Datadog supports SAML 2.0 with custom domains and just-in-time provisioning or SCIM provisioning. You can configure provisioning by using the Datadog Web App, Datadog API, or Datadog Terraform provider.

To standardize access, you should deactivate password authentication and Google authentication so that SSO is the only way to log in.

Automate provisioning of users and child organizations

The next step occurs when a division, team, or project requests a Datadog environment in a self-service landing zone. An automated mechanism to provision new users or child organizations and configure them in minutes will save you time and trouble. With automation, you can increase the adoption of Datadog and prevent human error during the multi-organization setup process while implementing governance at scale.

It's ideal to automate the following repetitive steps:

- Define and keep ownership details of child organizations (for example, the name and email address of the owners of a child organization).

- Create a child organization or users (supported by the Datadog Organizations API and the datadog_child_organization resource in Terraform).

- Configure SAML 2.0 with a custom domain and just-in-time domains (supported by the Datadog Organizations API and the datadog_child_organization resource in Terraform).

- Create standard roles to achieve role-based access control for metrics and logs (supported by the Datadog Roles API and the datadog_role resource in Terraform).

- Create tag-based log restriction queries and assign them to the standard roles (supported by the Datadog Logs Restriction Queries API and Data Access Control). Log restriction queries limit which logs a user can read.

- Create standard log indexes (supported by the Datadog Logs Indexes API and the datadog_logs_index resource in Terraform). With log indexes, you can apply filters to categorize and manage logs to meet compliance and cost requirements.

- Create alerts for usage of standard tags (supported by the Datadog Monitors API and the datadog_monitor resource in Terraform). These alerts help ensure that the standard tags that the log restriction queries depend on are present.

- Create credentials that provide users with access to the Datadog API.
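As a rough illustration of the steps in this list, the following Python sketch builds the request bodies for creating a child organization, a standard role, and a tag-based log restriction query. It only builds the requests and doesn't send them. The endpoint paths and body shapes reflect my reading of the public Datadog API and should be verified against the current API reference. The `OrgRequest` type, the `-log-reader` role naming, and the 32-character name check are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class OrgRequest:
    """Hypothetical self-service request for a new Datadog environment."""
    name: str         # name of the child organization
    owner_email: str  # ownership detail kept alongside the organization
    team_tag: str     # standard tag used to scope log access, e.g. "team:payments"


def build_onboarding_requests(req: OrgRequest) -> List[Tuple[str, str, dict]]:
    """Return (method, path, body) tuples in the order they would be sent."""
    # Assumption: organization names are limited to 32 characters.
    if len(req.name) > 32:
        raise ValueError("organization name is longer than 32 characters")

    role_name = f"{req.name}-log-reader"  # hypothetical naming convention
    return [
        # Create the child organization (sent with the parent org's keys).
        ("POST", "/api/v1/org", {"name": req.name}),
        # Create a standard role inside the new child organization.
        ("POST", "/api/v2/roles",
         {"data": {"type": "roles", "attributes": {"name": role_name}}}),
        # Create a tag-based restriction query to attach to that role later.
        ("POST", "/api/v2/logs/config/restriction_queries",
         {"data": {"type": "logs_restriction_queries",
                   "attributes": {"restriction_query": req.team_tag}}}),
    ]
```

Keeping the request construction separate from the HTTP calls makes the onboarding logic easy to review and to test without touching a real organization.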

The preferred implementation method for the previous tasks is to use Terraform to orchestrate and maintain a state for each organization. For example, you can orchestrate the creation of organizations, credentials, and monitors in a standardized way to automate your onboarding processes. By using Terraform, you can implement a step-by-step process that you can roll back if failures occur.
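To make the idea of a step-by-step process with rollback concrete, here is a minimal plain-Python sketch. It isn't Terraform and doesn't call Datadog. It only shows the control flow that an onboarding wrapper might follow: apply each step in order and, if one fails, undo the completed steps in reverse order. The `run_with_rollback` function and the step names are hypothetical.

```python
from typing import Callable, List, Tuple

# A step is (name, apply, undo). Both callables take no arguments.
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_with_rollback(steps: List[Step]) -> List[str]:
    """Apply steps in order; on the first failure, undo completed steps in reverse."""
    completed: List[Step] = []
    log: List[str] = []
    for step in steps:
        name, apply, _undo = step
        try:
            apply()
        except Exception:
            log.append(f"failed:{name}")
            # Roll back everything that succeeded, newest first.
            for done_name, _apply, undo in reversed(completed):
                undo()
                log.append(f"undone:{done_name}")
            return log
        completed.append(step)
        log.append(f"applied:{name}")
    return log
```

In practice, each `apply` and `undo` pair could wrap one provisioning stage, such as creating an organization, its credentials, or its monitors.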

Standardize integrations with your cloud environment

When Datadog users and organizations are provisioned and ready, development teams will continuously integrate cloud environments (for example, AWS accounts) with Datadog. These teams need a standard architecture and automated process for this integration.

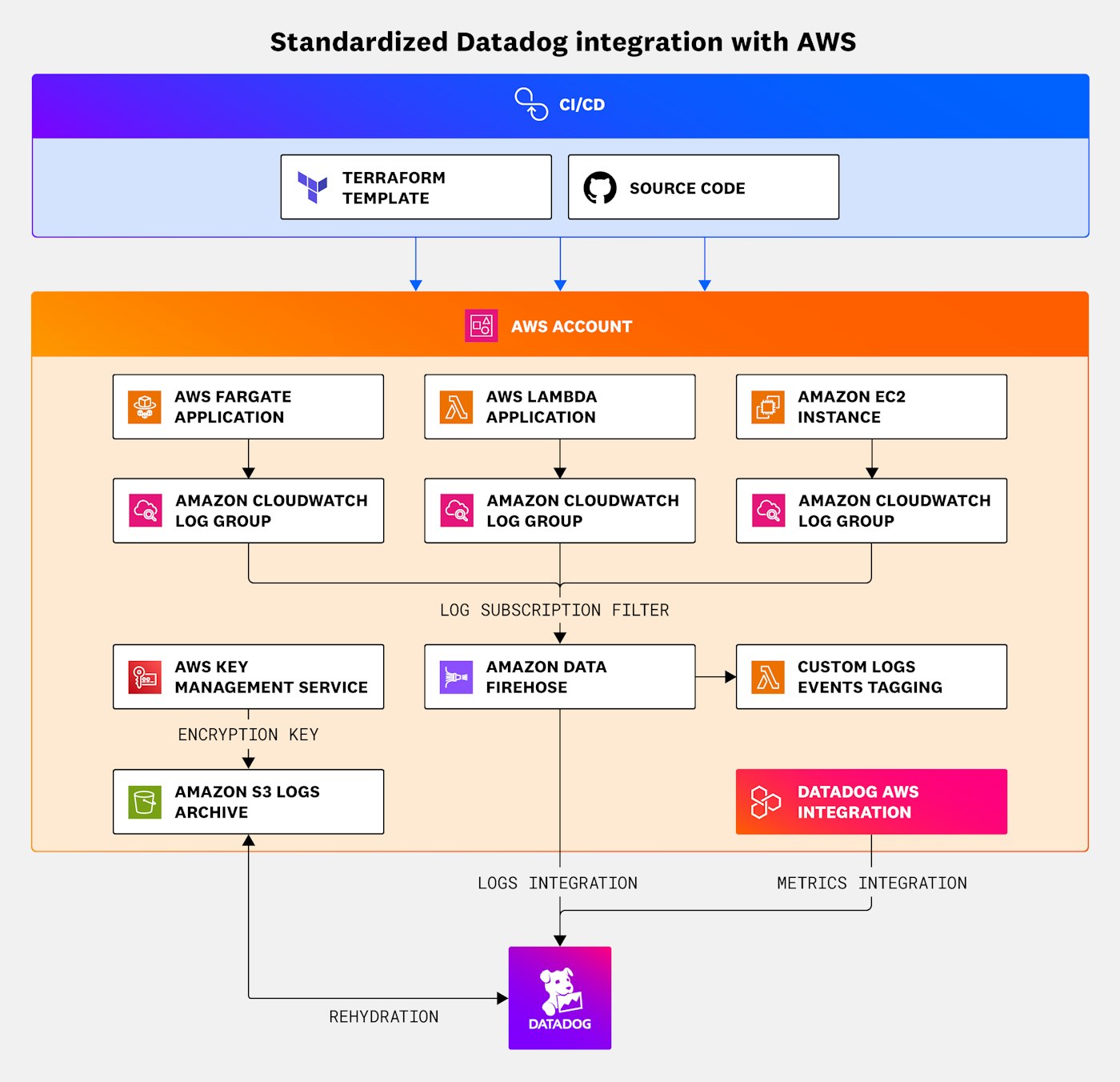

The reference architecture in the preceding diagram provides an automated process that uses Terraform and GitHub Actions to configure the native Datadog integration and provision a standard logging funnel toward Datadog. The architecture uses the Datadog AWS integration for metrics and includes a stack in AWS for storage of logs in Amazon S3. The architecture also includes a logging funnel that uses Amazon Data Firehose and AWS Lambda to transmit tagged events to Datadog.
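The tagging step in such a funnel can be sketched as a Firehose data-transformation Lambda function. This post doesn't specify what the Lambda function in the architecture does, so treat the following as one possible shape, not the actual implementation. It assumes that each record is a JSON object, that tags travel in a `ddtags` attribute, and that `STANDARD_TAGS` (a hypothetical constant) holds the tags for the account.

```python
import base64
import json

# Hypothetical standard tags for this AWS account.
STANDARD_TAGS = "env:prod,team:platform"


def lambda_handler(event, context):
    """Firehose transformation: append standard tags to every JSON log event."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            if not isinstance(payload, dict):
                raise ValueError("expected a JSON object")
        except ValueError:
            # Return the record unchanged and flag it so Firehose can
            # route it to its error output instead of dropping it silently.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
            continue

        # Keep any tags already on the event and append the standard ones.
        existing = payload.get("ddtags", "")
        payload["ddtags"] = ",".join(t for t in (existing, STANDARD_TAGS) if t)

        encoded = base64.b64encode(json.dumps(payload).encode("utf-8"))
        output.append({
            "recordId": record["recordId"],
            "result": "Ok",
            "data": encoded.decode("ascii"),
        })
    return {"records": output}
```

Applying tags centrally in the funnel means that individual teams don't have to remember the standard tags, which in turn keeps tag-based access rules reliable.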

Implement continuous governance

As usage of Datadog scales within your teams, continuous governance becomes more important. To promote consistent and compliant usage of Datadog across your development teams, implement the following best practices:

- Enforce tag hygiene: Configure monitors that provide alerts when metrics or logs don’t use standard tags that are defined in your organization.

- Detect cost spikes: Configure service-specific monitors or Cloud Cost monitors to detect cost increases (for example, to detect when a team unnecessarily integrates hosts with Datadog).

- Detect sensitive data in logs: Activate Datadog Sensitive Data Scanner and configure standard alerts. Define a compliance process to remediate noncompliant log events.

- Monitor core Datadog integrations: Configure monitors for critical Datadog integrations (for example, the Datadog AWS integration or the log archives in Amazon S3).
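As an example of the first practice, the following sketch builds the definition of a log monitor that alerts when logs arrive without a standard tag. It only constructs the monitor body and doesn't call the API. The query syntax and the `log alert` type follow my understanding of Datadog log monitors and should be checked against the Monitors API reference. The function name, the 15-minute window, and the `managed-by:governance` tag are assumptions.

```python
from typing import Dict


def missing_tag_monitor(tag_key: str, notify: str) -> Dict:
    """Build a monitor body that fires when any log lacks the given tag key."""
    # "-team:*" matches logs that have no value at all for the "team" tag.
    query = f'logs("-{tag_key}:*").index("*").rollup("count").last("15m") > 0'
    return {
        "name": f"[governance] Logs missing standard tag '{tag_key}'",
        "type": "log alert",
        "query": query,
        "message": f"Logs without a {tag_key} tag were ingested. {notify}",
        "tags": ["managed-by:governance"],  # hypothetical marker tag
    }


# One monitor per standard tag defined in the organization.
monitors = [missing_tag_monitor(key, "@governance-team") for key in ("team", "env")]
```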

You should centrally manage these configurations and automatically roll them out to all child organizations at regular intervals. You can use automation processes that interact with the Datadog API to configure and reconfigure child organizations. You can implement these processes as AWS Lambda functions orchestrated by AWS Step Functions, or define them as Terraform templates to orchestrate a standard set of governance rules.
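A periodic rollout can be thought of as a reconciliation: compare the central set of governance rules with what each child organization currently has, and apply only the differences. The following sketch shows that comparison in plain Python. Fetching the current state and applying the plan are left out, and the `plan_rollout` function and the data shapes are hypothetical.

```python
from typing import Dict, List


def plan_rollout(
    desired: Dict[str, dict],
    actual_by_org: Dict[str, Dict[str, dict]],
) -> Dict[str, Dict[str, List[str]]]:
    """Return, per child organization, which standard rules to create or update."""
    plan: Dict[str, Dict[str, List[str]]] = {}
    for org, actual in actual_by_org.items():
        # Rules that the organization doesn't have yet.
        create = sorted(name for name in desired if name not in actual)
        # Rules that exist but have drifted from the central definition.
        update = sorted(
            name for name in desired
            if name in actual and actual[name] != desired[name]
        )
        if create or update:
            plan[org] = {"create": create, "update": update}
    return plan
```

Organizations that already match the central definition don't appear in the plan, so repeated runs are cheap and only touch organizations that have drifted.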

Key takeaways

Automating the provisioning and management of Datadog organizations at scale can help increase Datadog adoption while maximizing compliance and minimizing misconfigurations. Large enterprises can achieve these benefits by applying the aforementioned best practices and reference architecture. In summary, the following steps are particularly useful:

- Integrate Datadog access with your enterprise’s SSO.

- Automate the creation of Datadog users and organizations. Additionally, automate standard configurations.

- Standardize how development teams integrate Datadog with their cloud environments. Give these teams self-service automated processes that provision Datadog integration artifacts (for example, use Amazon Data Firehose as a standard funnel for logs toward Datadog).

- Automate the enforcement of compliance rules and alerts (for example, alerts for cost increases and tagging hygiene), and keep the configurations up to date.