Ben Michel

UX smoke testing is the process of determining whether critical user journeys in your app are usable at a basic level before you move on to more advanced stages of development and testing. This kind of testing is critical for maintaining user experience standards over time.

However, the amount of effort required to manually create and monitor UX smoke tests makes ensuring system-wide coverage a daunting task, especially because you need to make sure that key user journeys perform well not just in local testing environments but, more importantly, in every region you’re deploying in. Additionally, the metrics that teams typically monitor when smoke testing their UX—such as Core Web Vitals and the length of tasks on the main thread—are useful for getting a quick view into what your user experience looks like in the average session, but they don’t tell the whole story when you test for them in isolation. You’ll also need to dedicate time to continually updating your integration and end-to-end tests by hand whenever UI changes are made, as well as verifying user journeys manually. Taken together, these challenges often make smoke testing a considerable time sink that distracts from critical work.

Making synthetic monitoring a core element of your UX smoke testing process can address these pain points by helping you analyze user journeys across your deployments via change-resilient automated tests. In this post, we’ll explore how to:

- Design effective synthetic smoke tests

- Analyze the results of your smoke tests

- Increase the efficiency of your synthetic tests over time

Configuring synthetic tests for effective UX smoke testing

The goal of smoke testing is to surface major issues in your system early in the development or deployment process. This helps you determine whether your current app is functional at the most basic level before you move on to more granular testing and development stages. When scoped to your UX, smoke testing helps you determine whether users can perform actions critical to basic flows within your app. In addition to surfacing broken components, UX smoke tests highlight areas of your UI that may need further usability testing or optimizations to support specific user journeys.

Synthetic monitoring takes on the challenges of smoke testing your UX by running health checks in your automation and bringing the test results together in one place. This enables you to easily access functional, performance, API, and visual monitoring to make sure your UX performs well in each production and staging environment across all of your deployments.

To break this down a bit, let’s explore how some of the common types of UX smoke testing are handled by synthetic monitoring, as compared to the conventional tools:

| Smoke Testing Type | Synthetic Monitoring | Conventional Alternatives |
|---|---|---|
| Functional UI verification | Browser, API/Multistep API, and HTTP tests | Selenium, Cypress, Playwright |
| User journey and flow consistency | Browser tests and session replay | Cypress, TestCafe, Ghost Inspector |
| UX performance and load testing | Browser tests and individual session views | Lighthouse, Browser Performance Profiler, WebPageTest, k6, JMeter |
| Visual regression testing | Session screenshots and layout change alerting | Percy, Applitools, Chromatic |
| API reliability | API uptime monitoring and failure detection | Postman, REST Assured, WireMock |
| Cross-browser and platform testing | Regional multi-browser testing and testing on real mobile devices | BrowserStack, Sauce Labs, LambdaTest |
| Alerting and CI/CD | Integrates with monitoring, CI/CD, and alerting tools | Jenkins, GitHub Actions, CircleCI |

As this table shows, synthetic monitoring handles each of the common UX smoke testing types with only a handful of test methods. Additionally, performing synthetic testing within a UX monitoring platform enables you to take advantage of other features to understand your results, such as session replays, dashboards, and alerts.

Let’s dive deeper to see how using synthetic monitoring helps solve three major challenges of implementing efficient UX smoke testing solutions:

- Recreating real user behavior

- Scaling your tests and making them resilient

- Automating your testing workflow

Recreating real user behavior for more accurate UX tests

When initially designing their apps, most organizations use a combination of business goals and operational best practices to define key user journeys. However, users often interact with apps in unexpected ways. To ensure that the basic functionality of your app is usable, you need to be able to compare your ideal user journeys with simulations of actual user behavior. You can then work on refining your journeys to ensure that they’re realistic while still meeting your organization’s key objectives.

Many teams start developing their smoke testing suite by defining key user journeys as browser tests that assess the basic functionality of their apps from a user’s perspective. Then, they layer in API and HTTP tests to cover integration and end-to-end testing. By going with this approach and making synthetic tests for desktop and mobile browsers the foundation of your smoke testing strategy, you can easily see how well your app can handle realistic user interactions across real devices in different deployment regions. This enables you to determine whether critical app flows—such as logins, carts, and checkouts—actually work before you develop more supportive features. You can easily record these tests and play them back across regions for location-specific testing.

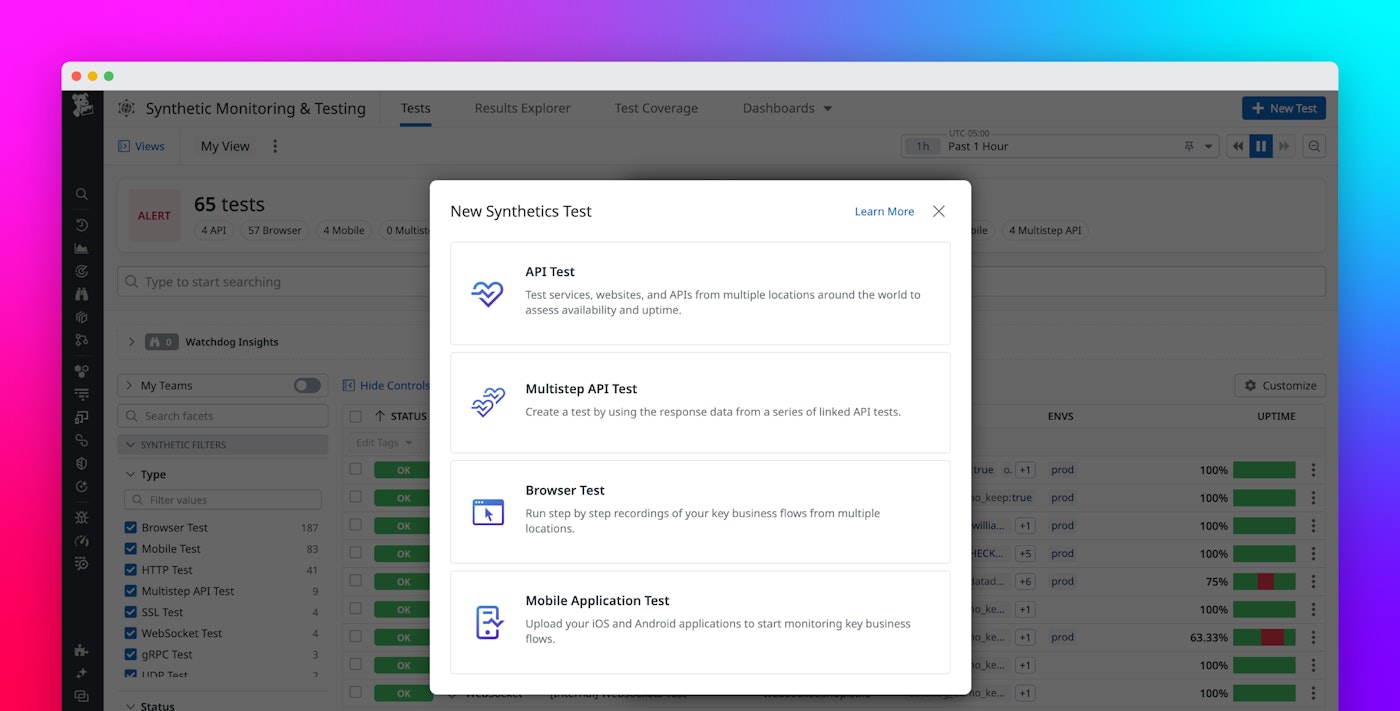

To test the critical user journeys that you’ve defined, you can start by installing Datadog’s test recorder extension—either manually or from the Chrome Web Store—and recording a browser test in Datadog Synthetic Monitoring for each journey. You can also use browser test templates to design tests for your specific use cases. Then, you can navigate to the Results Explorer within Synthetic Monitoring to analyze any failed tests and determine what went wrong. To investigate deeper, you can view the initial load latencies and Core Web Vitals scores for each problematic session in Datadog RUM.

Designing resilient UX tests that scale

The next challenge is to create durable smoke tests that scale along with your app’s updates. Because UX smoke testing begins early in the development process, these tests are especially vulnerable to UI changes—of which there will likely be many as you tweak your app to better accommodate real user behavior. Creating your smoke tests manually entails tedious updates every time page elements are moved, new components are added, or other changes are made that alter the DOM in ways your tests didn’t anticipate.

By contrast, some synthetic monitoring approaches include strategies for automatically detecting—or even adapting to—changes in your app. One method is to use locators to easily identify components even as major layout shifts occur, enabling you to keep track of UI elements from your early design stages all the way to deployment. For example, Datadog synthetic tests are built to be self-healing, automatically detecting app updates and adjusting themselves accordingly. To do this, these tests incorporate customizable locators that enable you to dynamically track elements throughout your app. When you need to make small changes—like adjusting the URL your API tests point to—low-code and no-code platforms such as Datadog make these updates as simple as possible.
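To make the idea of change-resilient locators concrete, here is a minimal sketch of a prioritized locator fallback. It only illustrates the general technique and is not how Datadog's self-healing tests are implemented; the DOM representation, attribute names, and the `find_element` helper are all hypothetical.

```python
def find_element(elements, locators):
    """Return the first element matched by any locator, trying locators in priority order."""
    for attribute, expected in locators:
        for element in elements:
            if element.get(attribute) == expected:
                return element
    return None


# Hypothetical locators for one button, from most to least stable.
locators = [
    ("data-test", "add-to-cart-button"),
    ("text", "Add to cart"),
    ("id", "btn-42"),
]

# The same button before and after a UI change (illustrative data only).
old_dom = [{"id": "btn-42", "data-test": "add-to-cart-button", "text": "Add to cart"}]
new_dom = [{"id": "btn-97", "text": "Add to cart"}]  # id changed, data-test removed

before = find_element(old_dom, locators)
after = find_element(new_dom, locators)  # still found, via the visible text
```

A test that relied only on the `id` would break after this change, while the fallback list still resolves the button through its text.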

Additionally, having your self-healing smoke tests hosted within a centralized UX monitoring solution enables you to scale your testing to accommodate app updates with as little friction as possible. You can easily integrate different test types into your UX smoke testing suite as your requirements evolve, such as cross-browser testing as you expand support for your app and load testing as you increase its audience.

To illustrate this, here are some examples of how each of Datadog’s synthetic test types can help you handle common UX smoke testing concerns:

| UX Smoke Test Concern | Browser Test | API Test | Multistep API Test | Mobile Test |
|---|---|---|---|---|
| Functional UI Verification | ✅ Simulates UI interactions | ✅ Verifies API responses | ✅ Checks API sequence reliability | ✅ Tests mobile UI elements |
| User Flow Consistency | ✅ Automates end-to-end UX flows | ✅ Monitors API health | ✅ Ensures API steps function together | ✅ Validates multi-step mobile navigation |
| UX Performance and Load Testing | ✅ Measures page speed & rendering | ✅ Tracks API latency & timeouts | ✅ Tests chained API request performance | ✅ Checks mobile load speed and responsiveness |
| Visual Regressions | ✅ Screenshots for UI changes | ❌ Not directly related | ❌ Not directly related | ✅ Captures mobile UI screenshots |
| API Reliability | ✅ Detects UI failures due to API issues | ✅ Monitors API availability | ✅ Ensures API step consistency | ✅ Ensures mobile app resilience to API failures |
| Cross-Browser Testing | ✅ Runs in different browsers & regions | ✅ Tests API latency globally | ✅ Checks API delays across regions | ✅ Validates UX across devices |
| Alerts and CI/CD | ✅ UI failure alerts in CI/CD | ✅ API failure alerts | ✅ API flow alerts in CI/CD | ✅ Detects mobile UI issues before deployment |

Integrating synthetic tests into your automated workflows

To integrate your UX tests into your delivery workflows, many traditional smoke testing strategies require you to write custom test scripts that run in your CI and CD automation. For example, to test a user journey from end to end, you would likely need to write some scripted commands for a particular testing framework—such as Cypress—to perform the journey for you. In this script, you would:

- Describe the user journey, such as: Login, Navigate to Item, and Add Item to Cart

- Create a test case definition that asserts whether all the user actions in this journey executed successfully

- Clear the environment of any cookies and local storage

- Simulate each part of the journey using a series of test actions provided by the library, such as:

  - login: `visit('/login')`

  - navigate to a product: `get('[data-test="shop-link"]').click()`

  - add a product to the user’s cart: `get('[data-test="add-to-cart-button"]').click()`

You would then need to build another script like this one for every user journey that your team identified as important enough to regularly smoke test.
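To give a sense of the structure such a handwritten script takes, here is a framework-agnostic sketch in Python. It is not Cypress code: `FakeBrowser` is a hypothetical in-memory stand-in for a real browser driver, and `run_journey` is an illustrative helper, so the example runs without any testing framework installed.

```python
class FakeBrowser:
    """Hypothetical in-memory stand-in for a browser driver (not a real library)."""

    def __init__(self, selectors_by_page):
        self.selectors_by_page = selectors_by_page  # page path -> selectors on that page
        self.cookies = {"session": "left over from a previous run"}
        self.local_storage = {"cart": "stale"}
        self.page = None

    def clear_state(self):
        self.cookies.clear()
        self.local_storage.clear()

    def visit(self, path):
        if path not in self.selectors_by_page:
            raise LookupError(f"page not found: {path}")
        self.page = path

    def click(self, selector):
        if selector not in self.selectors_by_page.get(self.page, set()):
            raise LookupError(f"element not found on {self.page}: {selector}")


def run_journey(browser, name, steps):
    """Clear state, run each (description, action) step in order, stop at the first failure."""
    browser.clear_state()
    for description, action in steps:
        try:
            action(browser)
        except LookupError as error:
            return f"{name}: FAILED at '{description}' ({error})"
    return f"{name}: passed"


# For simplicity, this fake keeps both elements on a single page.
browser = FakeBrowser({"/login": {'[data-test="shop-link"]', '[data-test="add-to-cart-button"]'}})
steps = [
    ("login", lambda b: b.visit("/login")),
    ("navigate to a product", lambda b: b.click('[data-test="shop-link"]')),
    ("add a product to the cart", lambda b: b.click('[data-test="add-to-cart-button"]')),
]
result = run_journey(browser, "Login, Navigate to Item, and Add Item to Cart", steps)
```

Even in this simplified form, every selector and every step order is hardcoded, which is why scripts like this need hand edits whenever the UI changes.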

Conversely, by replacing these handwritten tests with more flexible tests from synthetic monitoring, you can automatically handle the granular steps that you would otherwise be required to write yourself, like clearing the cache, checking if specific content exists on the page, and having to explicitly define your assertions in exactly the right order. Additionally, this approach saves you from having to manually update your pipeline configuration files.

With Datadog Synthetic Monitoring, the only real coding step that’s required is to set up your CI and CD to run the synthetic tests you create—after which you’ll most likely just be defining and updating your UX tests in Datadog as you go.

Setting this up is typically a matter of:

- Installing the datadog-ci module

- Connecting your synthetic tests to your CI/CD environment

- Configuring your CI and CD processes to run the tests
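As a rough sketch of the last step, a pipeline script could assemble the `datadog-ci` invocation for the tests it wants to run. The `synthetics run-tests` subcommand and `--public-id` flag are written here from memory of the datadog-ci documentation and should be checked against the version you install; the test IDs are placeholders and `build_run_tests_command` is a hypothetical helper.

```python
def build_run_tests_command(public_ids):
    """Build the argv for a datadog-ci run that triggers the given synthetic tests.

    Assumes the `synthetics run-tests` subcommand and `--public-id` flag;
    verify both against the datadog-ci documentation for your version.
    """
    if not public_ids:
        raise ValueError("at least one synthetic test public ID is required")
    command = ["datadog-ci", "synthetics", "run-tests"]
    for public_id in public_ids:
        command += ["--public-id", public_id]
    return command


# Placeholder IDs; real ones come from the tests you created in Synthetic Monitoring.
command = build_run_tests_command(["abc-def-ghi", "jkl-mno-pqr"])

# In a pipeline step you might then run it and fail the build on a non-zero exit code:
# subprocess.run(command, check=True)
```

Credentials are deliberately left out of the sketch; they would be supplied to the CI job as described in the datadog-ci documentation.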

For more details on how to integrate synthetic tests into your CI/CD pipelines like this, you can read our blog post.

Analyzing your UX smoke tests with synthetic monitoring

Well-designed UX smoke tests should highlight critical failures in your user journeys first and foremost. However, understanding the cause of these failures can be a challenge in and of itself. For example, a poor Largest Contentful Paint (LCP) score can be due to issues ranging from server-side hardware limitations to loading complex, process-heavy animations into your UI that block main thread execution for too long. As your test results accrue, failures (and failure patterns) will emerge in your UX performance metrics that point to exactly what needs to be fixed.

Let’s say you’re running a test that fails on a checkout journey. Looking at the error message, you see that an expected UI element was missing. A 202 status code was returned when the app tried to fetch the resources it needed for the user flow to work, and one of the resources it asked for was never returned. You decide to look over a session replay of the failed flow, which shows that the app timed out while attempting to load the missing element and triggered a user-facing error message. From there, you can also see that this same error is occurring in multiple locations when your smoke tests run. This helps you determine that this is a pervasive UX issue with far-reaching impacts on your users.
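The "same error in multiple locations" check from this scenario can be sketched as a simple grouping over test results. The result fields, location names, and error text below are made up for illustration and do not reflect any particular export format.

```python
from collections import defaultdict


def failure_locations(results):
    """Map each error message to the sorted list of locations where it occurred."""
    locations = defaultdict(set)
    for result in results:
        if not result["passed"]:
            locations[result["error"]].add(result["location"])
    return {error: sorted(places) for error, places in locations.items()}


# Illustrative results from one checkout test run in three regions.
results = [
    {"location": "us-east", "passed": False, "error": "element not found: #place-order"},
    {"location": "eu-west", "passed": False, "error": "element not found: #place-order"},
    {"location": "ap-south", "passed": True, "error": None},
]

by_error = failure_locations(results)
```

An error that shows up in several locations points to a problem in the app itself rather than in one region's infrastructure.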

Since your synthetic tests can be connected to your RUM traces, backend traces, and logs, finding the root cause is often as simple as diving into performance profiles of individual user sessions. To investigate the issue from the prior example, you first look over the traces for the failed checkout journey and immediately see the issue is coming from the HTTP calls being made to the backend checkout service.

You then review the logs that are directly related to those traces in the monitoring UI. In doing so, you discover that your app’s checkout service is rejecting transaction requests that exceed its API rate limit, causing failures when traffic is high. To mitigate this, you begin by reassessing how your HTTP library handles these requests to ensure better scalability, as pushing this issue to production could result in revenue loss. You then increase the transaction API’s rate limit as a temporary fix while your team works on a long-term solution.

Increasing the efficiency of your synthetic tests over time

Setting up comprehensive and fully distributed UX smoke testing in the near term is key for maintaining user satisfaction in the long run. But how do you identify and eliminate the blind spots in your smoke tests over time? How do you know what questions you’re not asking about how customers are using your app today? Uncovering these gaps can help you maintain the relevance of your smoke tests by showing you new or common user journeys that aren’t covered yet.

Correlating the observability data you’re generating in your synthetic tests with actual user behavior from real user monitoring solutions is a highly effective way to accomplish this. In Datadog, you can use RUM and Synthetic Monitoring together to identify common user journeys that aren’t obvious—and set up new synthetic tests to represent them.

This helps you identify your test coverage of the real actions your users are performing and see exactly where your blind spots are based on the actions you’re not currently testing for synthetically. With a clear view of which untested actions your real users perform the most, you can prioritize testing the most valuable of those actions moving forward.
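The underlying comparison can be sketched as a frequency count of real user actions that no synthetic test exercises. The action names and the `untested_actions` helper are hypothetical, and the sketch assumes you can list both the actions seen in RUM and the actions your tests cover.

```python
from collections import Counter


def untested_actions(rum_actions, tested_actions, top=3):
    """Return the most frequent real-user actions that no synthetic test covers."""
    counts = Counter(action for action in rum_actions if action not in tested_actions)
    return counts.most_common(top)


# Illustrative data: actions observed in real sessions vs. actions covered by tests.
rum_actions = [
    "login", "add_to_cart", "apply_coupon", "apply_coupon", "save_for_later",
    "apply_coupon", "checkout", "save_for_later", "edit_profile",
]
tested_actions = {"login", "add_to_cart", "checkout"}

gaps = untested_actions(rum_actions, tested_actions, top=2)
```

Ranking by frequency gives a simple first ordering for which new synthetic tests to write; business value may reorder that list.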

To complete your UX smoke testing life cycle, it’s important to determine and establish benchmarks for measuring the effectiveness of your synthetic tests. From there, you can then decide how you should optimize your UX smoke testing coverage.

Measuring the impact and success of your UX smoke tests

You’ve got your synthetic smoke tests up and running, and you’ve integrated them into your automation. How do you begin to think about measuring their impact and success?

Measuring impact

First, let’s consider some of the key ways that the impact of smoke testing your UX is typically measured:

Test coverage and effectiveness: Coverage and effectiveness metrics help you ensure that all of your critical user flows and components are covered by smoke tests. They are usually measured by calculating the percentage of your critical UX flows that are covered by UX smoke tests, their pass and fail rates, and how many of these flows are producing false negatives (i.e., how many journeys pass despite being broken or difficult to navigate). Some of the conventional tools often used to measure this are Cypress, Playwright, Selenium, and Jest.

Time-to-detect (TTD) and time-to-resolve (TTR): These metrics measure how quickly your UX issues are detected and resolved. A faster TTD reduces user impact, and a shorter TTR means your smoke tests are more efficient in helping you resolve UX issues as they arise. Conventional tools often used to measure these are PagerDuty, Opsgenie, Jira, and GitHub Issues.

Production incident reduction: This measurement shows how effectively your UX smoke testing process prevents real issues from reaching production. It’s continually tracked in order to identify a percentage of reduction in UX-related production incidents, with a lower number indicating that you’re catching issues early. Conventional tools often used to track production incident reduction are Sentry, Fullstory, and Hotjar.

User experience: UX metrics validate that your fixes are actually improving your application’s UX when you smoke test it. These metrics are typically measured using Core Web Vitals, alongside bounce and conversion rates in production to verify that the issues which surfaced in your smoke tests have been resolved. Conventional tools for measuring these are Google Lighthouse, browser performance profilers (e.g., the profiler in Chrome DevTools), and Hotjar.

Cost and efficiency: This category of metrics measures how much effort you spend on testing versus the value you deliver. These measurements are represented by the amount of time saved in manual UX testing, your reductions of hotfixes, and the number of emergency deployments you perform. These metrics can also include the cost reduction in customer support due to your ongoing UX issue mitigation. Conventional tools used for these are Jira metrics, Zendesk, and Intercom.

Test reliability and stability: Reliability and stability metrics ensure that your UX smoke tests provide consistent and accurate results. To attain these metrics, you typically measure your flaky test rate and overall smoke testing execution time. This helps you determine whether all of the tests you run frequently in your CI/CD are optimized and fast. Conventional tools often used to measure these are Cypress dashboards and the Playwright Trace Viewer.
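Several of the measurements above reduce to simple arithmetic once the raw data is available. The sketch below shows one way to compute coverage, mean TTD and TTR, and a flaky test rate. It makes two assumptions that teams define differently: TTR is measured from detection to resolution, and a test counts as flaky if it both passed and failed against the same commit. All data and helper names are illustrative.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def coverage_percent(critical_flows, tested_flows):
    """Share of critical flows that have at least one smoke test."""
    critical = set(critical_flows)
    if not critical:
        return 0.0
    return 100 * len(critical & set(tested_flows)) / len(critical)


def mean_duration(intervals):
    """Average length of a non-empty list of (start, end) datetime pairs."""
    total = sum((end - start for start, end in intervals), timedelta())
    return total / len(intervals)


def flaky_rate_percent(runs):
    """Share of tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)  # (test, commit) -> set of observed pass/fail outcomes
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    tests = {test for test, _ in outcomes}
    flaky = {test for (test, _), seen in outcomes.items() if len(seen) == 2}
    return 100 * len(flaky) / len(tests) if tests else 0.0


coverage = coverage_percent(
    {"login", "search", "cart", "checkout"}, {"login", "cart", "checkout", "profile"}
)

incidents = [
    {"started": datetime(2025, 1, 6, 9, 0), "detected": datetime(2025, 1, 6, 9, 4),
     "resolved": datetime(2025, 1, 6, 9, 34)},
    {"started": datetime(2025, 1, 9, 14, 0), "detected": datetime(2025, 1, 9, 14, 10),
     "resolved": datetime(2025, 1, 9, 15, 10)},
]
ttd = mean_duration([(i["started"], i["detected"]) for i in incidents])
ttr = mean_duration([(i["detected"], i["resolved"]) for i in incidents])

runs = [
    ("login", "a1", True), ("login", "a1", True),
    ("checkout", "a1", True), ("checkout", "a1", False),  # same commit, mixed outcomes
    ("search", "a1", False), ("search", "a2", True),      # fixed by a later commit
    ("cart", "a1", True),
]
flaky = flaky_rate_percent(runs)
```

With this sample data, three of four critical flows are covered, and only `checkout` is counted as flaky because `search` changed outcome across commits rather than within one.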

There are a variety of ways to collect these measurements across the spectrum of synthetic monitoring platforms that are commonly used today, especially since most of these solutions integrate well with the conventional testing tools mentioned above. In some cases, teams choose to cross-reference data across their toolset—for example, they may compare delivery benchmarks from their automation with issue resolution timestamps from their incident management system. On the other hand, some platforms consolidate, generate, and correlate these kinds of metrics into one place. To see this in action, let’s consider how Datadog Synthetic Monitoring helps you measure the overall impact of your UX smoke testing:

| Measurement type | Key metrics | Goal | Synthetic monitoring function | How it helps |
|---|---|---|---|---|
| Test coverage and effectiveness | Percentage of critical UX flows tested, pass/fail rate | Ensure major UX flows are covered | Browser tests, API tests, and multistep API tests | Test results are available in the test list, monitored by alerts, and visible in dashboards |
| Time-to-Detect (TTD) and Time-to-Resolve (TTR) | TTD, TTR | Reduce issue detection and resolution time | Synthetic monitoring alerts and test history | Provides real-time alerts when a UX test fails and logs historical failures for debugging trends |
| Production incident reduction | Percentage of reduction in UX incidents, severity of issues | Prevent major UX issues from reaching users | Browser and API tests with RUM correlation | Detects issues before users experience them by combining synthetic tests with real user monitoring data |
| User experience | Core Web Vitals, bounce rate, conversion rate | Validate that UX smoke testing improves real UX | Performance metrics in browser tests | Monitors page speed, LCP, INP, CLS, and interaction delays, helping you track UX degradation over time and correlate it with bounce and conversion rates |
| Cost and efficiency | Time saved in manual QA, hotfix reduction, support cost reduction | Ensure efficient testing without excessive cost | CI/CD integration and synthetic testing automation | Runs tests automatically in CI/CD pipelines, reducing manual QA work and helping prevent costly hotfixes |
| Test reliability and stability | Flaky test rate, test execution time | Ensure fast, stable, and reliable tests | Synthetic test history (in aggregate) | Analyzes test stability over time, helping to detect flaky tests and optimize execution time |

As you can see, coverage is just the start, and there are many ways to dissect the ongoing impact of your smoke testing process with synthetic monitoring platforms. Ultimately, though, an impactful UX smoke testing process is one with a comprehensive collection of tests that accurately represent real user journeys, ensure every API operates together as intended, and address critical business concerns as they grow and evolve.

Measuring success

The most direct way to track the success of your UX smoke testing process is to build and maintain dashboards that give you continuous visibility into each of the core measurements identified above. At the very least, you’ll want to maintain one dashboard that focuses on key testing concerns (such as test reliability and coverage) and another that shows your team the impact that your smoke testing has on your UX performance and business goals.

These dashboards help you measure the success of your smoke testing strategy by bringing together synthetic data from across all your testing regions. To monitor the effectiveness and reliability of the tests themselves, you can track metrics such as the number of flaky tests generated over time, test durations, and the pass/fail rates of your tests. To look at business and user impact, you’ll want to focus on the user experience signals that directly impact your audience the most—like Core Web Vitals—and the metrics from crucial events that directly impact the viability of your application, including initial load times, bounce rates, and checkout failures.

Most importantly, these dashboards are where you can continually set up monitor alerts to ensure the average scores of those vital metrics stay in check, and bring them back to where they should be when they fall beyond an acceptable threshold. You can also use these dashboards to validate your SLOs by tracking their current statuses and burn rates for critical journeys. For example, if you’re using a user journey test to monitor a checkout flow, you can create an SLO to track your application’s uptime based on the test result and add that to your business KPI and UX performance widgets on your dashboards.
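For readers new to burn rates, the arithmetic behind one can be sketched as follows. This uses the common definition of burn rate as the observed error rate divided by the error budget, which is an assumption here rather than a description of how Datadog computes it; `burn_rate` is a hypothetical helper and the numbers are illustrative.

```python
def burn_rate(failed_runs, total_runs, slo_target):
    """Observed failure rate divided by the error budget implied by the SLO target.

    A value of 1.0 means the error budget is being spent exactly at the sustainable
    pace; values above 1.0 mean it will run out before the SLO window ends.
    """
    error_budget = 1 - slo_target
    if total_runs <= 0 or error_budget <= 0:
        raise ValueError("need at least one run and an SLO target below 100%")
    return (failed_runs / total_runs) / error_budget


# Illustrative numbers: 5 failed checkout test runs out of 1,000 against a 99% target.
checkout_burn = burn_rate(failed_runs=5, total_runs=1000, slo_target=0.99)
```

Here the checkout journey is failing in 0.5% of runs against a 1% budget, so it is consuming the budget at roughly half the sustainable pace.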

Optimizing your UX smoke testing coverage

As a final consideration, you’ll want to think about how your smoke testing process can become more refined as the tools that are available to you evolve.

Here are a few ways in which you can continue to do that:

- Set up an alert for your team that lets you know when new untested user actions and journeys appear in your test coverage dashboard widget.

- Continually improve your API test coverage whenever it’s needed.

- If you’re using Datadog Synthetic Monitoring, you can also use the tools that are available to you in Datadog Product Analytics, like Pathway diagrams and heatmaps, to see which aspects of your app’s design and implementation are causing your users to choose their most-used routes.

- Consider trying new updates to your synthetic monitoring platform when they come—for example, continuous testing parallelization in Datadog reduces the time it takes to execute your synthetic tests, thereby bringing down your smoke testing time as well.

As the smoke clears

Making synthetic monitoring a central part of your UX smoke testing process is more than a matter of replacing your existing smoke tests with something else. It means making sure that your synthetic user journeys are indistinguishable from the real ones, your tests scale with your app, updates don’t halt your existing smoke testing process (or make your automation fail), and your smoke tests quickly report the current state of your app’s UX across all regions.

Smoke testing your UX efficiently with a synthetic monitoring solution increases your UX testing accuracy, decreases the time it takes to perform your integrations and deployments, and continually improves your app’s value by helping it meet—and exceed—your users’ expectations.

To get started with Datadog Synthetic Monitoring, you can use our documentation. With our synthetic testing templates, you can easily design UX smoke tests that are built for your specific use cases. If you’re new to Datadog, you can sign up for a 14-day free trial.