Azure OpenAI Service is Microsoft’s fully managed platform for deploying generative AI services powered by OpenAI. It provides access to models including GPT-4o, GPT-4o mini, GPT-4 Turbo with Vision, DALL-E 3, and the Embeddings model series, alongside the enterprise security, governance, and infrastructure capabilities of Azure.

Organizations running enterprise-scale LLM applications with Azure OpenAI Service can monitor and troubleshoot application performance across their entire stack using Datadog’s extensive Azure integration. With full visibility into more than 60 related Azure services, including Azure AI Search, Azure Cosmos DB, Azure Kubernetes Service, and Azure App Service, Datadog makes it easier to deliver optimal performance across both internal and customer-facing LLM applications.

We are pleased to announce Datadog LLM Observability’s native integration with Azure OpenAI Service, with out-of-the-box auto-instrumentation for tracing Azure OpenAI applications. This enables Azure OpenAI Service customers to use Datadog LLM Observability for:

- Enhanced visibility and control, with real-time metrics that provide insights into Azure OpenAI Service models’ performance and usage

- Streamlined troubleshooting and debugging, with granular visibility into LLM chains via distributed traces

- Quality and safety assurance, with out-of-the-box evaluation checks

In this post, we will discuss how these features within Datadog LLM Observability help AI engineers and DevOps personnel maintain performant, safe, and secure applications using Azure OpenAI at enterprise scale.

Track Azure OpenAI usage patterns

In enterprise-scale Azure OpenAI environments that tackle complex use cases, it’s crucial to monitor requests, latency, and token consumption effectively. You can track many of these metrics with LLM Observability’s out-of-the-box Operational Insights dashboard, which provides a comprehensive view of application performance and usage trends across your organization. The dashboard surfaces detailed operational metrics such as trace- and span-level errors, latency, token consumption, model usage statistics, and any triggered monitors.

This bird’s-eye view of your Azure OpenAI app performance enables you to quickly spot potential issues, such as high token consumption driving excessive cost, resource exhaustion increasing latency, and failed prompts caused by application errors. From there, it becomes easier to decide where to focus further investigation.
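To make the kind of roll-up this dashboard performs more concrete, here is a minimal Python sketch that aggregates per-call records into per-model request counts, token totals, error rates, and p95 latency. The `LLMSpan` record and the `summarize` and `p95_nearest_rank` helpers are hypothetical illustrations, not part of Datadog’s API or data model; in practice, LLM Observability collects and aggregates these metrics for you.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LLMSpan:
    """Hypothetical record of a single LLM call (not a Datadog data structure)."""
    model: str
    duration_ms: float
    prompt_tokens: int
    completion_tokens: int
    error: bool = False


def p95_nearest_rank(values):
    """95th percentile via the nearest-rank method; integer math avoids float rounding."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # equivalent to ceil(0.95 * n)
    return ordered[rank - 1]


def summarize(spans):
    """Group spans by model and compute request count, token total, error rate, and p95 latency."""
    by_model = defaultdict(list)
    for span in spans:
        by_model[span.model].append(span)

    summary = {}
    for model, group in by_model.items():
        summary[model] = {
            "requests": len(group),
            "total_tokens": sum(s.prompt_tokens + s.completion_tokens for s in group),
            # True counts as 1, so this is the fraction of calls that errored.
            "error_rate": sum(s.error for s in group) / len(group),
            "p95_latency_ms": p95_nearest_rank(s.duration_ms for s in group),
        }
    return summary
```

Feeding it a handful of records shows at a glance which deployment consumes the most tokens or fails most often, which is the same question the dashboard helps you answer across your whole organization.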

Troubleshoot issues in your Azure OpenAI application faster with end-to-end tracing

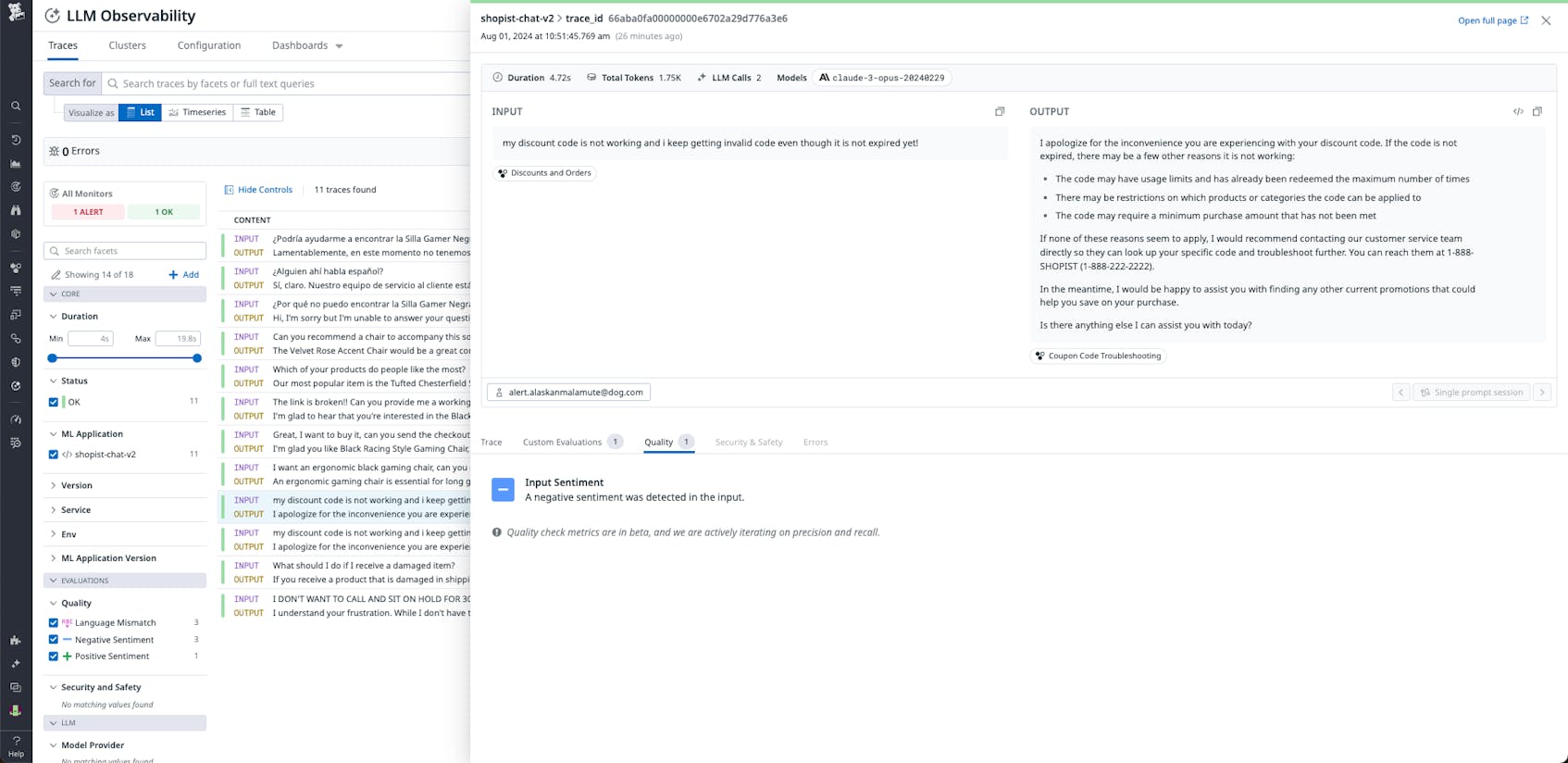

Optimizing the performance of your Azure OpenAI application requires granular visibility into your LLM service’s execution across the chain. Datadog LLM Observability provides detailed traces you can use to spot errors and latency bottlenecks at each step of the chain execution.

By tracking down sources of latency, you can determine which of your Azure infrastructure resources need to be scaled to improve performance, or investigate whether token optimization strategies would make prompting more efficient. LLM Observability lets you inspect all related context—such as information retrieved via retrieval augmented generation (RAG), or information removed during a moderation step—for any prompts that execute in a session, so you can understand how the system prompts are being formed and look for optimizations.
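As a rough illustration of how a trace exposes a latency bottleneck, the sketch below computes each span’s self time (its duration minus the time spent in its child spans) and returns the span with the largest self time. The `Span` class, the span names, and the helper functions are hypothetical, and the sketch assumes child spans run sequentially rather than in parallel.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Span:
    """Hypothetical span in a chain trace; names and fields are illustrative only."""
    name: str
    duration_ms: float
    children: list[Span] = field(default_factory=list)


def self_times(span: Span, out: dict[str, float] | None = None) -> dict[str, float]:
    """Map each span name to its self time: its own duration minus its children's.

    Assumes children run sequentially; overlapping (parallel) children would make
    the subtraction overcount, so the result is clamped at zero. Span names are
    assumed to be unique within the trace.
    """
    if out is None:
        out = {}
    child_total = sum(child.duration_ms for child in span.children)
    out[span.name] = max(0.0, span.duration_ms - child_total)
    for child in span.children:
        self_times(child, out)
    return out


def bottleneck(root: Span) -> str:
    """Return the name of the span that contributes the most self time."""
    times = self_times(root)
    return max(times, key=times.get)
```

In a RAG workflow, for example, a large self time on the retrieval span would point toward scaling the underlying search resources, while a large self time on the model call might point toward prompt or token optimizations.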

By facilitating smoother troubleshooting, tracing your Azure OpenAI apps with LLM Observability makes it easier to resolve critical errors and latency issues quickly and limit their impact on customers.

Evaluate your Azure OpenAI application for quality and safety issues

Azure OpenAI is committed to responsible AI practices that limit the potential for malicious exploits, harmful model hallucinations, misinformation, and bias. These concerns are paramount for large enterprises, whose AI tools can easily become targets of frequent attacks and manipulation attempts. Datadog LLM Observability supports these practices with out-of-the-box quality and safety checks that help you monitor the quality of your application’s output, as well as detect prompt injection attempts and toxic content in your application’s prompts and responses.

The trace side panel displays these checks, which include “Failure to answer” and “Topic relevancy” to assess whether responses succeed, as well as “Toxicity” and “Negative sentiment” to flag potentially poor user experiences. Together, these features help ensure that your LLM applications operate reliably and ethically, addressing both performance and safety concerns.

LLM Observability also uses Sensitive Data Scanner to scrub personally identifiable information (PII) from prompt traces by default, which also helps you detect when customer PII has been inadvertently passed in an LLM call or shown to a user.
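For intuition about what scrubbing PII from a prompt looks like, here is a deliberately simple, regex-based Python sketch that redacts email addresses and US-style phone numbers and reports which rules matched. It is not how Sensitive Data Scanner is implemented; the patterns, the placeholder format, and the `scrub` function are hypothetical, and production scanners rely on much broader, carefully tuned rule sets.

```python
import re

# Hypothetical, minimal rules for illustration only; not Sensitive Data Scanner's rule set.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scrub(text: str) -> tuple[str, list[str]]:
    """Replace PII matches with placeholders and return the labels of rules that fired."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        # subn returns the rewritten string and the number of substitutions made.
        text, count = pattern.subn(f"[REDACTED_{label}]", text)
        if count:
            found.append(label)
    return text, found
```

Returning the list of matched rules alongside the redacted text is what makes detection possible: a non-empty list signals that PII reached the prompt in the first place.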

Optimize your Azure OpenAI applications with Datadog

Azure OpenAI Service makes it easier for organizations to build and support generative AI at enterprise scale. By monitoring your Azure OpenAI applications with Datadog LLM Observability, you can gain actionable insights into their health, performance, cost, security, and safety from a single consolidated view.

LLM Observability is now generally available for all Datadog customers. See our documentation for more information about how to get started. If you’re brand new to Datadog, you can sign up for a free trial of our Azure OpenAI Service integration package.