Tom Sobolik

Shri Subramanian

Evaluating the functional performance of LLM applications is paramount to ensuring they continue to work well over time amid changing trends in your production environment. But producing effective metrics for evaluating LLMs poses significant challenges. When models are deployed to answer customer questions, evaluate support interactions, or generate data insights and other content, it can be difficult to obtain a stable ground truth to evaluate the application with. Further, evaluations must be tailored to the application’s specific use case in order to properly measure qualities like accuracy, relevancy, coherence, toxicity, and sentiment in LLM inputs and outputs.

A number of evaluation approaches, including code-based, LLM-as-a-judge, and human-in-the-loop methods, can be considered as you build your evaluation framework. In this post, we’ll explore some of the most important considerations when choosing how to evaluate your LLM application within a comprehensive monitoring framework. We’ll also discuss how to approach obtaining evaluation metrics and monitoring them in your production environment.

Choose the right metrics for your use case

In order for your LLM application to succeed in production, outputs need to be factually accurate, adhere to your organization’s brand voice and security and safety policies, and remain within the scope of your application’s intended domain. An effective LLM evaluation framework will include metrics to characterize both prompts and responses—as well as internal inputs and outputs in agentic or chain-based LLM applications—for insights into application performance across all these dimensions. Aside from operational performance metrics that can be obtained via traces, such as request latency, application error rates, and throughput, let’s discuss the primary types of evaluation metrics for measuring an LLM app’s functional performance:

Context-specific evaluation

When your model is fine-tuned for specific tasks or searching through data stores at request time to produce responses, its output needs to be grounded within the established context. For instance, if your model is responding to a user prompt by leveraging retrieved context from a Retrieval-Augmented Generation (RAG) pipeline, but its answer contains information that can’t be gleaned from that context, the model may have hallucinated. Context-specific evaluations can help you gauge your application’s ability to retrieve relevant context and infer from it appropriately to produce outputs. The needle-in-the-haystack test is a common code-based approach for evaluating context retrieval, and faithfulness evaluations are typically produced to evaluate LLMs’ self-consistency within an LLM-as-a-judge framework.

Needle in the haystack

Put simply, the needle-in-the-haystack test checks how well an LLM can retrieve a discrete piece of information from within all the data in its context window. The developers of major foundation models, including Google Gemini and Anthropic Claude, have used this evaluation to test long-context retrieval.

By applying the needle-in-the-haystack evaluation across different depths and context sizes (i.e., adjusting the location of the needle within the rest of the context and changing the size of the dataset), you can test whether your chosen model is able to effectively parse the information in your RAG dataset. Typically, a needle-in-the-haystack evaluator will execute the following steps:

- Embed the “needle” by placing a specific statement into the RAG pipeline’s vector store.

- Prompt the model to answer a question solved by the statement using only the provided context.

- Check if the answer semantically matches the information provided by the needle.

- Repeat the test at varying depths and context sizes.
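The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: `ask_model` is a hypothetical callable standing in for your LLM application, the haystack is a plain list of filler sentences rather than a real vector store, and the semantic match is approximated with a case-insensitive substring check (a judge model or embedding similarity would likely be more robust in practice).

```python
from typing import Callable, Dict, List, Tuple


def build_context(filler: List[str], needle: str, size: int, depth: float) -> str:
    """Insert the needle at a relative depth (0.0 = start, 1.0 = end)
    within the first `size` filler sentences."""
    haystack = filler[:size]
    position = round(depth * len(haystack))
    return " ".join(haystack[:position] + [needle] + haystack[position:])


def run_needle_test(
    ask_model: Callable[[str, str], str],  # hypothetical: (context, question) -> answer
    filler: List[str],
    needle: str,
    question: str,
    expected: str,
    sizes: List[int],
    depths: List[float],
) -> Dict[Tuple[int, float], bool]:
    """Return pass/fail for every (context size, depth) combination."""
    results: Dict[Tuple[int, float], bool] = {}
    for size in sizes:
        for depth in depths:
            context = build_context(filler, needle, size, depth)
            answer = ask_model(context, question)
            # Assumption: a substring check stands in for a semantic comparison.
            results[(size, depth)] = expected.lower() in answer.lower()
    return results
```

The resulting grid of pass/fail values, keyed by context size and depth, can then be plotted or aggregated to show where retrieval starts to degrade.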

Faithfulness

Faithfulness evaluations use a secondary LLM to test whether an LLM application’s response can be logically inferred from the context the application used to create it—typically with a RAG pipeline. A response is considered faithful if all its claims can be supported by the retrieved context, while a low faithfulness score can indicate the prevalence of hallucinations in your RAG-based LLM app’s responses. Open source providers like Ragas and DeepEval offer out-of-the-box faithfulness evaluators you can integrate into your experimentation and monitoring. Typically, a faithfulness evaluator will execute the following steps:

- Break down the response into discrete claims.

- Ask an LLM whether each claim can be inferred from the provided context.

- Determine the fraction of claims that were correctly inferred.

- Produce a score between 0 and 1 from this fraction.
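As a rough sketch of the scoring logic in these steps, the function below takes two hypothetical callables: `extract_claims`, which splits a response into claims, and `is_supported`, which wraps the judge LLM call. Neither name comes from Ragas or DeepEval; they are placeholders for whatever claim extraction and judging you use. Returning 1.0 for a response with no claims is also an assumption you may want to change.

```python
from typing import Callable, List


def faithfulness_score(
    response: str,
    context: str,
    extract_claims: Callable[[str], List[str]],  # hypothetical: response -> claims
    is_supported: Callable[[str, str], bool],    # hypothetical judge: (claim, context) -> bool
) -> float:
    """Fraction of claims in the response that the judge deems supported by the context."""
    claims = extract_claims(response)
    if not claims:
        # Assumption: a response that makes no claims cannot contradict the context.
        return 1.0
    supported = sum(1 for claim in claims if is_supported(claim, context))
    return supported / len(claims)
```

Keeping claim extraction and judging as separate callables makes it easier to swap in a different judge model or to test the arithmetic with simple stand-ins.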

User experience evaluation

When monitoring your LLM application in production, it can be difficult to evaluate the truthfulness and effectiveness of responses without resorting to long and costly human-in-the-loop methods that require your organization to employ people to manually evaluate and label model outputs. By creating evaluations that leverage user experience data from your application’s inputs and outputs, you can form cheaper heuristics that can still signal when your application is producing incorrect outputs, straying from its response guardrails, or running into other issues. Topic relevancy and negative sentiment evaluations are two common LLM-as-a-judge approaches for measuring the effectiveness of an LLM app with user experience data.

Topic relevancy

In an LLM conversation, topic relevancy is a binary measurement describing the relevance of a question or answer to the LLM application’s established domain. By measuring the relevancy of LLM conversations, you can characterize how your application responds when it is asked something off topic and ensure that proper prompt guardrails are in place to prevent hallucinations, security breaches, and other risks. Topic relevancy can be improved by setting guardrails that dictate more precise boundaries for what the application is allowed to discuss. And in agentic applications, a reflection system can be created to iterate the response through a cycle of relevancy evaluation during the agent execution.

Topic relevancy is usually calculated by prompting a secondary LLM to judge whether the question or answer falls within a defined domain boundary. This boundary is defined within the secondary model’s prompt template. For example, let’s say your application answers customer support questions about commercial air travel. To evaluate relevancy for this topic, your prompt template could include the following criteria (adapted from these templates provided by Google Cloud):

# Instruction
You are an expert evaluator. Your task is to evaluate the quality of the responses generated by AI models.
We will provide you with the user input and an AI-generated response.
You should first read the user input carefully for analyzing the task, and then evaluate the quality of the responses based on the Criteria provided in the Evaluation section below.

# Evaluation
## Metric Definition
You will be assessing topic relevancy, which measures whether or not the response is within the relevant domain boundary.

## Criteria
Topic Relevancy: the response contains only information relevant to the airline, including (but not limited to) pricing, scheduling, refunds, re-booking, airline policies, visas and international travel guidelines, etc. The response does not contain any information related to other topics.

Negative sentiment

Sentiment analysis in LLMs describes evaluations that are used to characterize the tone of conversations in an LLM app to detect negative user experiences. By detecting negative sentiment in your app’s user sessions, you can flag potential instances of user frustration or application misuse and spot when fine-tuning your model or addressing application issues might be needed. Negative sentiment evaluations are typically performed using the following steps:

- Break down the response into discrete statements.

- For each statement, ask a secondary model if that statement is negative, neutral, or positive.

- Calculate the fraction of all statements that were labeled negative.

- Assign a score between 0 and 1 based on that fraction.
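One way to sketch these steps is shown below. The `classify` callable is a hypothetical wrapper around the secondary model and is assumed to return one of the three labels as a string; validating the labels guards against a judge that replies with something unexpected. Scoring an empty session as 0.0 is an assumption.

```python
from typing import Callable, List

VALID_LABELS = {"negative", "neutral", "positive"}


def negative_sentiment_score(
    statements: List[str],
    classify: Callable[[str], str],  # hypothetical judge: statement -> label
) -> float:
    """Fraction of statements labeled negative, in the range 0 to 1."""
    if not statements:
        # Assumption: no statements means no negative sentiment was observed.
        return 0.0
    labels = [classify(statement).strip().lower() for statement in statements]
    unexpected = [label for label in labels if label not in VALID_LABELS]
    if unexpected:
        raise ValueError(f"Judge returned unexpected labels: {unexpected}")
    return labels.count("negative") / len(labels)
```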

Security and safety evaluation

Monitoring your LLM application’s inputs and outputs for security and safety breaches is paramount to preventing malicious actors from compromising the functionality of your application and causing reputational harm to your organization. You can perform evaluations in pre-production that test how your application responds to attempts to elicit biased or inappropriate responses or convince the model to take unauthorized actions within your application. Then, in post-production, you can use these evaluations to flag toxicity in inputs and outputs and track prompt injection attack attempts.

A simple toxicity evaluation can be performed by flagging matches in model inputs and outputs with a set of reserved words. However, this approach may not conform to your organization’s standards of toxicity, and it can be difficult to maintain a master list of every banned term. By using an LLM-as-a-judge approach, your evaluation can capture subtler examples of toxicity and avoid reliance on a hard-coded rubric that would have to be maintained over time. Open source models tuned for toxicity detection like this one from Meta can be employed for your evaluator. Your prompt template should provide a clear definition of toxicity (e.g., “abusive speech targeting specific group characteristics, such as ethnic origin, religion, gender, or sexual orientation.”). It should then ask the model to evaluate inputs and outputs against this definition on a Likert scale, so that a numerical score can be produced for metric collection. You can experiment with chunking inputs and outputs into discrete statements to get a potentially more accurate evaluation.
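To make the two approaches concrete, here is a small sketch of both scoring mechanics. `contains_reserved_word` shows the simple reserved-word check, and `likert_to_score` shows one possible way to map a judge's Likert rating onto a 0 to 1 metric. Both function names, the tokenization rule, and the 1-to-5 default scale are illustrative assumptions rather than part of any particular toolkit.

```python
import re
from typing import Set


def contains_reserved_word(text: str, reserved: Set[str]) -> bool:
    """Flag text containing any reserved word as a whole token (case-insensitive)."""
    reserved_lower = {word.lower() for word in reserved}
    # Assumption: tokens are runs of letters and apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    return any(token in reserved_lower for token in tokens)


def likert_to_score(rating: int, scale_max: int = 5) -> float:
    """Map a Likert rating in [1, scale_max] linearly onto [0.0, 1.0]."""
    if scale_max < 2:
        raise ValueError("scale_max must be at least 2")
    if not 1 <= rating <= scale_max:
        raise ValueError(f"rating must be between 1 and {scale_max}")
    return (rating - 1) / (scale_max - 1)
```

Note that whole-token matching misses variants such as misspellings or inflected forms, which is part of why the keyword approach is hard to maintain.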

Toxicity in LLM application outputs is often caused by direct prompt injection (also known as jailbreaking), which describes attacks on an LLM application through prompts that are designed to subvert its security and safety guardrails. JailbreakEval is an open source jailbreak evaluation toolkit that collates various LLM-as-a-judge jailbreaking evaluators. For more information about monitoring prompt injections, see our blog post.

Create and collect evaluations in pre-production

LLM evaluation typically happens during both pre-production experimentation and post-production monitoring. Pre-production evaluations on factors like contextual awareness, topic relevancy, and security can help your teams fine-tune model parameters, improve guardrails, and optimize information retrieval systems. Before pushing an LLM app to production, teams typically build out an annotated “golden” dataset for experimentation. The data is annotated with ground truth labels to facilitate code-based and LLM-as-a-judge evaluations. This dataset is then fed into the application to run experiments. Broadly, pre-production evaluations typically involve the following steps:

- Create questions from existing datasets

- Provide corresponding ground truth answers

- Compare generated responses against expected answers

Create questions from existing datasets

Systems must be put in place for enriching production data to create a ground truth-annotated “golden” dataset that can be used to compute evaluations—this can be difficult to do on a quick cadence to get timely evaluations, and is heavily use-case dependent. There are a number of popular open source test datasets for different LLM use cases, but many teams still opt to build on these and create their own custom test data. Depending on the function of the application and the nature of its output, ground truth labels need to be obtained with different methods (if they can be obtained at all). This provides the evaluation system with something to compare the app’s responses against. It’s important for tests to cover a broad range of cases, including:

- “Happy path” cases: expected and common inputs

- Edge cases: atypical, ambiguous, or complex inputs

- Adversarial cases: malicious or tricky inputs designed to test safety and error tolerance

As you develop your test set, you will make decisions about what a “good” response looks like. For example, in an e-commerce chatbot, if the user asks about competitors, your chatbot could provide a fact-based competitor comparison as long as it sticks to your knowledge base, or it could decline to answer.

Provide corresponding ground truth answers

Creating ground truth labels can be time consuming and usually requires a human-in-the-loop element. It’s possible to use an LLM to generate responses (that humans in the loop can review and edit if necessary) in cases where correctness is easy to verify. Your test set will consist of database tables with prompts and ground truth responses, enabling you to load in test data via a pipeline and run evaluations on each row to form an aggregate picture of your application’s performance.
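As an illustration of what such a table might look like, the sketch below uses Python's built-in `sqlite3` module. The table name `golden_set`, its columns, and the three category values (mirroring the happy path, edge, and adversarial cases described earlier) are assumptions chosen for this example, not a prescribed schema.

```python
import sqlite3
from typing import List, Tuple


def create_golden_table(conn: sqlite3.Connection) -> None:
    """Create a hypothetical table of prompts and ground truth answers."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS golden_set (
            id INTEGER PRIMARY KEY,
            category TEXT NOT NULL
                CHECK (category IN ('happy_path', 'edge', 'adversarial')),
            prompt TEXT NOT NULL,
            ground_truth TEXT NOT NULL
        )
        """
    )


def add_case(conn: sqlite3.Connection, category: str, prompt: str, ground_truth: str) -> None:
    """Insert one annotated test case using a parameterized query."""
    conn.execute(
        "INSERT INTO golden_set (category, prompt, ground_truth) VALUES (?, ?, ?)",
        (category, prompt, ground_truth),
    )


def load_cases(conn: sqlite3.Connection) -> List[Tuple[str, str, str]]:
    """Load every row so an evaluation pipeline can iterate over it."""
    cursor = conn.execute(
        "SELECT category, prompt, ground_truth FROM golden_set ORDER BY id"
    )
    return cursor.fetchall()
```

Storing the category alongside each row makes it possible to break aggregate scores down by case type later on.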

Compare generated responses against expected answers

Once you have a completed dataset, you can run a service that sends each prompt to your LLM application and evaluates the response against the provided ground truth answer. To get a more reliable picture, you can run multiple experiments and compare the aggregate evaluations of one experiment against another.
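A minimal sketch of such an experiment runner follows. Here `app` is a hypothetical callable wrapping your LLM application, and `exact_match` is a deliberately simple placeholder evaluator; for free-form answers you would likely substitute an LLM-as-a-judge or similarity-based comparison.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, str]  # (prompt, ground truth answer)


def exact_match(response: str, ground_truth: str) -> float:
    """Placeholder evaluator: 1.0 on a case-insensitive exact match, else 0.0."""
    return 1.0 if response.strip().lower() == ground_truth.strip().lower() else 0.0


def run_experiment(
    cases: List[Case],
    app: Callable[[str], str],  # hypothetical: prompt -> response
    evaluate: Callable[[str, str], float] = exact_match,
) -> float:
    """Run every prompt through the app and return the mean evaluation score."""
    if not cases:
        raise ValueError("The test set is empty")
    scores = [evaluate(app(prompt), truth) for prompt, truth in cases]
    return sum(scores) / len(scores)
```

Running `run_experiment` once per application variant (for example, before and after a prompt change) on the same cases gives two aggregate scores that can be compared directly.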

Monitor your evaluations in production

To monitor evaluations in production, teams can use a pipeline to log live LLM requests including prompts, responses, and metadata such as user feedback and session ID. They can then ingest this data into a service that performs the evaluations and assigns scores or Boolean flags for each evaluation before sending it to a monitoring service where it can be rolled up into metrics.

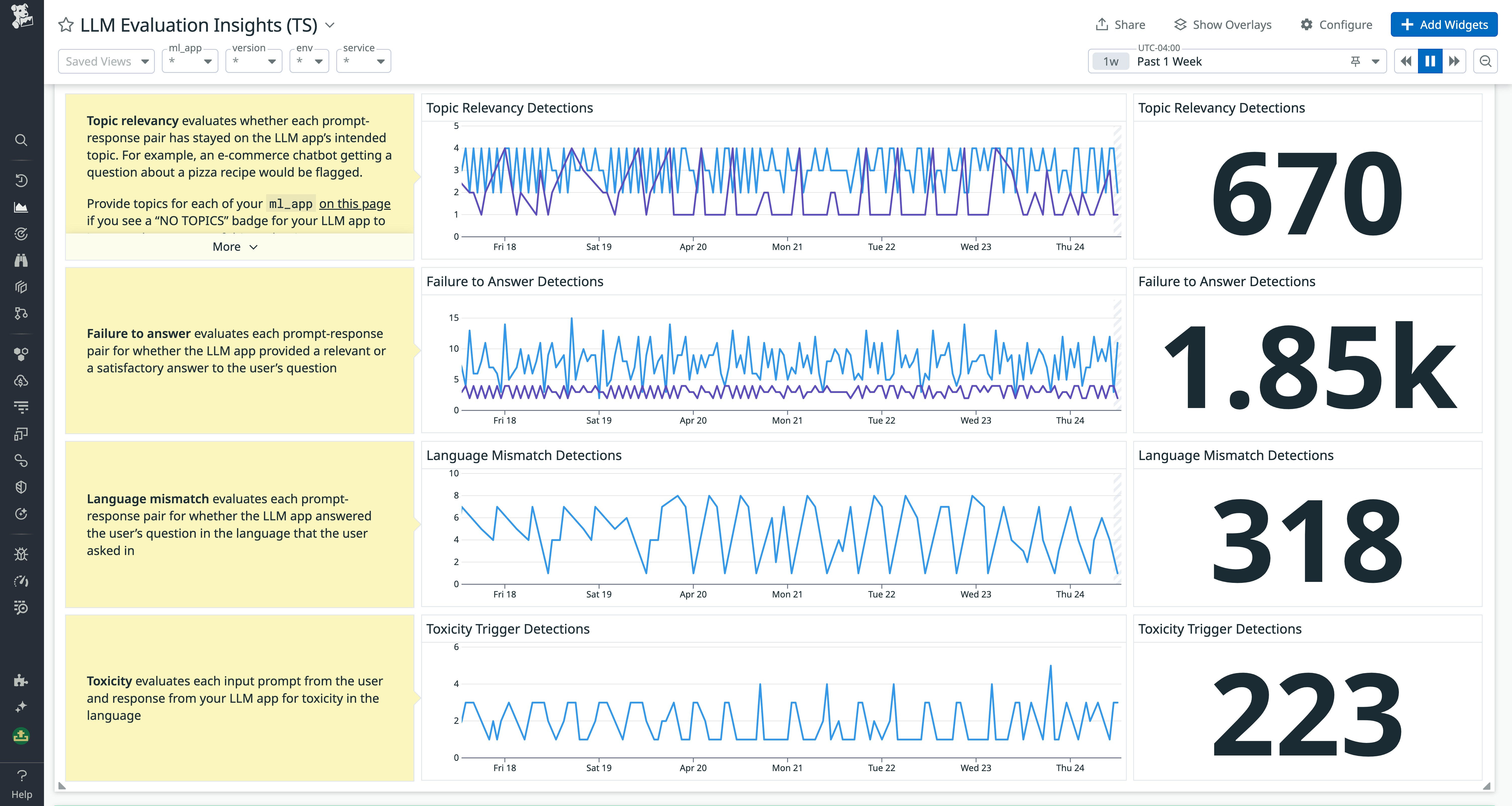
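The flow described above (log requests, evaluate them, roll the scores up into metrics) could be sketched roughly as follows. The `LLMRequestLog` record, the evaluator signature, and the mean-based roll-up are all assumptions made for illustration; a real pipeline would forward the scores to your monitoring service instead of averaging them in process.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List


@dataclass
class LLMRequestLog:
    """Hypothetical record of one logged LLM request."""
    session_id: str
    prompt: str
    response: str
    metadata: Dict[str, str] = field(default_factory=dict)


Evaluator = Callable[[LLMRequestLog], float]  # returns a score or a 0/1 flag


def evaluate_logs(
    logs: Iterable[LLMRequestLog], evaluators: Dict[str, Evaluator]
) -> Dict[str, List[float]]:
    """Apply every named evaluator to every logged request."""
    scores: Dict[str, List[float]] = defaultdict(list)
    for log in logs:
        for name, evaluator in evaluators.items():
            scores[name].append(float(evaluator(log)))
    return dict(scores)


def roll_up(scores: Dict[str, List[float]]) -> Dict[str, float]:
    """Aggregate per-request scores into one mean value per evaluation."""
    return {name: sum(values) / len(values) for name, values in scores.items() if values}
```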

By ingesting metrics for dashboards and alerts, you can use filters and visualizations to help create insights and inform investigations. A dedicated “Quality Evaluations” dashboard like the one shown below can help your team track the functional performance of your application over time and spot trends in key performance indicators (such as the “Failure to answer” evaluator shown).

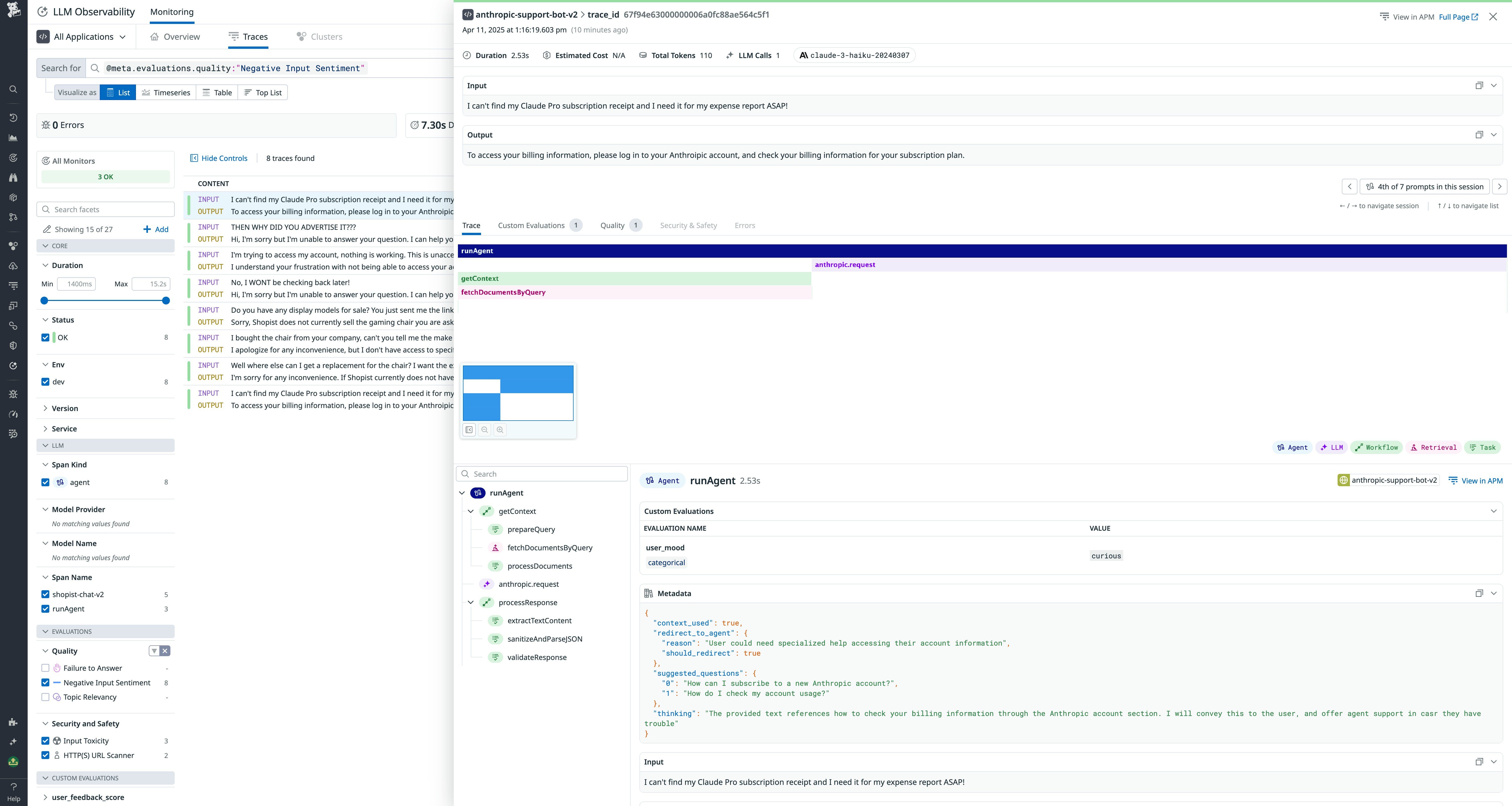

If you are instrumenting your application for distributed tracing, tagging your traces with evaluation scores can provide even more granular context for your application’s behavior. For example, the following screenshot shows the trace for a prompt that triggered a negative sentiment evaluation warning.

The trace details show the offending prompt and how the application responded. For more clues about the application’s behavior, you can dive into the agent execution and look at the system prompt, for instance, to better understand the final output.

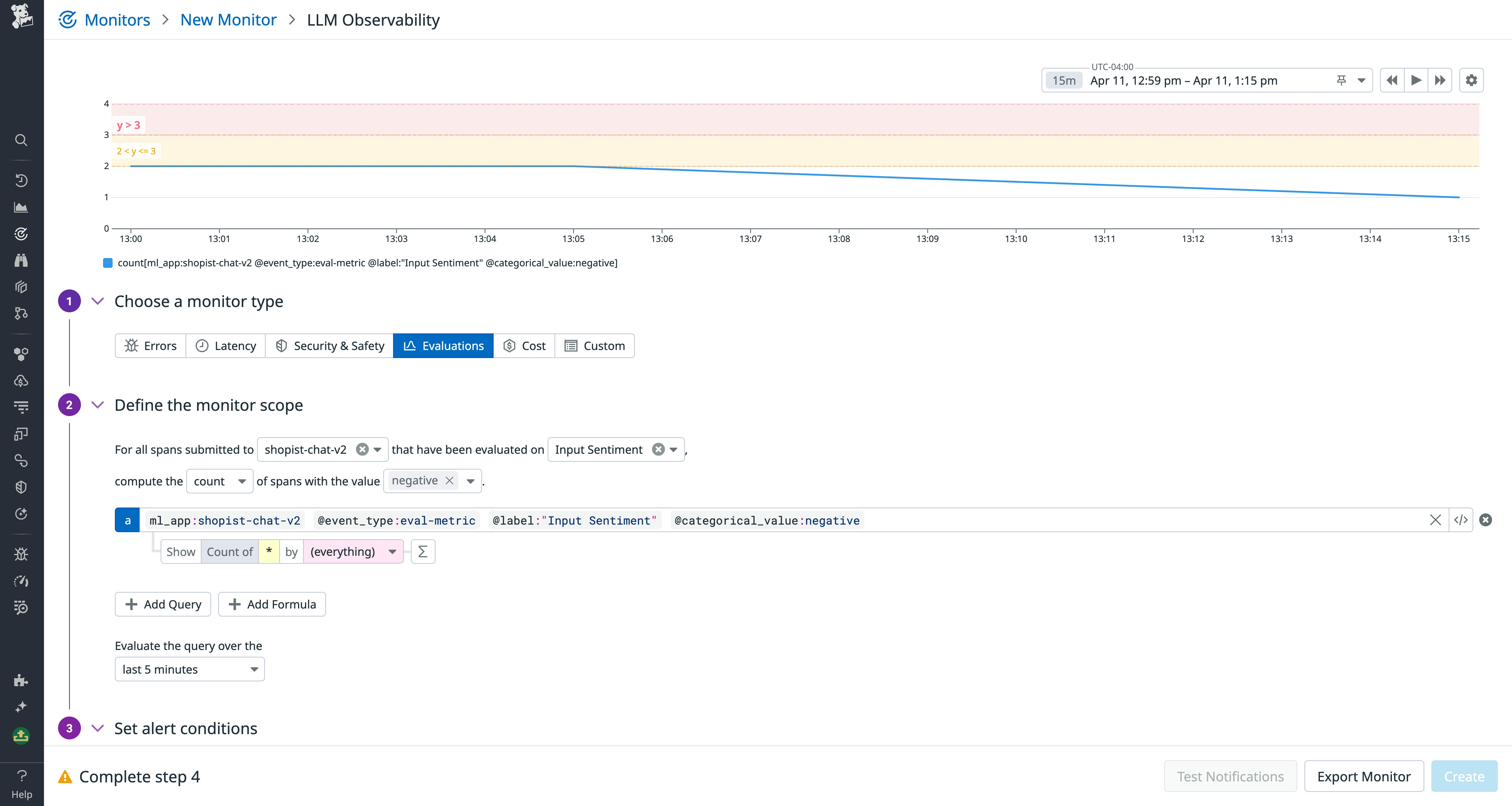

You can use monitors to alert engineers on your team when evaluations indicate pressing issues in your application, such as high rates of output toxicity or low relevancy for a newly popular query. Continuing the previous example, by setting monitors on high rates of negative sentiment in your traces, you can alert engineers when your application’s behavior is significantly deteriorating or users are becoming frustrated.
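The logic behind a rate-based monitor like this can be illustrated with a small sliding-window check. The `RateMonitor` class below is a hypothetical, in-process stand-in intended only to show the thresholding idea; it is not the API of any monitoring product, and the window size and threshold are values you would tune.

```python
from collections import deque


class RateMonitor:
    """Alert when the share of flagged requests in a sliding window exceeds a threshold."""

    def __init__(self, window: int, threshold: float) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.flags = deque(maxlen=window)  # keeps only the most recent `window` flags
        self.threshold = threshold

    def record(self, flagged: bool) -> bool:
        """Record one evaluation result and return True if an alert should fire."""
        self.flags.append(bool(flagged))
        window_full = len(self.flags) == self.flags.maxlen
        rate = sum(self.flags) / len(self.flags)
        # Assumption: stay silent until the window is full to avoid noisy early alerts.
        return window_full and rate > self.threshold
```

For example, `RateMonitor(window=100, threshold=0.2)` would fire once more than 20 percent of the last 100 requests were flagged for negative sentiment.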

Optimize your LLM application’s functional performance

By establishing evaluations for your LLM application in production, you can get continuous visibility into its performance in a number of key areas, including factual accuracy, hallucination, topic relevancy, and user experience. Without these insights, it’s difficult to fully understand your application’s functional performance or pinpoint the source of user problems.

Datadog LLM Observability lets you ingest and monitor traces with associated evaluations, so you can troubleshoot issues and analyze performance in a consolidated view alongside application health, cost, and other telemetry. For more information about LLM Observability, see our documentation. If you’re brand new to Datadog, sign up for a free trial.