Identifying and evaluating security vulnerabilities is essential at every stage of software development and system management. New vulnerabilities surface all the time, demanding ongoing vigilance as well as effective methods of assessment and response, and recent data suggests that this demand is growing. While analyses of this data indicate that the vast majority of publicly identified vulnerabilities represent less-than-critical security threats, they also reveal an alarming pattern: over the past decade, the occurrence of zero-day attacks, in which attackers exploit previously unidentified vulnerabilities (giving their targets zero days in which to remediate them), has trended steadily upwards. Vigilance and effective methods of triage are more important than ever.

In this post, we’ll guide you through some industry-defined best practices for evaluating and responding to emerging vulnerabilities. We’ll provide:

- An overview of vulnerabilities and their classification

- A guide to thorough risk assessment and effective handling of emerging vulnerabilities

Along the way, we’ll explore the uses and shortcomings of the primary benchmarks for vulnerabilities, as well as provide some tips on how Datadog can help you refine the insights those benchmarks provide.

A primer on vulnerabilities

In the most basic terms, a vulnerability is any flaw in a computer system that undermines the security of that system. Vulnerabilities often originate in and proliferate through insecure code, faulty configurations, unsecured APIs, and outdated or unpatched software. In recent years, they have permitted a variety of high-impact cyberattacks, including those enabled by the Log4Shell vulnerability in 2021, and large-scale ransomware attacks such as WannaCry and the deployment of REvil on Kaseya.

Emerging vulnerabilities are assessed in terms of their security ramifications and attack surfaces, as well as the hype or news coverage they generate. Their early identification and remediation are a constant top priority for security specialists. To facilitate and underscore the importance of actively monitoring for vulnerabilities, multiple systems have been put in place, several with federal guidance and support. These include the Common Vulnerabilities and Exposures (CVE) Program, which identifies and catalogs publicly disclosed vulnerabilities under the sponsorship of the US Department of Homeland Security Cybersecurity and Infrastructure Security Agency, and the Common Vulnerability Scoring System (CVSS), which is maintained by the Forum of Incident Response and Security Teams (FIRST), provides a system for gauging their severity, and is used by the US National Institute of Standards and Technology (NIST) to score entries in its National Vulnerability Database.

The CVSS uses a range of metrics to score the severity of vulnerabilities on a scale from 0.0 (None) to 10.0 (Critical). Scores are derived from three categories of metrics:

- Base metrics are required and measure factors that are considered constant. These factors fall into two categories: exploitability and impact. Exploitability refers to the ease with which attackers can take advantage of a vulnerability. Impact refers to potential effects on confidentiality (the exposure of sensitive data), integrity (the alteration or destruction of data), and availability (the impairment of systems or services).

- Temporal metrics are optional and measure the current status of a vulnerability, such as whether it is purely theoretical or proven, whether it has an official fix, and whether its root cause has been positively identified.

- Environmental metrics are optional and measure the vulnerability’s impact on a specific system, such as an individual organization’s network.
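To make the Base metrics concrete, here is a minimal sketch of how a Base score can be derived from a vector string. It assumes version 3.1 of the CVSS and uses the weights and rounding rule published in that specification; the function names (`base_score`, `severity`, `roundup`) are our own, and the sketch ignores Temporal and Environmental metrics entirely.

```python
import math

# Metric weights from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}   # Attack Vector
AC = {"L": 0.77, "H": 0.44}                        # Attack Complexity
UI = {"N": 0.85, "R": 0.62}                        # User Interaction
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}             # Confidentiality, Integrity, Availability
# Privileges Required is weighted differently when Scope is changed ("C").
PR = {
    "U": {"N": 0.85, "L": 0.62, "H": 0.27},
    "C": {"N": 0.85, "L": 0.68, "H": 0.5},
}


def roundup(value: float) -> float:
    """Round up to one decimal place using the v3.1 integer-based rule."""
    as_int = round(value * 100000)
    if as_int % 10000 == 0:
        return as_int / 100000.0
    return (math.floor(as_int / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the Base score for a vector like 'CVSS:3.1/AV:N/AC:L/...'."""
    # Skip the 'CVSS:3.1' prefix and read the remaining 'KEY:VALUE' pairs.
    m = dict(part.split(":") for part in vector.split("/")[1:])
    scope = m["S"]
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if scope == "U":
        impact = 6.42 * iss
    else:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * PR[scope][m["PR"]] * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if scope == "C":
        total *= 1.08
    return roundup(min(total, 10))


def severity(score: float) -> str:
    """Map a score to the qualitative rating scale (None to Critical)."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))  # 9.8
print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:H/A:H"))  # 10.0
```

The two vectors differ only in Scope, which shows how a single Base metric can move a score from 9.8 to the maximum of 10.0.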

Identify emerging vulnerabilities and enrich their scoring

When it comes to vulnerabilities, the primary task of security teams is to detect them as early as possible. To stay informed, it’s essential to continually monitor the CVE list and the NIST National Vulnerability Database (NVD) for vulnerabilities in components used within your organization and its products and services. Awareness of a vulnerability’s specific implications for your systems and users is key, which is one of the major reasons that CVSS Base scores demand enrichment.

To stay ahead of emerging vulnerabilities, it’s important to make continual use of CVE and CVSS data while recognizing their limitations. These are indispensable tools, but not comprehensive solutions: the severity of vulnerabilities is often determined by factors they underrate or overlook. These factors are not limited to Environmental metrics for specific systems; just as decisive are Temporal metrics such as the existence (or nonexistence) of proof-of-concept code. Other factors, such as hype or “trendiness,” may be subjective or difficult to quantify but are nonetheless decisive. Additionally, CVSS Base metrics do not directly measure whether vulnerabilities permit remote code execution (RCE) attacks, in which attackers are able to execute arbitrary code via a remote connection to vulnerable components, even though the potential for RCE is a major determinant of severity.

Given these limitations, it’s important to take additional steps to build upon the context provided by these systems in assessing how—and with how much urgency—to handle vulnerabilities. We’ll discuss those steps next.

Establish a Base score and evaluate your attack surface

Once you’ve identified an emerging vulnerability, determine its Base score and assess your attack surface: the scope of the vulnerability within your own systems. Base scores can be found under vulnerabilities’ CVE listings (along with detailed summaries of affected components, potential impacts, and more), or computed using the CVSS calculator. But until you’ve sussed out your attack surface, the scope of the vulnerability’s potential impact, and any existing safeguards against it, this score remains purely theoretical.

Code scanning, software bills of materials (SBOMs), and tools such as Datadog’s Service Catalog can be instrumental in determining your attack surface. Code scanners, such as the one provided by GitHub, analyze your code and flag potential vulnerabilities, while SBOMs help you keep track of the components of your systems and services. Datadog’s Service Catalog provides a centralized point of reference for your organization’s services, pointing you to any known vulnerabilities detected in their libraries alongside a host of other information. It’s also important to check whether you already have relevant mitigations in place, such as threat models or runbooks.
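As a rough illustration of how SBOMs can help scope an attack surface, the sketch below checks a set of per-service SBOMs for a vulnerable version range of one package. It assumes each SBOM is a dictionary with a CycloneDX-style `components` list of `name` and `version` entries; the package name `example-logger`, the service names, and the version range are hypothetical, and the version parsing is deliberately naive (numeric, dot-separated versions only).

```python
import re


def parse_version(version):
    """Turn '2.14.1' into (2, 14, 1, 0); drops suffixes such as '-beta9'."""
    core = version.split("-")[0]
    nums = [int(n) for n in re.findall(r"\d+", core)]
    # Pad to four parts so that '2.15' and '2.15.0' compare as equal.
    return tuple(nums + [0] * (4 - len(nums)))


def affected_services(sboms, package, introduced, fixed):
    """Return {service: [vulnerable versions]} for introduced <= version < fixed."""
    low, high = parse_version(introduced), parse_version(fixed)
    hits = {}
    for service, sbom in sboms.items():
        versions = [
            component.get("version", "")
            for component in sbom.get("components", [])
            if component.get("name") == package
            and low <= parse_version(component.get("version", "")) < high
        ]
        if versions:
            hits[service] = versions
    return hits


# Hypothetical SBOMs for two services.
sboms = {
    "checkout": {"components": [{"name": "example-logger", "version": "2.14.1"}]},
    "search": {"components": [{"name": "example-logger", "version": "2.17.0"}]},
}
print(affected_services(sboms, "example-logger", "2.0", "2.15.0"))
# {'checkout': ['2.14.1']}
```

In practice, the affected range should come from the vulnerability’s CVE listing, and a real implementation would need a version parser suited to each package ecosystem.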

Assess the hype and search for proof-of-concept code

Once you’ve determined a Base score and gauged your attack surface, pinpoint the current status of the vulnerability to the extent that you can. Consult social media and GitHub to assess “trendiness,” discover any existing approaches to remediation, and determine whether proof-of-concept code is in circulation.

The CVSS only optionally takes the existence of proof-of-concept code into account, meaning that CVSS scores don’t necessarily reflect the immediate, real-world viability of exploits. Furthermore, proof-of-concept code may reveal that a vulnerability permits RCE, which is a major determinant of severity and one that the CVSS may or may not reflect.

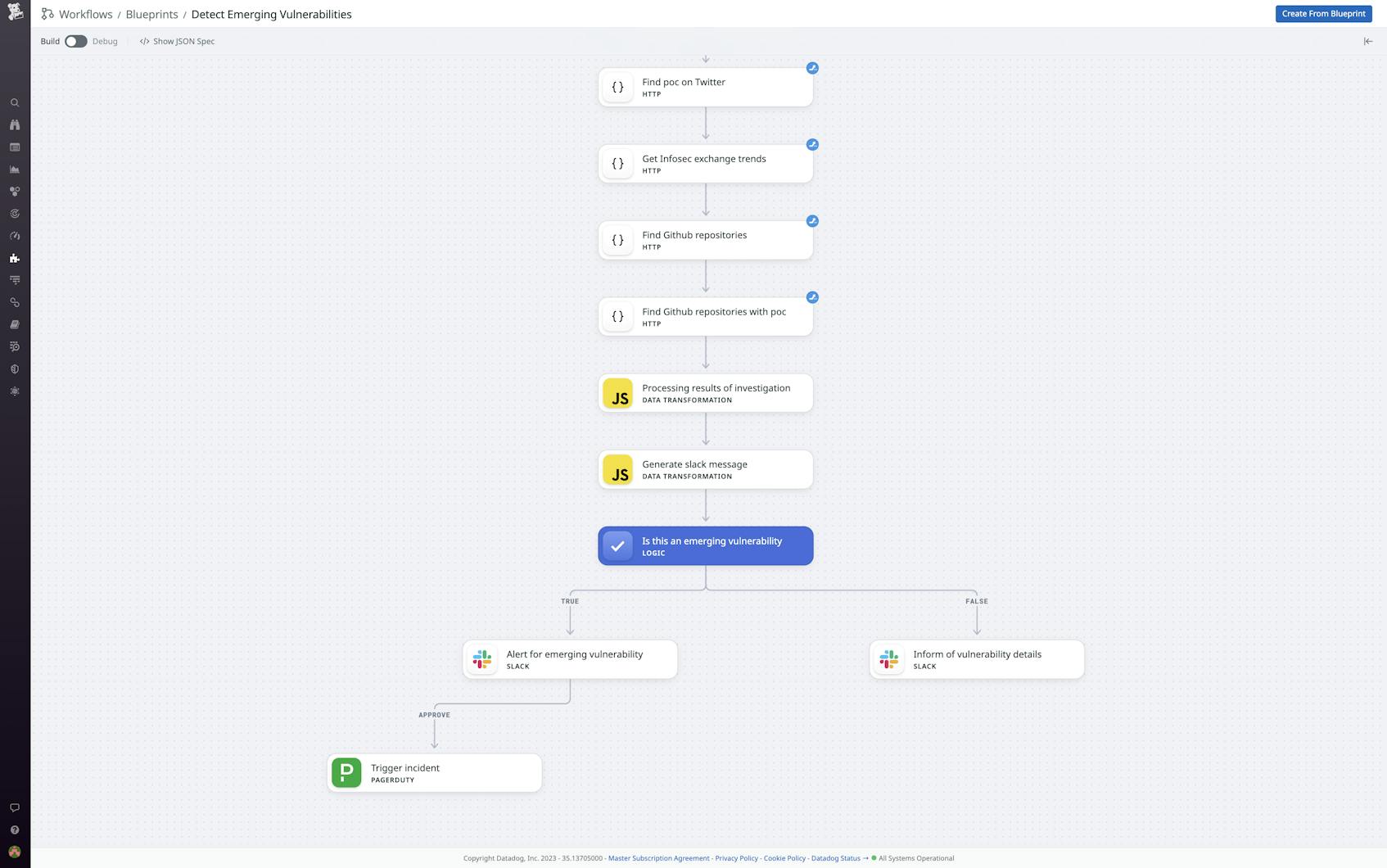

One factor that the CVSS certainly doesn’t reflect is trendiness. If a vulnerability is generating “buzz” in infosec circles or getting news coverage, that may be an important index of urgency, though one that is difficult to quantify. Datadog Workflows can help you systematically assess the status of a vulnerability in terms of both its trendiness and current exploitability. Our Workflows blueprint for emerging vulnerabilities takes a CVE number or popular name (such as “Log4Shell”) as input and queries Twitter, the Infosec Exchange Mastodon community, and GitHub in order to measure the number of posts per day and the number of repositories referencing a vulnerability, as well as to help determine its zero-day status and identify potential proof-of-concept code. When provided a CVE number, the Workflow will also scan the vulnerability’s CVE listing for mentions of an exploit and details of the impacted versions of vulnerable software.
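The kind of measurement described above can be sketched in a few lines. This is not the blueprint’s actual logic: it assumes you have already collected post timestamps and repository metadata from whatever sources you query, and the threshold of 50 posts per day and the keyword list are arbitrary placeholders.

```python
from collections import Counter
from datetime import datetime, timezone

# Hypothetical keywords that hint at proof-of-concept code.
POC_HINTS = ("poc", "proof of concept", "proof-of-concept", "exploit")


def posts_per_day(timestamps):
    """Count posts per UTC calendar day from timezone-aware datetimes."""
    counts = Counter(
        ts.astimezone(timezone.utc).date().isoformat() for ts in timestamps
    )
    return dict(sorted(counts.items()))


def is_trending(timestamps, min_posts_per_day=50):
    """Treat a vulnerability as trending if any single day reaches the threshold."""
    return any(
        count >= min_posts_per_day for count in posts_per_day(timestamps).values()
    )


def possible_poc_repos(repos):
    """Return names of repositories whose name or description mentions a PoC hint."""
    matches = []
    for repo in repos:
        text = f"{repo['name']} {repo.get('description') or ''}".lower()
        if any(hint in text for hint in POC_HINTS):
            matches.append(repo["name"])
    return matches


posts = [datetime(2021, 12, 10, hour, tzinfo=timezone.utc) for hour in range(3)]
print(posts_per_day(posts))                     # {'2021-12-10': 3}
print(is_trending(posts, min_posts_per_day=3))  # True
```

Keyword matching will produce false positives (for example, repositories that only document mitigations), so results like these are best treated as leads for a human reviewer.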

Compute an enriched score and determine next steps

Knowledge of your attack surface, exploitability, “trendiness,” and any existing remediations enables you to score vulnerabilities in terms of the specific risks they pose to your organization and users.

Datadog Workflows enables you to automate (and drastically expedite) the acquisition and analysis of this data. Using the results of its queries to Twitter, Mastodon, and GitHub, Datadog’s internal Workflow for emerging vulnerabilities analyzes the prevalence of vulnerable components in our own systems and products, the availability of proof-of-concept code and whether it indicates that the vulnerability permits RCE, whether a vulnerability is trending on social media or GitHub, and whether the buzz around it indicates that it is a zero-day. Based on these factors, the Workflow yields an enriched score. For example, it sets an initial score of 2.0 given a CVSS Base score of 9.0 or higher, adds another two points if it detects proof-of-concept code, another two if it is trending, and so on.
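The scoring rules in that example can be sketched as follows. Only the three rules stated above are encoded; the starting value of 0.0 for Base scores below 9.0 is our assumption, and `extra_points` is a hypothetical placeholder for the remaining factors (such as RCE, zero-day status, or prevalence in your own systems), whose weights are not given here.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    cvss_base: float
    poc_available: bool = False
    trending: bool = False
    # Hypothetical: points contributed by any other factors you choose to weigh.
    extra_points: float = 0.0


def enriched_score(signals: Signals) -> float:
    """Combine the CVSS Base score with real-world signals into one number."""
    # Stated rule: start at 2.0 when the Base score is 9.0 or higher.
    # Assumption: start at 0.0 otherwise.
    score = 2.0 if signals.cvss_base >= 9.0 else 0.0
    if signals.poc_available:
        score += 2.0  # stated rule: proof-of-concept code detected
    if signals.trending:
        score += 2.0  # stated rule: vulnerability is trending
    return score + signals.extra_points


print(enriched_score(Signals(cvss_base=9.8, poc_available=True, trending=True)))  # 6.0
print(enriched_score(Signals(cvss_base=7.5)))                                     # 0.0
```

An additive scheme like this keeps each factor’s contribution easy to audit, which matters when the score decides whether someone gets paged.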

Stay prepared for swift remediation

Once you’ve evaluated an emerging vulnerability, your emergency playbook should clearly outline your next steps. If the vulnerability is a credible threat, flag it as an incident and initiate a response. To help expedite this stage of the process, our Workflows blueprint measures a vulnerability’s enriched score against an established threshold. If that threshold is crossed, it triggers an alert that includes information on the vulnerability drawn from the CVE and indicates the action required. At Datadog, we use this Workflow to page dedicated emergency responders via Slack, including detailed information on the vulnerability in the alert and allowing them to create an incident at the click of a button, if necessary.
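The threshold check itself is simple to express. In the sketch below, the threshold of 6.0, the field names, and the alert wording are all hypothetical; delivering the alert to Slack or a paging tool is left out, since that depends on your own integrations.

```python
def build_alert(cve_id, enriched_score, summary, threshold=6.0):
    """Return an alert payload if the score meets the threshold, else None."""
    if enriched_score < threshold:
        return None
    return {
        "title": f"Emerging vulnerability {cve_id} needs review",
        "score": enriched_score,
        "summary": summary,  # details drawn from the vulnerability's CVE listing
        "action": "Assess impact and declare an incident if warranted",
    }


alert = build_alert("CVE-0000-0000", 6.0, "Placeholder summary from the CVE listing")
if alert is not None:
    print(alert["title"])  # Emerging vulnerability CVE-0000-0000 needs review
```

Returning `None` below the threshold lets the caller decide whether to log the vulnerability quietly or ignore it.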

The process of remediation will inevitably vary from organization to organization. To ensure round-the-clock coverage, many organizations appoint multiple incident commanders. Datadog Incident Management, in conjunction with the Datadog Slack and mobile apps, provides resources for coordinated incident response, triage, centralized alerts, delegation of remediation tasks, and incident tracking.

Finally, share your findings from your remediation process as necessary in order to notify affected parties and, potentially, expedite remediation of the vulnerability even beyond the scope of your own systems. The final step of Datadog’s remediation process for major vulnerabilities is to publish our findings from the process along with new out-of-the-box detection rules for our customers.

Ensure effective handling of emerging vulnerabilities

In this post, we’ve examined established best practices for evaluating and responding to emerging vulnerabilities. We’ve also examined some of the ways in which Datadog Workflows—as well as other tools such as Service Catalog and Incident Management—can help you streamline this process and improve collaboration among teams.

To get started with Workflows, you can use our documentation. If you’re new to Datadog, sign up for a 14-day free trial.