Othmane Abou-Amal

Emaad Khwaja

Ben Cohen

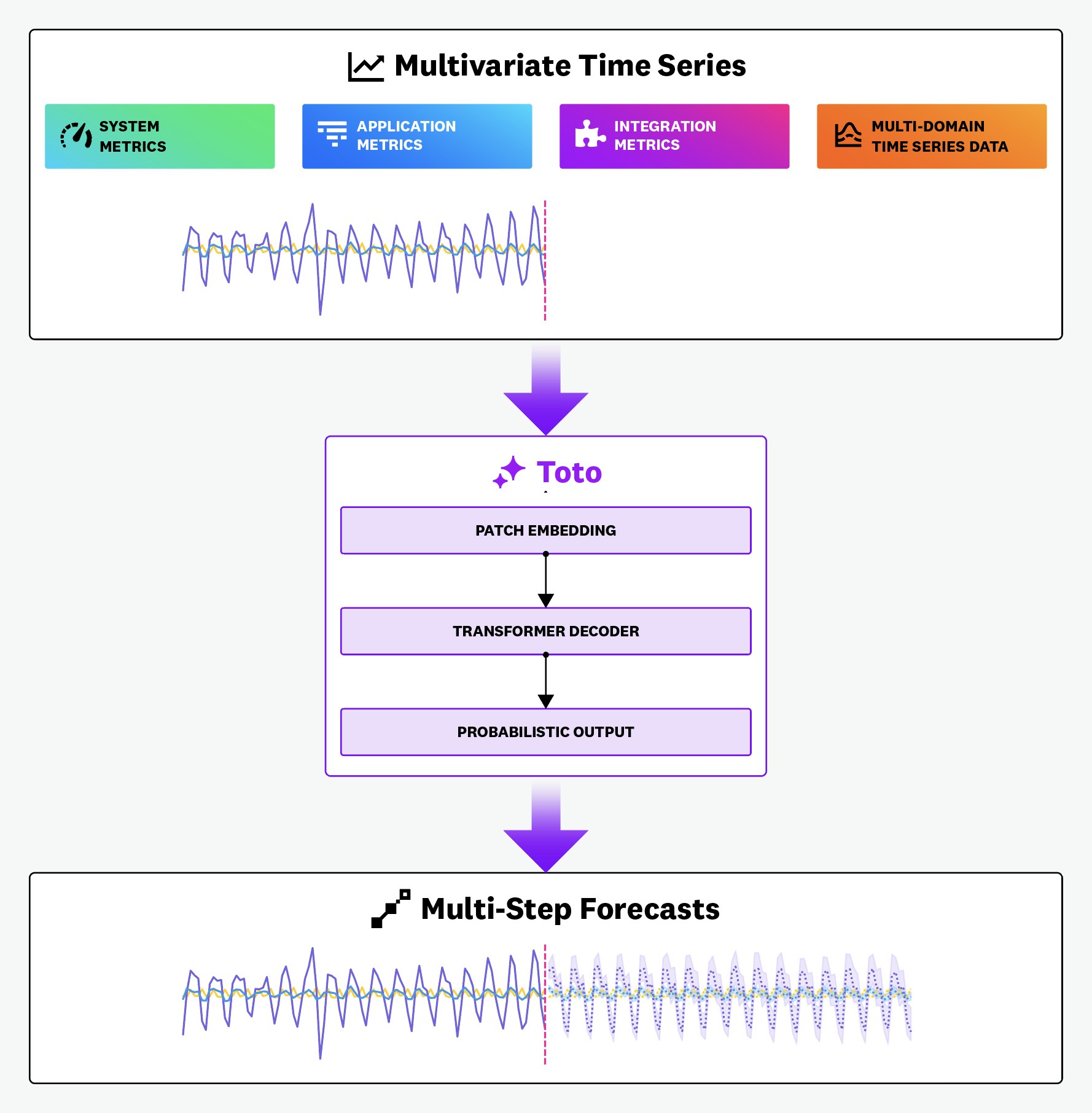

Foundation models, or large AI models, are the driving force behind the advancement of generative AI applications that cover an ever-growing list of use cases including chatbots, code completion, autonomous agents, image generation and more. However, when it comes to understanding observability metrics, current large language models (LLMs) are not optimal. LLMs are typically trained on text, images, and/or audiovisual data and do not natively understand structured data modalities such as time series metrics. In order to effectively process, analyze, and make predictions on the numerical time series data most relevant to monitoring, a foundation model needs to:

- Accurately identify and predict trends and seasonality patterns to detect anomalies, which requires processing a long historical window

- Be trained on data at a very high frequency, on the scale of seconds or minutes

- Parse data that represents very high-cardinality and dynamic groups, such as ephemeral infrastructure

Dedicated foundation models for time series and other structured data have the potential to complement and augment the reasoning and natural-language capabilities of general-purpose LLMs. Existing examples such as Salesforce’s Moirai or Google’s TimesFM achieve excellent performance on time series forecasting. However, in our testing they struggle to generalize effectively to observability data, which have characteristics that make them especially challenging to forecast (e.g., high time resolution, sparsity, extreme right skew, historical anomalies).

To overcome this, we developed Time Series Optimized Transformer for Observability (Toto), a state-of-the-art time series forecasting foundation model optimized for observability data, built by Datadog. Toto achieves top performance on several open time series benchmarks, consistently outperforming existing models in key accuracy metrics. By excelling in these measures, Toto ensures reliable and precise forecasting capabilities, advancing the field of time series analysis.

A state-of-the-art foundation model for time series forecasting

Toto is trained on nearly a trillion data points, by far the largest dataset among all currently published time series models. This dataset includes a collection of 750 billion fully anonymous numerical metric data points from the Datadog platform, as well as time series datasets from the Large-scale Open Time Series Archive (LOTSA). LOTSA compiles publicly available time series datasets, which helps make our model generalizable to other time series domains.

What makes a foundation model different from a traditional time series model?

Traditional time series models have to be trained individually for each metric that users want to analyze. This works well, but it is challenging to scale this process to observability data, where we need to analyze a vast number of individual metrics in near real time. This scaling limitation has hindered the adoption of deep learning–based methods for time series analysis.
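To make the scaling problem concrete, here is a minimal sketch of the per-metric workflow. It uses a simple AR(1) model as a stand-in for a traditional forecaster. The metric names and values are hypothetical, and this is not a description of any model Datadog runs. The point is only that every metric needs its own fit before it can be forecast.

```python
from typing import Dict, List


def fit_ar1(series: List[float]) -> float:
    """Least-squares AR(1) coefficient phi for x[t] ~ phi * x[t-1] (no intercept)."""
    num = sum(prev * cur for prev, cur in zip(series, series[1:]))
    den = sum(prev * prev for prev in series[:-1])
    return num / den if den else 0.0


def forecast_ar1(phi: float, last_value: float, horizon: int) -> List[float]:
    """Roll the fitted AR(1) model forward for `horizon` steps."""
    forecast = []
    value = last_value
    for _ in range(horizon):
        value = phi * value
        forecast.append(value)
    return forecast


# Hypothetical metrics: in the traditional setup, each one gets its own model.
metrics: Dict[str, List[float]] = {
    "cpu.user": [1.0, 2.0, 4.0, 8.0],
    "mem.used": [8.0, 4.0, 2.0, 1.0],
}

# One fit per metric; this loop is what becomes expensive at observability scale.
models = {name: fit_ar1(values) for name, values in metrics.items()}
forecasts = {
    name: forecast_ar1(models[name], metrics[name][-1], horizon=2)
    for name in metrics
}
```

With two metrics the loop is trivial, but the fitting cost grows with the number of series and has to be repeated whenever a series appears or changes.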

Large foundation models for time series are a recent development made possible by the same advances powering large language models. Unlike traditional methods, these are not trained on any one time series or domain. Rather, they are trained on a massive dataset of diverse time series data, meaning they have observed trends across many datasets. As a result, they can make “zero-shot” predictions on new time series inputs that were not seen in their training data, while matching or even exceeding the state-of-the-art performance of full-shot models that were trained on the individual target datasets.

While several time series foundation models have been released in the past year, ours is the first one to include a significant quantity of observability data in its pre-training corpus.

Performance

Datadog’s foundation model for time series matches or beats the state of the art for zero-shot forecasting on standard time series benchmark datasets. In addition, it significantly improves on the state of the art for forecasting observability metrics.

Public benchmarks

To assess general-purpose time series forecasting performance, we use the popular Long Sequence Forecasting repository to benchmark the ETTh1, ETTh2, ETTm1, ETTm2, Electricity, and Weather datasets. We evaluate with forecast lengths of 96, 192, 336, and 720 time steps, in sliding windows with stride 512, and average the results. Following standard practice, we report normalized MAE and MSE in order to be able to compare performance across different datasets.
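The evaluation loop described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the benchmark repository's code: we assume "normalized" means z-scoring the series before scoring, the context length is a hypothetical parameter (the text does not state one), and `last_value_forecaster` is a placeholder for a real model.

```python
from statistics import mean, pstdev
from typing import Callable, List, Sequence, Tuple

Forecaster = Callable[[Sequence[float], int], List[float]]


def last_value_forecaster(context: Sequence[float], horizon: int) -> List[float]:
    """Placeholder model: repeat the last observed value."""
    return [context[-1]] * horizon


def evaluate(series: Sequence[float], forecaster: Forecaster,
             context_length: int, horizon: int, stride: int = 512) -> Tuple[float, float]:
    """Return (MAE, MSE) averaged over sliding evaluation windows."""
    # Assumption: normalization is a z-score over the whole series.
    mu = mean(series)
    sigma = pstdev(series) or 1.0  # avoid dividing by zero on constant series
    z = [(x - mu) / sigma for x in series]

    abs_errors: List[float] = []
    sq_errors: List[float] = []
    last_start = len(z) - context_length - horizon
    for start in range(0, last_start + 1, stride):
        split = start + context_length
        context, target = z[start:split], z[split:split + horizon]
        prediction = forecaster(context, horizon)
        for y_hat, y in zip(prediction, target):
            abs_errors.append(abs(y_hat - y))
            sq_errors.append((y_hat - y) ** 2)
    if not abs_errors:
        raise ValueError("series is too short for one evaluation window")
    return mean(abs_errors), mean(sq_errors)


def evaluate_all_horizons(series: Sequence[float], forecaster: Forecaster,
                          context_length: int,
                          horizons: Sequence[int] = (96, 192, 336, 720),
                          stride: int = 512) -> Tuple[float, float]:
    """Average MAE and MSE across the forecast lengths used in the text."""
    results = [evaluate(series, forecaster, context_length, h, stride) for h in horizons]
    return mean(r[0] for r in results), mean(r[1] for r in results)
```

Swapping `last_value_forecaster` for a call into an actual model is the only change needed to score that model with the same windows.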

We compared Toto’s average performance with that of other recent zero-shot foundation models and full-shot time series models:

| Metric | Toto | MoiraiSmall | MoiraiBase | MoiraiLarge | iTransformer | TimesNet | PatchTST | Crossformer | TiDE | DLinear | SCINet | FEDformer |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Setting | Zero shot | Zero shot | Zero shot | Zero shot | Full shot | Full shot | Full shot | Full shot | Full shot | Full shot | Full shot | Full shot |
| Mean MAE | 0.312 | 0.357 | 0.341 | 0.350 | 0.357 | 0.378 | 0.362 | 0.493 | 0.424 | 0.399 | 0.519 | 0.400 |
| Mean MSE | 0.265 | 0.328 | 0.315 | 0.330 | 0.328 | 0.336 | 0.333 | 0.541 | 0.409 | 0.374 | 0.533 | 0.359 |

Datadog observability benchmark

We constructed a benchmark of anonymized Datadog data to measure performance across different observability metrics. In order to obtain a representative and realistic sample of the types of time series found in observability data, we sampled based on quality and relevance signals from metrics usage in dashboards, monitors, and notebooks. The benchmark consists of 983,994 data points from 82 distinct multivariate time series with 1,122 total individual series.

We tested Toto alongside other foundation models. Because observability data can have extreme variation in both magnitude and dispersion, we select symmetric mean absolute percentage error (sMAPE) as a scale-invariant performance metric. We also report symmetric median absolute percentage error (sMdAPE), a robust version of sMAPE that minimizes the influence of the extreme outliers present in observability data. With the lowest sMAPE of 0.672 and sMdAPE of 0.318, Toto proves to be the most accurate for forecasting observability time series data.

| Datadog observability benchmark | Toto | Chronos-T5Tiny | Chronos-T5Mini | Chronos-T5Small | Chronos-T5Base | Chronos-T5Large | MoiraiSmall | MoiraiBase | MoiraiLarge | TimesFM |
|---|---|---|---|---|---|---|---|---|---|---|
| sMAPE | 0.672 | 0.809 | 0.788 | 0.800 | 0.796 | 0.805 | 0.808 | 0.742 | 0.736 | 1.246 |
| sMdAPE | 0.318 | 0.406 | 0.391 | 0.401 | 0.393 | 0.396 | 0.418 | 0.370 | 0.365 | 0.639 |
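For readers unfamiliar with the two metrics reported above, here is a minimal sketch of how they are commonly computed. We assume the variant of sMAPE that ranges from 0 to 2, which is consistent with reported values above 1. Treating a point where both the actual and predicted values are zero as zero error is our own convention for this sketch; the exact definition used in the evaluation is given in the technical report.

```python
from statistics import mean, median
from typing import List, Sequence


def symmetric_ape(actual: Sequence[float], predicted: Sequence[float]) -> List[float]:
    """Per-point symmetric absolute percentage error, each term in [0, 2]."""
    terms = []
    for y, y_hat in zip(actual, predicted):
        denom = abs(y) + abs(y_hat)
        # Assumption: a 0/0 point counts as a perfect prediction.
        terms.append(0.0 if denom == 0 else 2.0 * abs(y - y_hat) / denom)
    return terms


def smape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean of the per-point errors; sensitive to extreme misses."""
    return mean(symmetric_ape(actual, predicted))


def smdape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Median of the per-point errors; robust to a few extreme misses."""
    return median(symmetric_ape(actual, predicted))


# Hypothetical data: three exact predictions and one badly missed spike.
actual = [10.0, 20.0, 30.0, 1000.0]
predicted = [10.0, 20.0, 30.0, 0.0]

print(smape(actual, predicted))   # 0.5
print(smdape(actual, predicted))  # 0.0
```

Multiplying both series by any positive constant leaves both scores unchanged, which is what makes them comparable across metrics with very different magnitudes. The single missed spike moves sMAPE but not sMdAPE, which is why the median variant is reported alongside the mean.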

On average, Toto outperforms the other zero-shot foundation models and the full-shot models on the open benchmarks, and it outperforms every other foundation model we tested on Datadog’s observability benchmark. You can find more details on the model and our evaluation approach in this technical report.

What this means for our customers

Our research on Toto is aimed at improving the AI, ML, anomaly detection, and forecasting algorithms already in use within the Datadog platform and powering products such as Watchdog and Bits AI.

Toto is still early in its development and isn’t currently deployed in any production systems. We have identified several potential improvements and are currently focused on thorough testing and product integration.

With models such as Toto, we are looking forward to delivering new capabilities and improving our existing ones, detecting and predicting more of your issues before they lead to customer-facing impact, and ultimately allowing you to automate more of your incident resolution.