Bowen Chen

Bryan Lee

Flaky tests are bad—this is a fact implicitly understood by developers, platform and DevOps engineers, and SREs alike. When tests flake (i.e., generate conflicting results across test runs, without any changes to the code or test), they can arbitrarily fail builds, requiring developers to re-run the test or the full pipeline. This process can take hours—especially for large or monolithic repositories—and slow down the software delivery cycle.

However, the cost of letting flaky tests permeate your code base can compound even beyond pipeline slowdowns and occasional retries. Flaky tests require time and effort to fix, erode developer trust in testing and CI, and contribute to developer frustration, which ultimately harms job satisfaction.

In this post, we’ll cover how flakiness affects different testing costs, the consequences of eroding developer trust, and some tools and best practices to help you combat flakiness in your systems.

How flakiness compounds the costs of testing

Developers test their code to ensure that the changes they introduce meet standards for performance, reliability, and intended behavior. Today, it’s difficult to imagine developing code without robust testing. However, implementing and maintaining tests carries associated costs—and flakiness compounds these costs.

Creation cost refers to the time and effort required for a developer on your team to write tests and ensure that your system is testable. When your tests flake, you need to investigate whether the flakiness results from the test code or an issue with the system itself, such as unstable test environments or overlooked race conditions in your application code.

Execution cost consists of the time it takes to run your tests and the resources spent to ensure that your CI runs smoothly. Running your test suite can take a very long time, particularly for monolithic repositories. If a flaky test fails your build, you’ll need to retry and do it all over again. Time is a valuable resource—the time developers spend retrying their build is time spent away from development, which slows down delivery cycles.

In order to maintain a healthy CI infrastructure that scales with your product, your organization may need to invest in a dedicated platform team, which also adds overhead costs.

Fixing cost refers to the time spent debugging flaky tests so that they no longer flake, or at least flake far less often.

Let’s say you encounter a flaky test that fails your build and make a mental note to investigate and fix it. However, once you retry your build and it passes, you don’t return to fix the test—despite knowing that the test will likely flake again in the future.

This scenario is not uncommon for developers. Fixing flaky tests often requires deep knowledge of the code base and/or test, and it can take away hours from your workweek—often with varying or uncertain results. It can be complex to pinpoint the cause of flakiness, especially if your testing environment relies on multiple dependencies and you lack the necessary tools and visibility needed to direct your investigation. These factors can ultimately deter developers from addressing their flaky tests, as they feel that the fixing cost is too high relative to the payoff.

Psychological cost refers to the mental toll flakiness takes on a developer’s belief in testing, CI, the code that they own, and their own responsibilities as a developer.

If developers begin to feel that the fixing cost is too high to act upon when encountering a flaky test, it not only compounds execution costs (since the test will continue to flake and fail builds) but also psychological costs. In the next section, we’ll discuss how these psychological costs can erode developer trust in testing—and in their work as a whole—over time.

The consequences of eroding developer trust

As developers, we understand that software testing should serve as a representative measure of the correctness and performance of code. If CI is passing, developers should have high confidence that their change will not break anything after their PR is merged. Conversely, if CI is failing, developers should have high confidence that something is broken in their code that requires troubleshooting.

When flaky tests are left to grow unchecked in your repositories, CI no longer functions as a reliable indicator of whether the tested code is correct and performant. This is an obvious problem, since CI is not fulfilling its intended goals, and it can create even deeper negative impacts.

If a commit fails on a known flaky test, it can become a habit for developers to retry the test until the commit passes. At this point, the test is no longer a tool to verify the integrity of the code; it’s a pain point hindering the delivery cycle. This leads to the erosion of a developer’s trust in testing and CI. If nothing changes, this lack of trust can trickle up and erode their trust in their team and organization, and ultimately their job satisfaction.

Tests validate code; code is built by developers; developers form teams; teams make the organization. Over time, a developer’s view may shift from one that acknowledges the occasional occurrence of flaky tests to one where they expect flaky tests. This reinforces the mindset of a low-velocity, underperforming development environment—the opposite of what most developers aspire to take part in.

Tools and best practices to address flakiness

Because flaky tests can compound different testing costs, it’s clear they’re often more damaging than developers may initially think. So how do we go beyond fixing a single flaky test to addressing the issue at scale?

Before we dive into potential solutions, there are two main considerations to keep in mind:

- Flakiness is inevitable: Regardless of how many best practices for software testing you choose to implement, you cannot completely eliminate flakiness. Even if your services verge on 100 percent stability, the chance that your system flakes compounds relative to the number of dependencies. For microservice architectures with dozens of dependencies, this will always amount to a nontrivial chance that a test flakes following a commit.

- Combating flakiness requires systems-level change: An individual engineer or a single team alone can’t address the flaky test problem in its entirety. Individuals can do their best to fix known flaky tests, but without the proper systems in place, these efforts are likely to prove unsustainable at scale and may not drive similar action across your organization.
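
To make the compounding effect of dependencies concrete, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that every dependency behaves correctly on a given run with the same independent probability. Real dependencies are neither identical nor independent, and the function name and the 99.9 percent figure are hypothetical.

```python
def chance_of_flake(per_dependency_stability: float, dependencies: int) -> float:
    """Probability that at least one dependency misbehaves during a test run.

    Assumes all dependencies are independent and equally stable, which is a
    simplification made only for this illustration.
    """
    if not 0.0 <= per_dependency_stability <= 1.0:
        raise ValueError("stability must be between 0 and 1")
    if dependencies < 0:
        raise ValueError("dependencies must be non-negative")
    # The run is clean only if every dependency is clean at the same time.
    return 1.0 - per_dependency_stability ** dependencies


if __name__ == "__main__":
    for deps in (1, 10, 30):
        rate = chance_of_flake(0.999, deps)
        print(f"{deps:>2} dependencies at 99.9% stability: {rate:.1%} chance of a flake")
```

Under these assumptions, 30 dependencies that are each 99.9 percent stable already give roughly a 3 percent chance that any single run hits a flake, which adds up quickly across the many runs a busy repository triggers each day.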

Reacting to each flaky test you encounter can help you improve the issue at hand. However, if you want your CI to be more resilient to flakiness and not deteriorate over time, you’ll need to create workflows and implement tooling to help developers detect flaky tests, re-run them when they flake, and track their prevalence across your codebase. In this section, we’ll discuss some of these tools and best practices that can help you maintain healthy CI and quickly deal with tests when they flake.

Detect flaky tests sooner with automatic flaky test detection

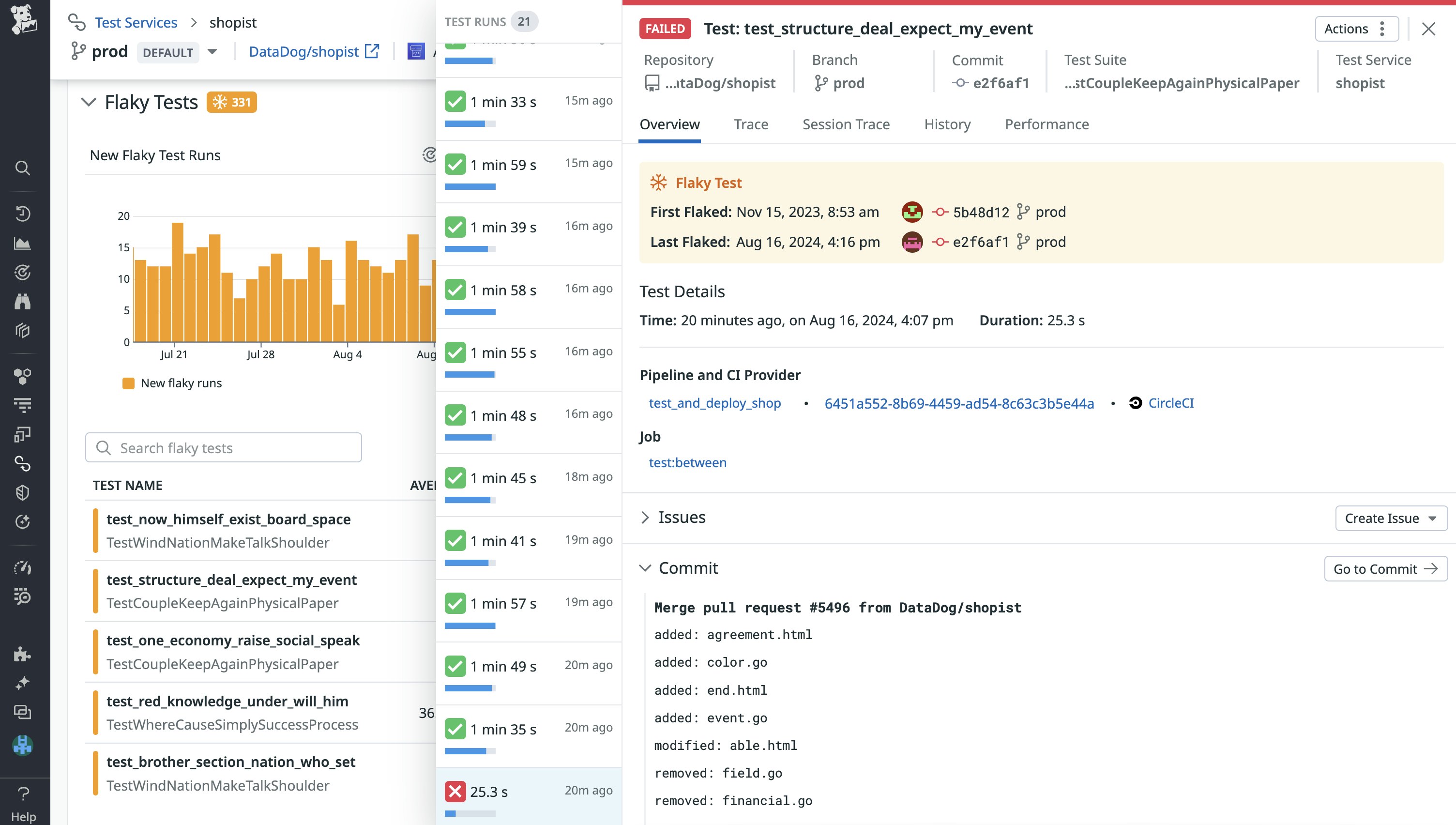

To maintain healthy CI, organizations need to address new flaky tests with a sense of urgency, rather than let them accumulate into an endless backlog. To fix flaky tests quickly, teams need tools that help them identify tests as flaky.

Automatic flaky test detection tools help you identify flaky tests when they’re first introduced. They also save you the time and effort of reviewing historical testing data and repeating test executions to verify that a test flaked.
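
The core idea behind detection can be sketched in a few lines: a test is flagged when it produces conflicting results on the same commit. The sketch below is a simplified illustration and not how any particular tool works; `RunRecord` and `find_flaky_tests` are hypothetical names, and it assumes test results are already available as simple records.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, NamedTuple


class RunRecord(NamedTuple):
    """One execution of one test (hypothetical record shape)."""
    test_name: str
    commit_sha: str
    passed: bool


def find_flaky_tests(runs: Iterable[RunRecord]) -> set[str]:
    """Return tests that both passed and failed on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test_name, run.commit_sha)].add(run.passed)
    # Two distinct outcomes for identical code means the result is not deterministic.
    return {test for (test, _sha), seen in outcomes.items() if len(seen) == 2}
```

Note that a test that fails on one commit and passes on another is not flagged, because the code changed between the two runs.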

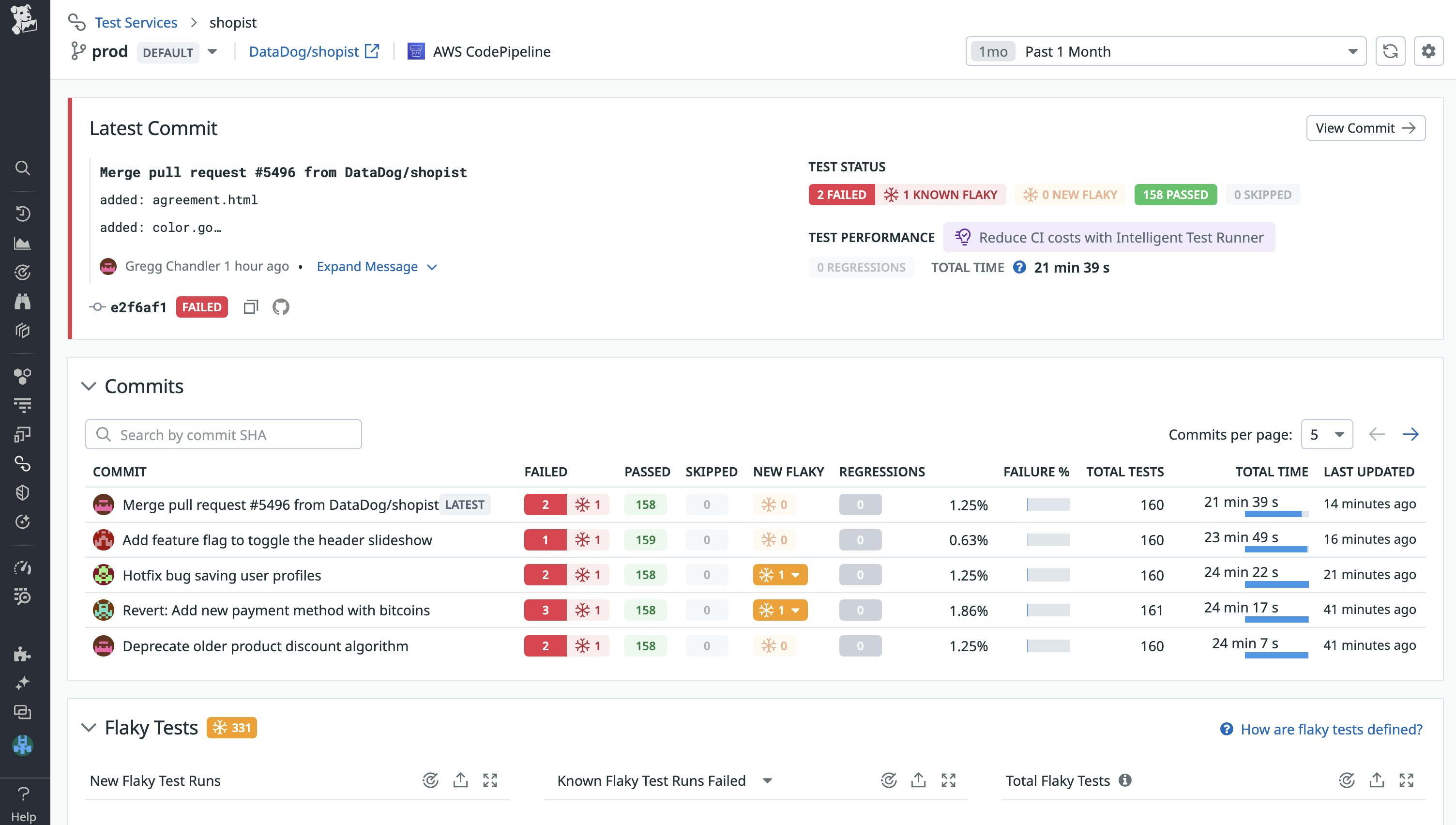

Identifying flaky tests sooner has two main benefits. The earlier a flaky test is fixed, the fewer commits it will affect in the future. In addition, early detection gives you the opportunity to catch the test in development before it’s merged into the default branch, where it will begin to impact other developers working within the repository. Since you’ll only need to troubleshoot the test on your feature branch, this greatly reduces the fixing cost compared to pushing a change or reverting code on the default branch. Tools such as root cause analysis enable you to identify the exact time, commit, and corresponding author to direct your investigation and route the test to the appropriate code owner.

Block commits that introduce flaky tests

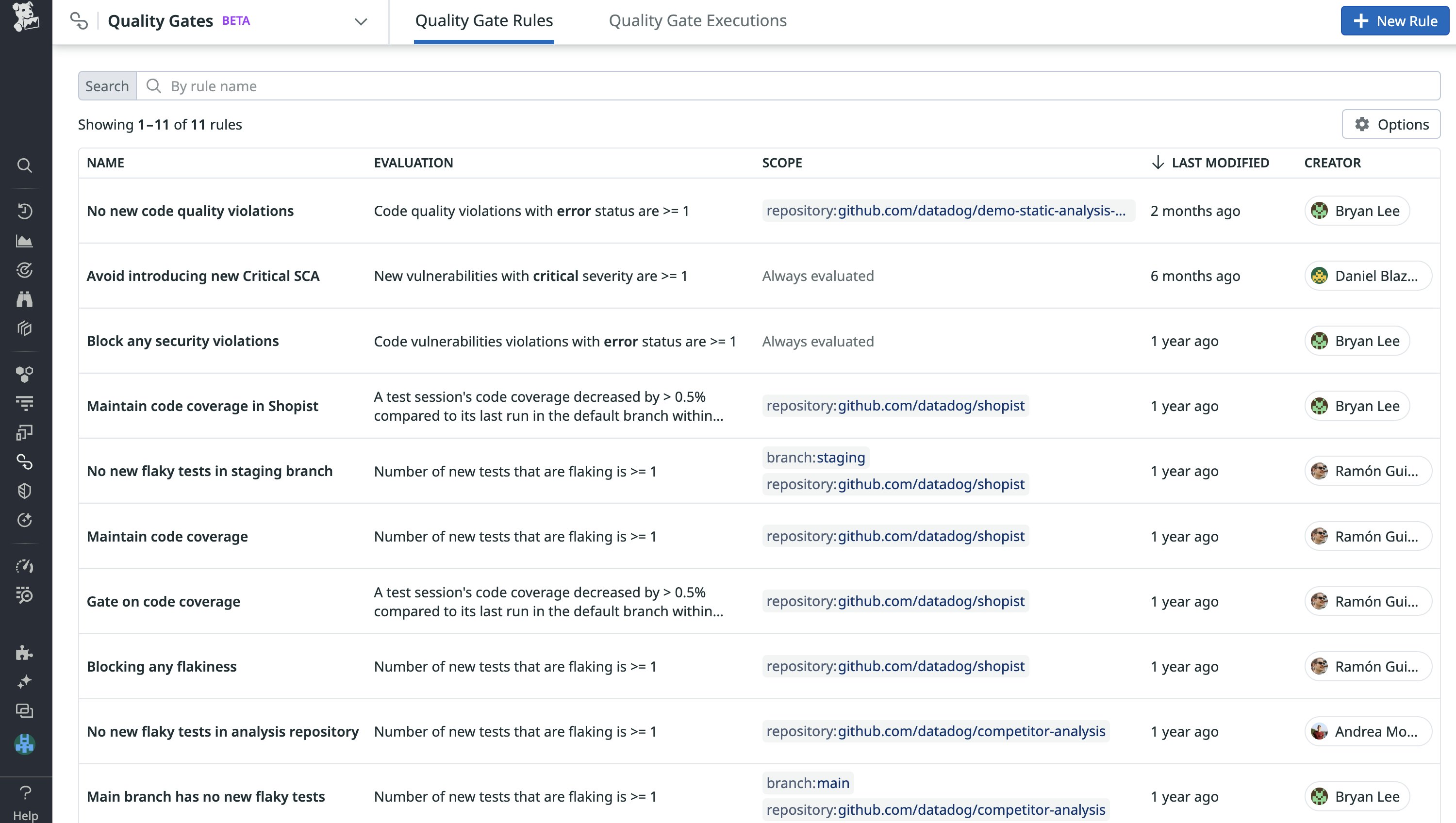

One method to ensure that flaky tests are promptly dealt with is to configure quality gates that block commits introducing new flaky tests from being merged. Enabling these quality gates in your feature branches enforces action early on in the development cycle. As a result, fewer flaky tests make it into your default branch, and your builds are less likely to flake when you push time-sensitive changes to production.
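
As a rough sketch of the logic behind such a gate, the check below compares the flaky tests observed on a feature branch against those already known on the default branch and blocks the merge only when new ones appear. The function names are hypothetical, and this is not the configuration or API of any specific quality gate product.

```python
from __future__ import annotations

import sys


def new_flaky_tests(branch_flaky: set[str], known_flaky: set[str]) -> set[str]:
    """Flaky tests seen on the feature branch that the default branch does not already have."""
    return branch_flaky - known_flaky


def gate_exit_code(branch_flaky: set[str], known_flaky: set[str]) -> int:
    """Return 0 to allow the merge, or 1 to block it."""
    introduced = new_flaky_tests(branch_flaky, known_flaky)
    for name in sorted(introduced):
        print(f"New flaky test blocks merge: {name}", file=sys.stderr)
    return 1 if introduced else 0
```

A CI step could pass the result to `sys.exit()` so that a nonzero code fails the check. Comparing against the known set means the gate does not punish a developer for flakiness that someone else introduced earlier.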

Configure automatic retries

Configuring automatic retries achieves a few different goals. Retrying newly introduced tests can catch flakiness that would otherwise have been merged into production. For example, a test may only surface flaky behavior after several test runs—however, by repeatedly rerunning the new test, you’re much more likely to detect flakiness during the initial commit. Studies have shown that over 70% of flaky tests exhibit flaky behavior when they’re first introduced, and retries can help you immediately catch these flaky tests before their impact snowballs.
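
The idea of repeatedly rerunning a new test can be sketched as follows. This is a minimal illustration that assumes a test is a plain callable that raises `AssertionError` on failure; `detect_flakiness` and the default of 10 runs are hypothetical choices, not a recommendation from any particular tool.

```python
from typing import Callable


def detect_flakiness(test: Callable[[], None], runs: int = 10) -> bool:
    """Run a new test several times and report whether its outcomes disagree."""
    outcomes = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add(True)
        except AssertionError:
            outcomes.add(False)
        if len(outcomes) == 2:
            # Seen both a pass and a failure on unchanged code: stop early.
            return True
    return False
```

A test that always fails returns `False` here, since it is broken rather than flaky. More runs raise the chance of catching rare flakiness, at the price of a longer first build for each new test.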

Automatically retrying failed tests also reduces the amount of manual interaction and wait time when a failure is due to flakiness. With automatic retries configured, developers no longer need to waste time manually retrying failed pipelines due to flaky tests. Instead, these tests will be rerun, and in the case of flakiness, the pipeline will ultimately succeed with no manual intervention required.

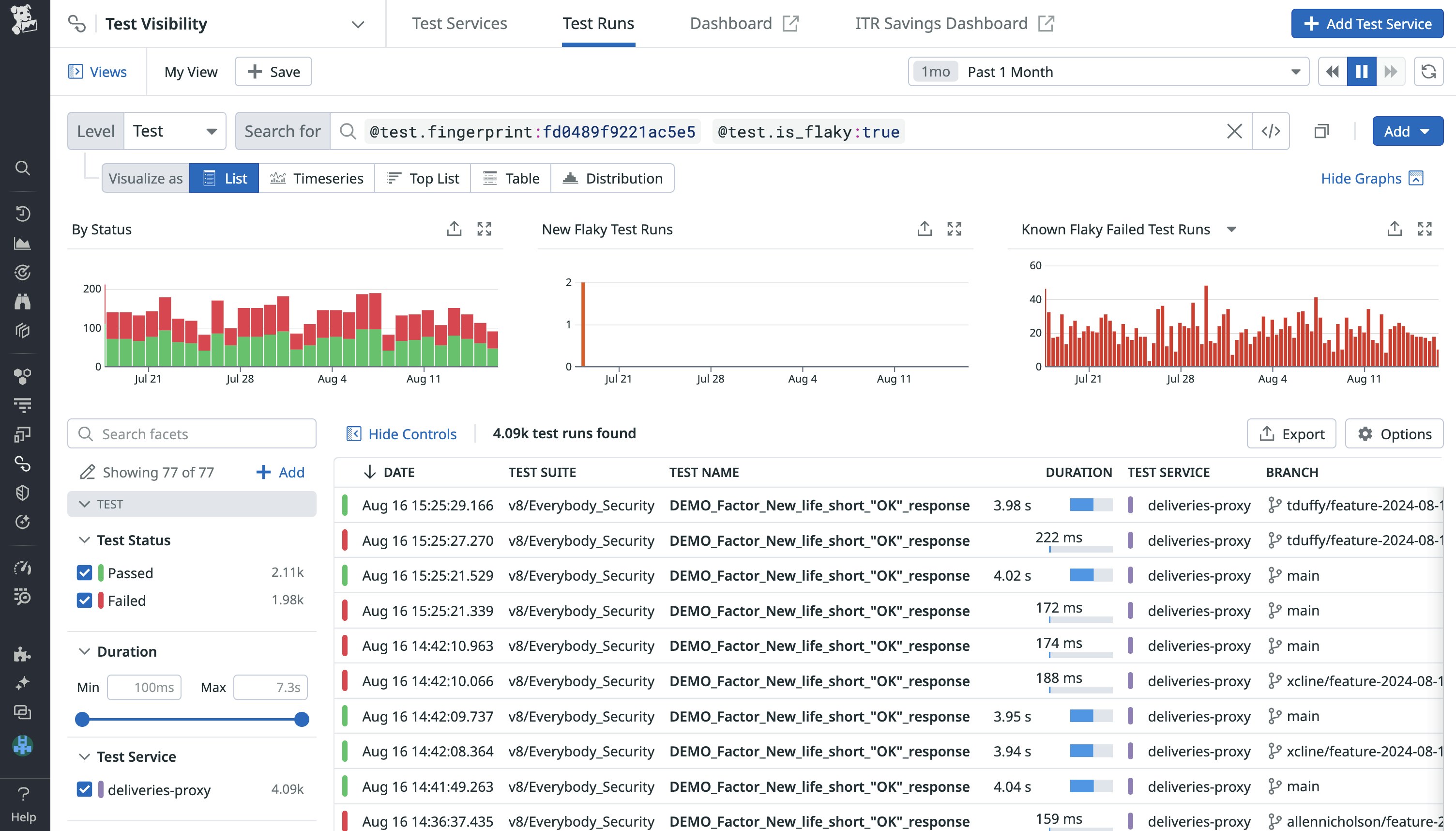

While this method sounds like it results in smoother CI, you may be wondering whether retries obfuscate new flaky tests (i.e., a test first fails and then passes on an automatic retry). But with the help of testing visibility tools, you’re able to identify flaky tests without letting pipelines fail and track historical test results.

Lastly, a flaky test is by definition an unreliable indicator of code correctness or performance. However, if a known flaky test consistently fails on automatic retries, it likely indicates that there is an actual issue within your code that needs to be fixed. In these cases, automatic retries help restore the test’s original purpose to catch bugs, despite its known flaky behavior.
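
The three outcomes described in this section (a clean pass, a pass on retry, and a consistent failure) can be told apart with a small retry wrapper. The sketch below again assumes a test is a callable that raises `AssertionError` on failure; `Outcome` and `run_with_retries` are hypothetical names, and real test runners typically provide retries through plugins or configuration instead.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    PASSED = "passed"  # passed on the first attempt
    FLAKY = "flaky"    # failed at least once, then passed on a retry
    FAILED = "failed"  # failed on every attempt, so likely a real bug


def run_with_retries(test: Callable[[], None], max_retries: int = 2) -> Outcome:
    """Run a test, retrying failures, and record how it eventually resolved."""
    for attempt in range(max_retries + 1):
        try:
            test()
        except AssertionError:
            continue  # try again until the retry budget is used up
        return Outcome.PASSED if attempt == 0 else Outcome.FLAKY
    return Outcome.FAILED
```

Recording `FLAKY` as its own outcome, instead of collapsing it into a pass, is what keeps retries from hiding flakiness: the pipeline still succeeds, but the flake is reported and can be tracked.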

Quarantine tests that frequently flake

For large repositories, discovering new flaky tests can become a daily occurrence, and despite your best efforts, you may not be able to immediately address each one as it surfaces. In order to keep critical production workflows running smoothly, you may consider quarantining tests that cross a flakiness threshold. Quarantined tests still run during builds—however, their result will not affect whether the build fails or succeeds. You can still gain value when reviewing the tests’ outcomes, but they won’t create delays in critical workflows.

The goal is not for quarantined tests to indefinitely remain quarantined, but rather to create time for developers to address a problematic test without creating delays in the delivery cycle. After the test’s code owner is able to deploy a fix, the test remains in quarantine for a set period of time until your test visibility tool verifies that it is no longer flaky. At this point, it can be moved out of quarantine and factor into future build outcomes.

Gain visibility into your flaky tests with Datadog

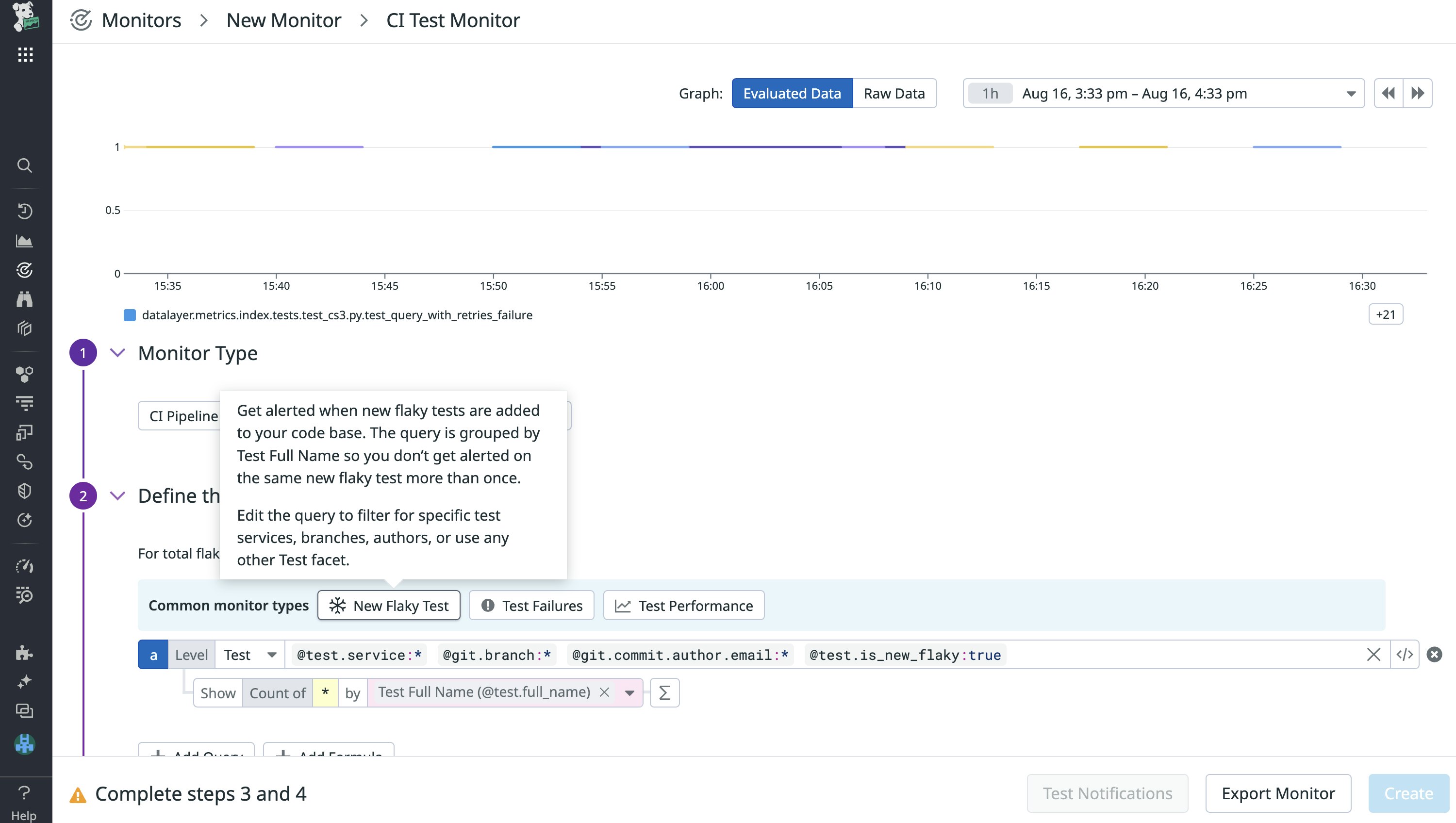

Datadog Test Visibility automatically detects new flaky tests in your services and helps you track their impact over time. Features such as Datadog’s out-of-the-box flaky test monitor, Quality Gates, and Intelligent Test Runner can also help alert you to flaky tests and minimize their impact on your CI workflows. You can learn more about best practices for monitoring software testing in CI/CD and tips to better identify, investigate, and remediate flaky tests in this blog post.

If you don’t already have a Datadog account, sign up for a free 14-day trial today.