Azure App Service is a platform-as-a-service (PaaS) offering for deploying applications to the cloud without worrying about infrastructure. App Service’s “serverless” approach removes the need to provision or manage the servers that run your applications, which provides flexibility, scalability, and ease of use. However, App Service also introduces infrastructure-like considerations that can impact performance and costs. This means that understanding the relationships between App Service resources (e.g., App Service plans and the apps that run on them) is critical for troubleshooting performance issues and optimizing costs.

That’s why we’re excited to release support for App Service in the Datadog Serverless view. You can use this view to:

- Quickly get an overview of App Service, then filter down to the resources you care about

- Map relationships between resources in context with monitoring data

- Investigate apps that exhibit high latency or errors

- Identify underutilized and overloaded App Service plans

- Understand which apps and plans are driving your costs

Visualize all of your Azure App Service resources

Azure App Service has three primary resource types that interact with one another:

- App Service plans

- Web apps

- Function apps

There are different runtimes and options within each resource type, as well as various ways to establish relationships between them. You can host one or many apps on a given plan, and even mix web apps and function apps in the same plan (as long as they run on the same OS). The tier of an App Service plan determines what types of features are available to the plan (e.g., staging environments), as well as its horizontal scaling limit (i.e., the maximum number of instances that can run in the plan).
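The hosting rules described above can be sketched as a small data model. This is only an illustration, not an Azure or Datadog API: the `App` and `Plan` classes, the `max_instances` field, and the sample names and values are all hypothetical, and real per-tier instance limits should be taken from Azure's documentation.

```python
from dataclasses import dataclass, field


@dataclass
class App:
    name: str
    kind: str  # "web" or "function"
    os: str    # "linux" or "windows"


@dataclass
class Plan:
    name: str
    os: str
    max_instances: int  # hypothetical scaling limit implied by the plan's tier
    apps: list = field(default_factory=list)

    def host(self, app: App) -> None:
        # Web apps and function apps can share a plan only if the OS matches.
        if app.os != self.os:
            raise ValueError(
                f"{app.name} runs on {app.os}, but {self.name} is a {self.os} plan"
            )
        self.apps.append(app)


# Hypothetical plan hosting a mix of app types on the same OS.
plan = Plan(name="example-linux-plan", os="linux", max_instances=10)
plan.host(App("storefront", "web", "linux"))
plan.host(App("order-processor", "function", "linux"))

rejected = None
try:
    plan.host(App("legacy-site", "web", "windows"))
except ValueError as err:
    rejected = str(err)

hosted_kinds = sorted(app.kind for app in plan.apps)
```

Here the Linux plan accepts both a web app and a function app, while the Windows app is rejected because it does not match the plan's OS.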

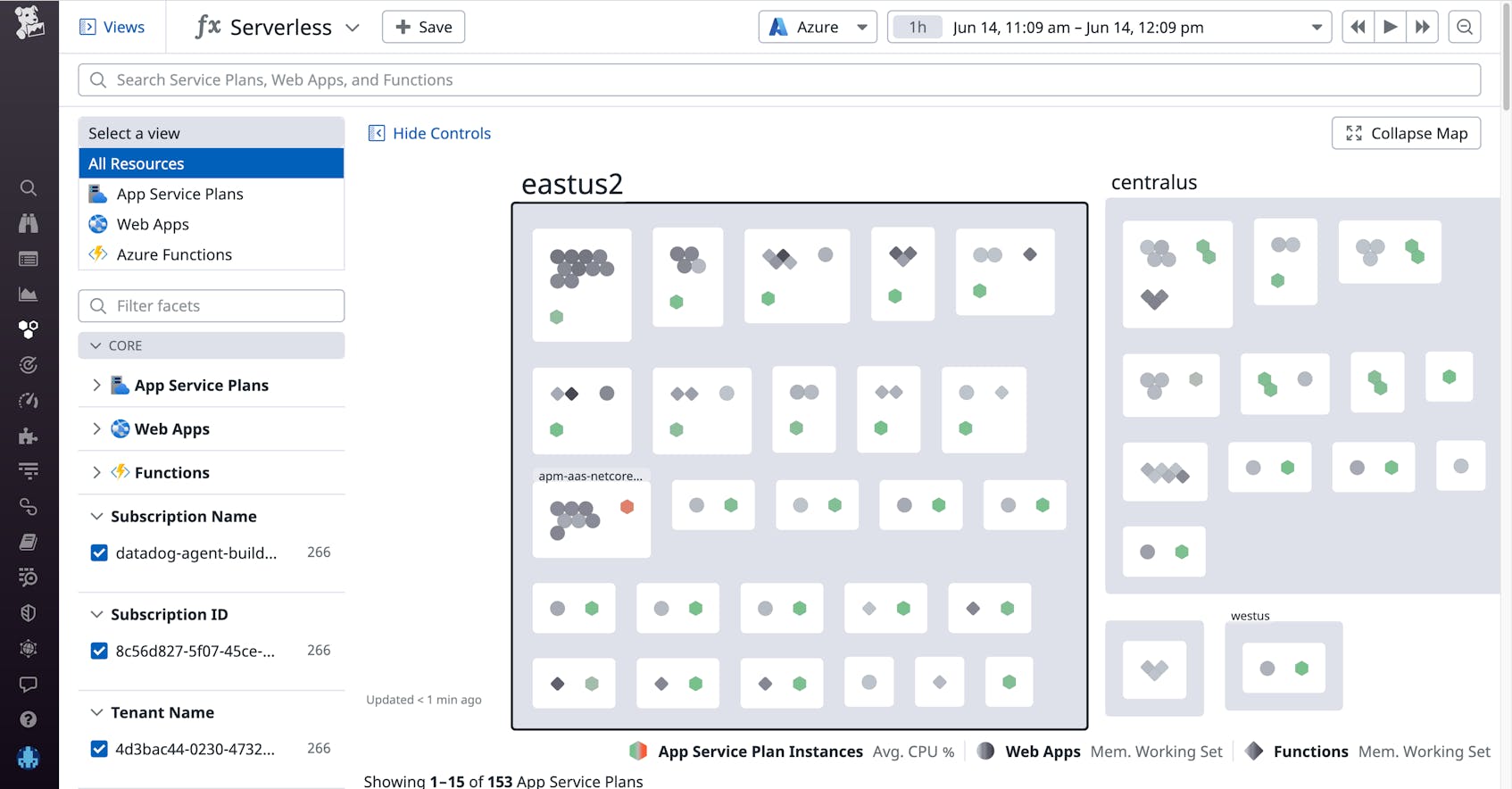

Datadog’s Serverless view was designed with these considerations in mind and gives you a holistic view of your App Service architecture. The App Service Map view provides a bird’s-eye overview of all of your App Service resources. This can help you:

- Understand the composition of your App Services at a glance

- Visualize the relationships between App Service plans and the web apps and function apps being hosted on them

- Easily identify overloaded App Service plans

- Quickly compare the resource utilization of different apps and functions

Even if you’re running hundreds or thousands of resources, the Serverless view makes it easy to filter down to inspect any layer of your App Service stack. All tags on your App Service metrics—including default tags added by Datadog, as well as any custom tags you may have added to your resources in Azure—are available automatically as facets. Using these facets in the left side panel, you can quickly filter to inspect the exact subset of resources you care about, no matter how that’s defined.
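Conceptually, filtering by facets amounts to keeping only the resources that carry every selected tag. The sketch below illustrates that idea on plain Python data; it does not call any Datadog API, and the resource names and tag values are hypothetical.

```python
def filter_by_tags(resources, required_tags):
    """Return the resources that carry every tag in required_tags."""
    required = set(required_tags)
    return [r for r in resources if required <= set(r["tags"])]


# Hypothetical resources with "key:value" tags; names and values are examples only.
resources = [
    {"name": "checkout-web", "tags": ["region:centralus", "team:payments", "os:linux"]},
    {"name": "invoice-func", "tags": ["region:eastus", "team:payments", "os:linux"]},
    {"name": "search-web", "tags": ["region:centralus", "team:search", "os:windows"]},
]

matches = filter_by_tags(resources, ["region:centralus", "team:payments"])
names = [r["name"] for r in matches]
```

Selecting more tags narrows the result, which mirrors how combining facets narrows the list of resources.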

Understand relationships between apps and App Service plans

As mentioned previously, it’s important to understand the relationships that exist between App Service resources. Knowing where each app is running—and what other apps are sharing resources from the same App Service plan—is important for security, troubleshooting, capacity planning, and compliance reasons. The Serverless view makes this information available in a snap; just click on an App Service plan to open a side panel that indicates the set of apps being hosted on it. In the example below, we can see that the App Service plan, apm-linux-container-plan, is hosting two web apps and one function app.

Quickly spot App Service issues

The Serverless view enables you to navigate your App Service plans at a glance. From this vantage point, you can see key data from your plans, such as instance count and resource usage metrics.

You can also drill down to explore the health of your web apps and function apps and sort them by resource utilization or throughput. Below, you can see that the stevelinuxcontainerwebapp app has the highest average response time across all apps.

From here, you can click on the app to get deeper visibility into app-level metrics, traces, and logs that can be helpful for debugging the source of increased latency.

You can also use the Serverless view as a starting point for investigating issues. For example, if you spot signs of resource saturation on an App Service plan (such as high CPU utilization or rising HTTP queue length), it’s important to see which apps may be causing this—and which apps may be affected.

The Serverless view allows you to easily investigate these kinds of issues by getting immediate context around the relationships between your apps and plans. And if you need a more detailed view of metrics, traces, or logs from any given app, you can easily pivot to other parts of the Datadog platform to continue your investigation without losing context.

Identify underutilized and overloaded App Service plans

While App Service abstracts away the underlying infrastructure, your applications are not really serverless. You’re not responsible for maintaining App Service instances, but you still need visibility into the health of your underlying infrastructure to ensure that it can support the load on your apps.

App Service allows you to automatically scale the number of instances in your plan based on rules (e.g., provision a new instance if a plan’s CPU usage exceeds 70 percent). You can also control the size of the instances and bound autoscaling with instance limits, that is, the minimum and maximum number of instances that should run in a plan at any time. The instance count (a unique metric generated by Datadog) is an important indicator of the load on an App Service plan. It is also the dimension by which App Service plans are metered for billing. As such, it’s important to ensure that you are scaling your plans out effectively to meet demand, without overprovisioning them and wasting money when the load is low.
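To make the interaction between scaling rules and instance limits concrete, here is a minimal sketch of a single autoscale step. It is not how Azure implements autoscaling: the function name is hypothetical, the 70 percent scale-out threshold comes from the example above, and the 30 percent scale-in threshold is an assumption added for illustration.

```python
def next_instance_count(current, cpu_percent, min_instances, max_instances,
                        scale_out_above=70.0, scale_in_below=30.0):
    """Apply one step of a simple threshold rule, then clamp to the instance limits."""
    if cpu_percent > scale_out_above:
        desired = current + 1   # add an instance under high CPU
    elif cpu_percent < scale_in_below:
        desired = current - 1   # remove an instance when load is low
    else:
        desired = current       # stay put inside the comfortable band
    # The instance limits bound whatever the rule asks for.
    return max(min_instances, min(max_instances, desired))


scaled_out = next_instance_count(2, 85.0, min_instances=1, max_instances=5)
at_ceiling = next_instance_count(5, 85.0, min_instances=1, max_instances=5)
at_floor = next_instance_count(1, 10.0, min_instances=1, max_instances=5)
unchanged = next_instance_count(3, 50.0, min_instances=1, max_instances=5)
```

The `at_ceiling` case is the one to watch for: the rule asks for more capacity, but the plan is already at its maximum, so CPU stays high while the instance count stops growing.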

Datadog’s Serverless view helps you understand if a plan is overloaded or underutilized at a glance by displaying each App Service plan’s app count, instance count, and CPU and memory usage. Sorting by CPU utilization, we can see that the apm-junkyard-functions plan is at 90 percent CPU utilization. This also indicates that it may be reaching its scaling limit.

We can also dive deeper to understand more about what may be causing this. Since multiple apps can share the same set of resources on an App Service plan, you could run into a “noisy neighbor” situation, where one app impacts the performance of others on the same plan by consuming an outsized portion of the shared resources.

In this case, you see one web app and two function apps sharing this plan. You can quickly spot that the two function apps appear to be consuming significantly more resources than the web app. With this context, you can determine if it makes sense to move any of these apps to another plan or upgrade the existing plan to avoid performance degradation and out-of-memory errors. Each plan belongs to a tier that limits the number of instances it can support, so keep this restriction in mind when deciding how to scale your plans, and change a plan’s tier if needed.
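One way to reason about a noisy neighbor is to compare each app's share of the plan's total resource consumption. The following sketch assumes you already have a per-app CPU figure for a given time window; the app names, the numbers, and the 40 percent threshold are hypothetical.

```python
def cpu_shares(app_cpu_seconds):
    """Return each app's fraction of the total CPU time consumed on the plan."""
    total = sum(app_cpu_seconds.values())
    if total == 0:
        return {name: 0.0 for name in app_cpu_seconds}
    return {name: cpu / total for name, cpu in app_cpu_seconds.items()}


def noisy_neighbors(app_cpu_seconds, threshold=0.4):
    """List apps whose share meets the threshold, largest share first."""
    shares = cpu_shares(app_cpu_seconds)
    flagged = [name for name, share in shares.items() if share >= threshold]
    return sorted(flagged, key=lambda name: -shares[name])


# Hypothetical CPU time (seconds) for one web app and two function apps on a plan.
usage = {"web-app": 20.0, "func-a": 90.0, "func-b": 90.0}

shares = cpu_shares(usage)
noisy = noisy_neighbors(usage)
```

With these sample numbers, the two function apps account for most of the CPU time and are flagged, while the web app's small share suggests it is more likely to be affected than to be the cause.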

Alternatively, you may also want to identify plans that could support more apps. Below, you can see that the appsvc_asp_linux_centralus plan is already running six apps, but because it is only using 8 percent CPU, it still has enough capacity to host even more.

To provide further context for this decision, you can click to view the apps running in that plan. In this case, you can see that it is running four web apps and two function apps. This can help you decide whether or not it makes sense to provision more apps in this particular plan. For example, if you’re planning to release a new feature utilizing one of these apps, you may want to ensure that the plan has enough capacity to support the launch. Or if it looks like one of the web apps has very high response time (as shown below), you may want to hold off on adding more apps to this plan until you’ve had a chance to investigate what may be causing this slowdown.
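The same triage can be expressed as a simple classification over plan-level CPU utilization. In this sketch, the two named plans and their CPU figures come from the examples in this section; the third plan and both thresholds (20 and 80 percent) are hypothetical and would need tuning for your own workloads.

```python
def classify_plan(cpu_percent, low=20.0, high=80.0):
    """Label a plan by CPU utilization using illustrative thresholds."""
    if cpu_percent >= high:
        return "overloaded"
    if cpu_percent <= low:
        return "underutilized"
    return "ok"


plans = [
    {"name": "apm-junkyard-functions", "cpu_percent": 90.0},
    {"name": "appsvc_asp_linux_centralus", "cpu_percent": 8.0},
    {"name": "example-steady-plan", "cpu_percent": 45.0},  # hypothetical plan
]

report = {p["name"]: classify_plan(p["cpu_percent"]) for p in plans}
candidates_for_more_apps = [n for n, label in report.items() if label == "underutilized"]
```

CPU alone is not the whole picture: as noted above, a plan with spare CPU may still host an app with high response time, so it is worth checking app-level health before adding more apps.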

Get insight into your App Service plans’ APM usage

The Datadog extension for Azure App Service provides automatic APM tracing for your apps. Datadog APM meters this usage based on the instance count of the underlying App Service plan. For example, if you install the extension on an app that runs on an App Service plan with an instance count of three, this counts as three App Service instances in APM. But these instance counts are dynamic, and App Service plans can host many apps, some of which may be submitting traces and some not.
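As a rough worked example of this metering model, the sketch below sums the instance counts of plans that host at least one app submitting traces. It rests on an assumption: that a plan's instances are counted once when any of its apps sends traces. The plan names, app names, and counts are hypothetical, and your actual usage is determined by Datadog, not by this calculation.

```python
def billable_apm_instances(plans):
    """Sum instance counts of plans with at least one app submitting traces."""
    return sum(
        plan["instance_count"]
        for plan in plans
        if any(app["emitting_traces"] for app in plan["apps"])
    )


def untraced_apps(plans):
    """List apps that send no traces but sit on a plan where another app does."""
    return [
        app["name"]
        for plan in plans
        if any(a["emitting_traces"] for a in plan["apps"])
        for app in plan["apps"]
        if not app["emitting_traces"]
    ]


# Hypothetical plans: three host traced apps, one does not.
plans = [
    {"name": "plan-a", "instance_count": 3, "apps": [
        {"name": "web-1", "emitting_traces": True},
        {"name": "func-1", "emitting_traces": False},
    ]},
    {"name": "plan-b", "instance_count": 2, "apps": [
        {"name": "web-2", "emitting_traces": True},
    ]},
    {"name": "plan-c", "instance_count": 1, "apps": [
        {"name": "func-2", "emitting_traces": True},
    ]},
    {"name": "plan-d", "instance_count": 4, "apps": [
        {"name": "web-3", "emitting_traces": False},
    ]},
]

total_instances = billable_apm_instances(plans)
missing_tracing = untraced_apps(plans)
```

In this sample, plans a, b, and c contribute 3 + 2 + 1 = 6 instances, plan d contributes nothing, and `func-1` is the one app on a traced plan that is not yet sending traces.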

The Serverless view enables you to understand which App Service plans are contributing to APM usage, and drill down to see exactly which apps are submitting traces. This allows you to ensure the proper deployment of APM within App Service, and even provides a detailed breakdown of any apps that have not been configured properly.

The Data column has a trace icon that indicates whether or not the resource is submitting traces to Datadog. You can sort the list of your App Service plans by this column to bring the plans whose apps are submitting APM traces to the top, or you can use the emitting_traces facet to filter the list.

From here, the Instance Count column shows you the number of billable APM instances. So in this case, Datadog is collecting traces from a total of six App Service instances across three App Service plans. But you can also click to inspect an App Service plan, and see which apps are actually submitting those traces. In the example below, it looks like only one of the 17 apps running on this plan is actually submitting traces, so you might consider deploying the App Service extension on the others as well.

Get started today

If you’re already using the Azure integration, you will automatically be able to explore your App Service resources in the new Serverless view. Otherwise, install Datadog’s Azure integration to start monitoring App Service and all the other services in your stack.

If you don’t yet have a Datadog account, sign up for a 14-day free trial to get complete visibility into the health of your Azure environment.