Anjali Thatte

Curtis Maher

AWS Inferentia and AWS Trainium are purpose-built AI chips that, together with the AWS Neuron SDK, are used to build and deploy generative AI models. As models require ever larger numbers of accelerated compute instances, observability plays a critical role in ML operations, helping users improve performance, diagnose and fix failures, and optimize resource utilization.

Datadog provides real-time monitoring for cloud infrastructure and ML operations, offering visibility through LLM Observability and over 1,000 integrations with cloud technologies. Now, with our AWS Neuron integration, users can track the performance of their Inferentia- and Trainium-based instances, helping ensure efficient inference, optimize resource utilization, and prevent service slowdowns.

Comprehensive visibility into AWS Inferentia and AWS Trainium health and performance

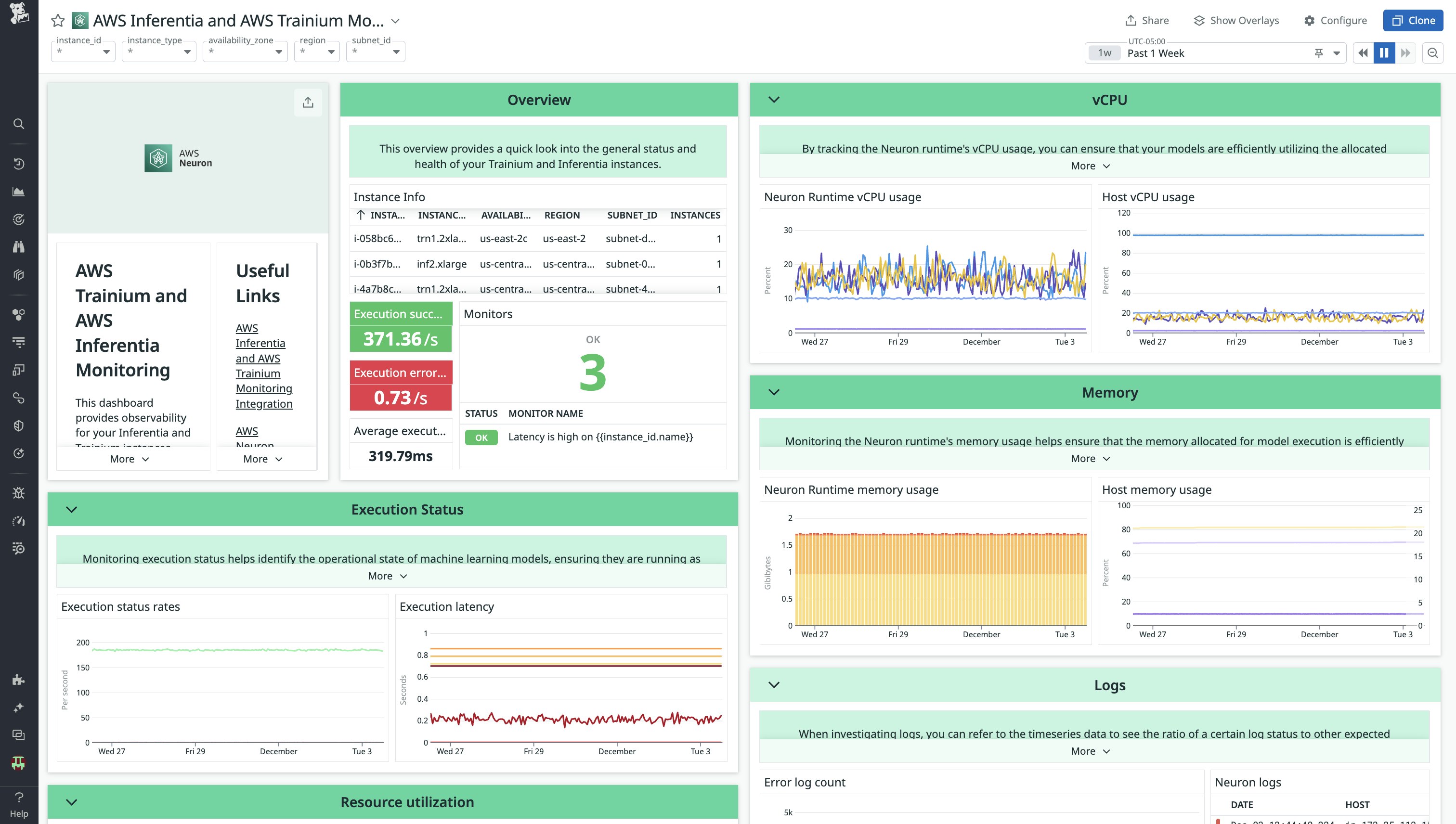

Datadog’s integration with the AWS Neuron SDK automatically collects metrics and logs from Inferentia and Trainium instances and sends them to the Datadog platform. Upon enabling the integration, users can start monitoring immediately with an out-of-the-box (OOTB) dashboard, modify preexisting dashboards and monitors, and add new ones tailored to their specific monitoring requirements.
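Agent-based integrations commonly collect accelerator metrics by scraping a Prometheus-format text endpoint and tagging each sample before forwarding it. Assuming a similar collection path here (this post does not specify the transport, and the metric and label names below are hypothetical, not the integration's actual names), a minimal sketch of turning a scraped payload into tagged samples might look like this:

```python
import re
from typing import Dict, List, Tuple

# A sample line in Prometheus text exposition format, for example:
#   metric_name{label="value",other="x"} 0.82
_SAMPLE = re.compile(
    r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)"
    r"(?:\{(?P<labels>[^}]*)\})?"
    r"\s+(?P<value>\S+)"
)
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_exposition(text: str) -> List[Sample]:
    """Turn a scraped text payload into (metric name, tags, value) tuples.

    Simplifications: escaped quotes inside label values are not supported,
    and any trailing timestamp after the value is ignored.
    """
    samples: List[Sample] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = _SAMPLE.match(line)
        if match is None:
            continue  # skip lines this simplified parser cannot read
        labels = dict(_LABEL.findall(match.group("labels") or ""))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


# Hypothetical payload: these metric and label names are illustrative only.
payload = """
# TYPE neuroncore_utilization_ratio gauge
neuroncore_utilization_ratio{instance_id="i-abc",neuroncore="0"} 0.82
neuroncore_utilization_ratio{instance_id="i-abc",neuroncore="1"} 0.35
"""

for name, tags, value in parse_exposition(payload):
    print(name, tags["neuroncore"], value)
```

Keeping labels as a tag dictionary mirrors how per-instance and per-NeuronCore breakdowns can later be filtered and grouped on a dashboard.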

The OOTB dashboard offers a detailed view of your AWS AI chip fleet (Inferentia or Trainium), including the number of instances, their availability, and their region. Real-time metrics give an immediate snapshot of infrastructure health, and preconfigured monitors alert teams to critical issues such as high latency, excessive resource utilization, and execution errors.

For example, when latency spikes on a specific instance, the corresponding monitor turns red on the dashboard and sends alerts through Datadog or other notification channels, such as Slack or email. High latency may indicate heavy user demand or inefficient data pipelines, either of which can slow down response times. By catching these signals early, teams can respond in real time to maintain a high-quality user experience.
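To make the alerting behavior above concrete, here is a minimal sketch of a two-level latency threshold check evaluated per instance. It is a hypothetical illustration, not Datadog's monitor engine: the class, the function, the averaging rule, and the 0.2 s and 0.5 s thresholds are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Sequence


@dataclass
class LatencyMonitor:
    """Hypothetical two-level threshold monitor (not Datadog's monitor engine)."""

    warning_seconds: float
    critical_seconds: float

    def evaluate(self, window: Sequence[float]) -> str:
        """Classify the average latency observed in one evaluation window."""
        if not window:
            return "NO DATA"
        average = mean(window)
        if average >= self.critical_seconds:
            return "ALERT"  # in a real monitor, this state would trigger notifications
        if average >= self.warning_seconds:
            return "WARN"
        return "OK"


def alerting_instances(monitor: LatencyMonitor,
                       latencies: Dict[str, Sequence[float]]) -> List[str]:
    """Return the IDs of instances whose latency window is in the ALERT state."""
    return sorted(
        instance for instance, window in latencies.items()
        if monitor.evaluate(window) == "ALERT"
    )


monitor = LatencyMonitor(warning_seconds=0.2, critical_seconds=0.5)
latencies = {
    "i-aaa": [0.10, 0.12, 0.11],  # healthy
    "i-bbb": [0.30, 0.25, 0.35],  # elevated
    "i-ccc": [0.60, 0.90, 0.40],  # spiking
}
print(alerting_instances(monitor, latencies))
```

Evaluating each instance separately is what lets a single spiking host stand out instead of being averaged away across the fleet.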

Datadog’s Neuron integration enables tracking of key performance metrics, providing crucial insights for troubleshooting and optimization:

- Execution status: Monitor how many model inference runs complete successfully per second, and track failed or incomplete inferences. With this data, you can confirm that models are running smoothly and reliably. A rising failure count may signal data quality or model compatibility issues that need to be addressed.

- Resource utilization: Gain a granular view of memory usage and utilization across NeuronCores. This shows how effectively resources are being used and when it might be time to rebalance workloads or scale resources before bottlenecks cause service disruptions in your end-user AI applications.

- vCPU usage: Keep an eye on vCPU utilization to make sure your models are not overburdening the host infrastructure. When vCPU usage crosses a configured threshold, you are alerted and can decide whether to redistribute workloads or upgrade to a larger instance type to avoid slowdowns.
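The signals in the list above reduce to a few ratios and threshold checks. The sketch below assumes you already have per-window counts of completed, failed, and incomplete executions plus a host vCPU usage ratio; the function names and the default thresholds (85% vCPU, 1% failures) are illustrative assumptions, not values defined by the integration.

```python
from typing import Dict, List


def execution_summary(completed: int, failed: int, incomplete: int,
                      window_seconds: float) -> Dict[str, float]:
    """Summarize execution counters gathered over one time window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    total = completed + failed + incomplete
    return {
        "completed_per_second": completed / window_seconds,
        # Failed and incomplete runs both count against reliability.
        "failure_ratio": (failed + incomplete) / total if total else 0.0,
    }


def needs_attention(vcpu_usage_ratio: float, failure_ratio: float,
                    vcpu_threshold: float = 0.85,
                    failure_threshold: float = 0.01) -> List[str]:
    """Return human-readable reasons to act; an empty list means healthy."""
    reasons: List[str] = []
    if vcpu_usage_ratio > vcpu_threshold:
        reasons.append("vCPU usage high: redistribute workloads or use a larger instance type")
    if failure_ratio > failure_threshold:
        reasons.append("failures rising: check input data quality and model compatibility")
    return reasons


summary = execution_summary(completed=1176, failed=18, incomplete=6, window_seconds=60)
print(summary)
print(needs_attention(vcpu_usage_ratio=0.92, failure_ratio=summary["failure_ratio"]))
```

Returning reasons rather than a single boolean keeps the suggested next step (rebalance, resize, or inspect data) attached to the signal that triggered it.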

By consolidating these metrics into one view, Datadog helps teams keep Neuron workloads efficient and high-performing, identify issues in real time, and optimize infrastructure as needed. Combined with Datadog's LLM Observability capabilities, the Neuron integration gives users comprehensive visibility into their LLM applications.

Get started with monitoring AWS Inferentia and AWS Trainium today

Datadog’s integration with AWS Neuron provides real-time visibility into AWS Inferentia and AWS Trainium, helping customers optimize resource utilization, troubleshoot issues, and ensure seamless performance at scale.

To learn more about how Datadog integrates with AWS machine learning services, check out Datadog's AWS Neuron documentation or our blog posts on monitoring Amazon Bedrock and Amazon SageMaker with Datadog.