Matthieu Jaillais

Aaron Kaplan

Over the past few years, Arm has surged to the forefront of computing. For decades, Arm processors were mainly associated with a handful of specific use cases, such as smartphones, IoT devices, and the Raspberry Pi. But the introduction of AWS Graviton2 in 2019 and the adoption of Arm-based hardware platforms by Apple and others helped bring about a dramatic shift, and Arm is now the most widely used processor architecture in the world.

In 2023, with the stage set by Arm’s growing adoption, widening software support, and increasingly impressive cost and energy efficiency, Datadog undertook a massive project: in a concerted effort by dozens of teams, we migrated nearly our entire Kubernetes fleet on AWS—comprising hundreds of applications and thousands of microservices—to Graviton-powered EC2 instances.

In this post, we’ll provide a detailed overview of Datadog’s migration to Arm. We’ll explore the evolution of our CI/CD system and show you how we created visibility into the migration that helped us make informed decisions, understand our progress, and coordinate our efforts at scale. Finally, we’ll highlight what we ultimately accomplished with the migration, from greater cost and energy efficiency to improved resilience and portability.

Before the migration

We first started experimenting with Arm in 2017, when we introduced the first Arm-compatible Datadog Agent (the “Puppy Agent”—a stripped-down version lightweight enough to run on a Raspberry Pi). Over the next few years, as software support for Arm became increasingly widespread—and with the introduction of Graviton2 in 2019, which brought a major leap in Arm-based performance and savings—there was a clear opportunity to adapt our applications and services to Arm without compromising on performance.

Arm promised growing cost and energy savings. In 2022, we stood to reduce our EC2 costs by as much as 20 percent by using Graviton-based instances instead of x86-64 ones. Moreover, the tide was clearly turning toward Arm, and we wanted to ensure the ongoing adaptability of our infrastructure.

So, in 2022, we started migrating services to Arm on a piecemeal basis. By April 2023, about 20 percent of our Kubernetes fleet on AWS was running on Graviton-based EC2 instances. At that point, the timing was right for a concerted push, and we hatched a plan to migrate our entire fleet to Arm by the end of the year.

Planning the migration

Our Kubernetes fleet on AWS comprises thousands of separate containerized services. At a high level, planning the migration meant determining which of these services could be migrated to Arm and which of those migrations would benefit us the most.

Our first step was to benchmark performance and costs for each of our services. This meant determining baseline CPU, memory, and network usage while working with our FinOps team to analyze our current costs and estimate our potential savings with Datadog Cloud Cost Management. To achieve this, we used DDSQL to pull a list of all of our EC2 instances. We then joined this data to a reference table that mapped the most likely migration paths by pairing each instance type we were using with its closest Graviton equivalent. For example, we assumed that an m5.2xlarge instance would be replaced by an m6g.2xlarge instance. We then used the cost per core of each of these instance types to calculate conservative savings estimates (in percentages) for migrating each instance type. These estimates served as an important guide in setting priorities throughout the course of the migration.
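The reference-table approach described above can be sketched in a few lines. This is a minimal illustration, not Datadog's actual tooling: the `c5.4xlarge` → `c6g.4xlarge` pairing and every hourly price are hypothetical placeholders, and "conservative" is interpreted here as assuming a 1:1 core mapping that ignores any per-core performance gains.

```python
"""Sketch: per-instance-type savings estimates for an x86 -> Graviton migration.

All prices are HYPOTHETICAL placeholders, not real AWS pricing.
"""

from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    hourly_price: float  # hypothetical on-demand USD per hour

    @property
    def cost_per_core(self) -> float:
        return self.hourly_price / self.vcpus


# Reference table: current x86 type -> closest Graviton equivalent.
# The m5 -> m6g pairing comes from the example in the text; the c5 one is hypothetical.
MIGRATION_PATHS = {
    "m5.2xlarge": "m6g.2xlarge",
    "c5.4xlarge": "c6g.4xlarge",
}

CATALOG = {
    "m5.2xlarge": InstanceType("m5.2xlarge", 8, 0.40),
    "m6g.2xlarge": InstanceType("m6g.2xlarge", 8, 0.32),
    "c5.4xlarge": InstanceType("c5.4xlarge", 16, 0.70),
    "c6g.4xlarge": InstanceType("c6g.4xlarge", 16, 0.595),
}


def savings_pct(x86_type: str) -> float:
    """Estimated percentage saved per core by moving x86_type to its Graviton equivalent."""
    current = CATALOG[x86_type]
    target = CATALOG[MIGRATION_PATHS[x86_type]]
    # Conservative: same core count after migration, no credit for faster cores.
    return round((1 - target.cost_per_core / current.cost_per_core) * 100, 1)


if __name__ == "__main__":
    for x86_type, arm_type in MIGRATION_PATHS.items():
        print(f"{x86_type} -> {arm_type}: ~{savings_pct(x86_type)}% per core")
```

Working per core rather than per instance keeps the estimate valid when a service's footprint is later resized to a different instance size in the same family.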

In order to maximize our savings potential, we identified our 25 most expensive workloads (using Cloud Cost Management) along with those that would be relatively quick to migrate. Our container registry was an important resource when it came to the latter, as the manifest for each image in the registry includes information on the supported architectures for that image. Services using Arm-compatible images—especially those built with interpreted rather than compiled languages—had the greatest potential to be migrated quickly. For services using images that did not support Arm, the technical program manager in charge of the migration worked with our Developer Experience team to find ways forward by creating alternate, Arm-compatible images or designating those services as exceptions.
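As a rough illustration of reading Arm support from an image manifest, the sketch below inspects a multi-architecture manifest (an OCI image index or Docker manifest list) that has already been fetched, for example with `docker manifest inspect` or the registry's `GET /v2/<name>/manifests/<reference>` endpoint. The function names are hypothetical, and the sketch deliberately treats single-architecture manifests as "unknown" because their architecture lives in a separate config blob that it does not fetch.

```python
import json


def supported_platforms(manifest: dict) -> set[tuple[str, str]]:
    """Return the (os, architecture) pairs listed in a multi-arch manifest.

    OCI image indexes and Docker manifest lists both carry a "manifests" array
    whose entries have a "platform" object. A single-architecture manifest has
    no such array, so it yields an empty set here.
    """
    entries = manifest.get("manifests")
    if not isinstance(entries, list):
        return set()
    return {
        (entry["platform"].get("os", ""), entry["platform"].get("architecture", ""))
        for entry in entries
        if isinstance(entry.get("platform"), dict)
    }


def is_arm_ready(manifest: dict) -> bool:
    """True if the image declares a linux/arm64 variant."""
    return ("linux", "arm64") in supported_platforms(manifest)


if __name__ == "__main__":
    # Trimmed example of an image index (digests and sizes omitted).
    index = json.loads("""
    {
      "schemaVersion": 2,
      "mediaType": "application/vnd.oci.image.index.v1+json",
      "manifests": [
        {"platform": {"os": "linux", "architecture": "amd64"}},
        {"platform": {"os": "linux", "architecture": "arm64", "variant": "v8"}}
      ]
    }
    """)
    print(is_arm_ready(index))  # True
```

Run across every image referenced in production, a check like this yields the list of services that could move quickly versus those needing new Arm-compatible images.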

Later in this post, we’ll go into more depth on how we tracked and communicated priorities at scale during the migration. But first, we should define the migration process itself; creating guidelines for this process was another key part of preparing and managing the migration at scale.

The migration pipeline

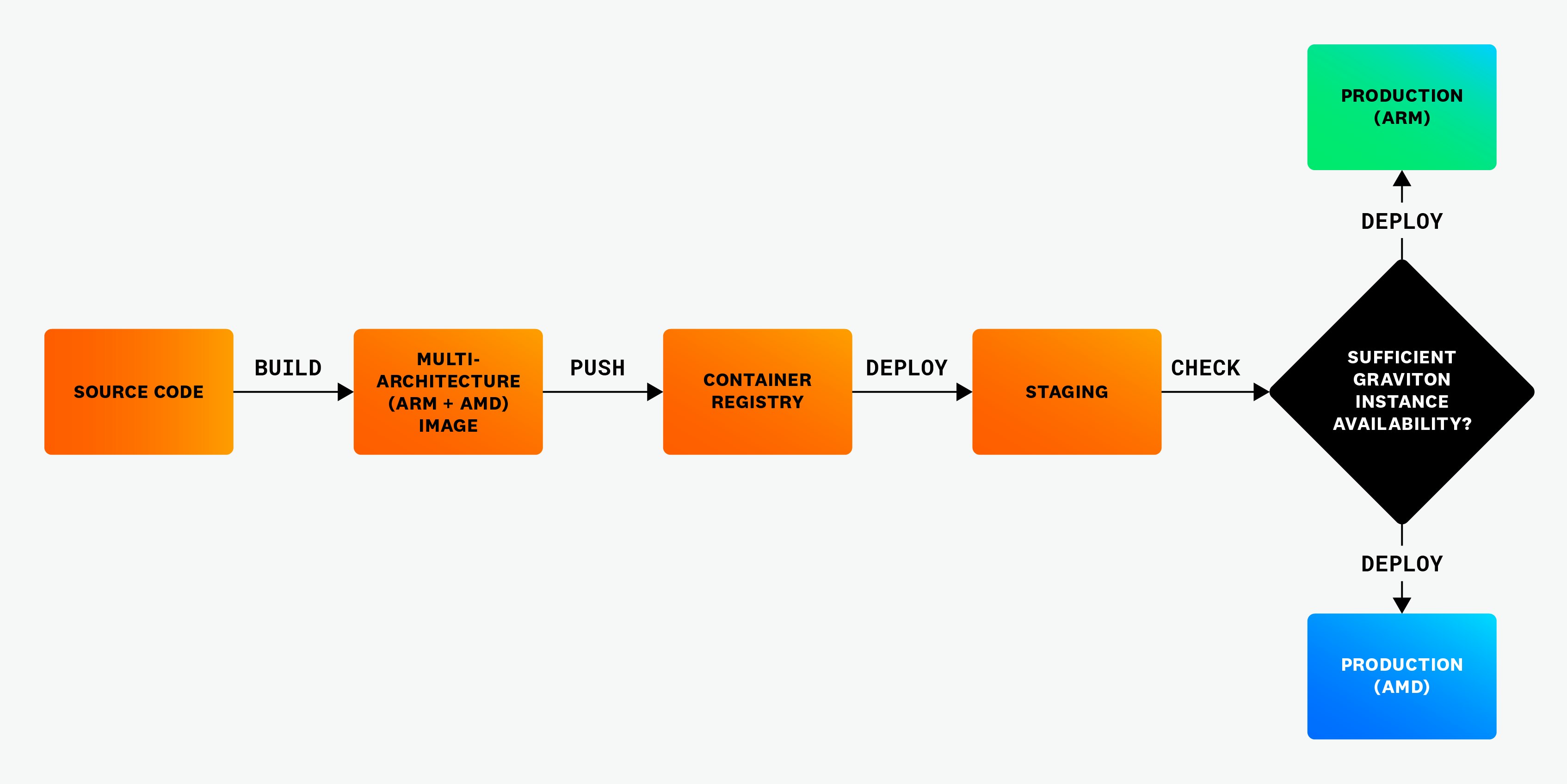

Migrating to Arm required major changes throughout our entire CI/CD pipeline. In this pipeline, each service is built into a container image, which is then stored in our container registry. From there, the service is deployed to staging and then to production. To migrate each of our Kubernetes services on AWS to Arm, we created a multi-architecture build of the service (compatible with both x86 and Arm), ran tests in staging (rebuilding as needed), and then, after verifying sufficient capacity for Arm-based EC2 instances, deployed it to Kubernetes in production.

Next, we’ll take a closer look at each stage of the migration pipeline.

Multi-architecture builds: Packaging our applications and services for Arm

At Datadog, we use many different languages. When it came to building our applications and services for Arm, services built with interpreted languages, like Python and JavaScript, presented relatively few difficulties. The main challenges were with compiled languages; many of our services are written in Go, for example. Building these for Arm meant ensuring the compatibility of all of their dependencies, including links against native libraries that often weren’t built for Arm.

Beyond these compatibility issues, we also faced performance issues when it came to build time. In our CI/CD pipeline, we started out using x86 GitLab runners capable of emulating Arm through QEMU. This solution helped us cut down on the significant refactoring that creating multi-architecture images entails. But we soon encountered problems when it came to building more complex images: builds that had taken seconds for x86 were taking minutes, and those that had taken minutes were taking hours:

| Image | Emulated build (QEMU on x86) | Native build |
|---|---|---|
| hello-world | 1 min 4 sec | 23 sec |
| toolbox | 92 min | 16 min |

Clearly, this wasn’t sustainable. Ultimately, we found a solution in Docker BuildKit, which enabled us to build images in Kubernetes by flexibly incorporating Arm nodes alongside x86 ones in our pipeline, so that Arm images could be built natively instead of under emulation.

Staging: Ensuring optimal performance

Once each service was deployed in staging, we had to ensure that running the service on Arm would not compromise its performance to an extent that would affect our customers or cancel out potential savings. If the new, multi-architecture build failed on this count, we would investigate the potential causes, make adjustments, and rebuild as needed. In some cases, there were no clear workarounds, and the service was excluded from the scope of the migration.
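The promote, rebuild, or exclude loop described above can be expressed as a small decision function. This is a hypothetical sketch: the p99 latency comparison, the 10 percent tolerance, and the three-attempt limit are illustrative assumptions, not the criteria Datadog actually used.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PROMOTE = "promote"  # Arm build is acceptable; continue toward production
    REBUILD = "rebuild"  # investigate, adjust, and rebuild
    EXCLUDE = "exclude"  # no clear workaround; record the service as an exception


@dataclass
class StagingResult:
    x86_p99_latency_ms: float
    arm_p99_latency_ms: float
    x86_cost_per_hour: float
    arm_cost_per_hour: float  # cost of the Arm capacity needed for the same load


# Hypothetical thresholds; real values would depend on each service's SLOs.
MAX_LATENCY_REGRESSION = 0.10  # tolerate up to a 10% p99 regression
MAX_ATTEMPTS = 3


def evaluate(result: StagingResult, attempt: int) -> Decision:
    """Decide what to do with a service after a staging run on Arm."""
    latency_regression = result.arm_p99_latency_ms / result.x86_p99_latency_ms - 1
    saves_money = result.arm_cost_per_hour < result.x86_cost_per_hour
    if latency_regression <= MAX_LATENCY_REGRESSION and saves_money:
        return Decision.PROMOTE
    # Failed: either customer impact or the savings were cancelled out.
    return Decision.REBUILD if attempt < MAX_ATTEMPTS else Decision.EXCLUDE
```

Checking cost alongside latency mirrors the two failure modes in the text: a build can be fast enough yet still need so much extra capacity that the savings disappear.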

Verifying instance availability and deploying to production

To migrate our most compute-intensive services, we sometimes required cutting-edge instance types. Graviton3-based instances had just been introduced at the time of the migration and were in high demand. Where Graviton3 was needed, we had to check instance availability before deploying and use x86 instances as a fallback. We used a canary strategy for deploying to production, testing along the way.
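A minimal sketch of the availability check with an x86 fallback, plus a stepwise canary, might look like the following. The instance types (`c7g.8xlarge` as the Graviton3 choice, `c6i.8xlarge` as the x86 fallback), the capacity snapshot, and the canary percentages are all hypothetical; how availability is actually measured is out of scope here.

```python
from typing import Callable, Mapping

# Hypothetical preference order: Graviton3 first, x86 as the fallback.
PREFERRED_TYPES = ("c7g.8xlarge", "c6i.8xlarge")

# Hypothetical canary stages: percentage of replicas moved to the new build.
CANARY_STEPS = (1, 10, 50, 100)


def pick_instance_type(available: Mapping[str, int], needed: int) -> str:
    """Return the first preferred type with enough obtainable instances.

    `available` is a hypothetical snapshot of obtainable capacity per type.
    """
    for instance_type in PREFERRED_TYPES:
        if available.get(instance_type, 0) >= needed:
            return instance_type
    raise RuntimeError("no preferred instance type has enough capacity")


def canary_rollout(is_healthy: Callable[[int], bool]) -> int:
    """Advance through CANARY_STEPS, checking health at each stage.

    Returns the percentage finally running the new build: 100 on success,
    or 0 if any stage fails and the rollout is reverted.
    """
    for pct in CANARY_STEPS:
        if not is_healthy(pct):
            return 0
    return CANARY_STEPS[-1]


if __name__ == "__main__":
    print(pick_instance_type({"c7g.8xlarge": 2, "c6i.8xlarge": 10}, needed=4))  # c6i.8xlarge
```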

In summary, migrating individual services to Arm was a complex process that required ensuring the compatibility of dependencies, benchmarking performance, monitoring deployments in staging and production, and iterating as necessary in order to optimize. Given the complexity of the migration process and the scale at which we were conducting it—with many separate teams working in parallel—establishing observability into the migration pipeline was essential.

Tracking the migration

To track the migration, we defined four KPIs:

- Arm adoption rate in production: By monitoring our EC2 infrastructure with Datadog, we were able to count the number of Graviton cores in our production infrastructure and the share of CPU they accounted for. This was our primary KPI for quantifying our progress, and all other KPIs were weighted accordingly.

- Baseline Arm-readiness: As mentioned earlier in this post, the manifest for each image in our container registry includes information on the supported architectures for that image. By querying the registry, we were able to check the Arm compatibility of each image used in production based on tags on our running cores. This helped us continuously measure the possible scope of the migration.

- Share of exceptions: As mentioned, there were some cases in which migrating to Arm was not feasible. Tracking exceptions was important for refining our numbers when measuring our progress and understanding the impediments to the migration. We tracked exceptions in three separate categories:

  - Services that were deprecated or being decommissioned.

  - Services that exhibited degraded performance on Arm to an extent that might affect our customers or negate cost benefits.

  - Services that required a special Arm instance type (with high-performance local storage or high network bandwidth) due to performance issues.

- Jira tracking coverage: Technical program managers supplied engineering teams with a process for tracking their migration progress in Jira. Jira tracking was an important tool for creating shared visibility into the migration across our entire fleet, especially for prolonged service migrations that took place over multiple fiscal quarters.
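To make the four KPIs concrete, here is a hypothetical sketch that computes them from per-workload records. The `Workload` fields, the ticket keys, and the "in-scope adoption rate" (which drops exceptions from the denominator, one possible way to "refine" the numbers) are assumptions for illustration, not Datadog's actual schema or formulas. It assumes a non-empty fleet.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Workload:
    service: str
    cores: int                         # cores allocated in production
    arch: str                          # "arm64" or "amd64"
    image_arm_ready: bool              # result of the registry manifest check
    exception: Optional[str] = None    # e.g. "deprecated", "degraded", "special-instance"
    jira_ticket: Optional[str] = None  # hypothetical ticket key, if tracked


def migration_kpis(workloads: list[Workload]) -> dict[str, float]:
    total = sum(w.cores for w in workloads)

    def core_share(pred: Callable[[Workload], bool]) -> float:
        """Fraction of all cores belonging to workloads matching pred."""
        return sum(w.cores for w in workloads if pred(w)) / total

    in_scope = [w for w in workloads if w.exception is None]
    in_scope_cores = sum(w.cores for w in in_scope)
    in_scope_arm = sum(w.cores for w in in_scope if w.arch == "arm64")
    services = {w.service for w in workloads}
    tracked = {w.service for w in workloads if w.jira_ticket}
    return {
        "arm_adoption_rate": core_share(lambda w: w.arch == "arm64"),
        "arm_readiness": core_share(lambda w: w.image_arm_ready),
        "exception_share": core_share(lambda w: w.exception is not None),
        # Assumption: progress measured against the addressable (non-exception) scope.
        "in_scope_adoption_rate": in_scope_arm / in_scope_cores if in_scope_cores else 0.0,
        "jira_coverage": len(tracked) / len(services),
    }


fleet = [
    Workload("api", 60, "arm64", True, jira_ticket="MIG-1"),
    Workload("db-proxy", 30, "amd64", True, jira_ticket="MIG-2"),
    Workload("legacy-cron", 10, "amd64", False, exception="deprecated"),
]

if __name__ == "__main__":
    for name, value in migration_kpis(fleet).items():
        print(f"{name}: {value:.2f}")
```

Weighting by cores rather than counting services matches the primary KPI's focus on CPU share, so a few large services move the numbers more than many small ones.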

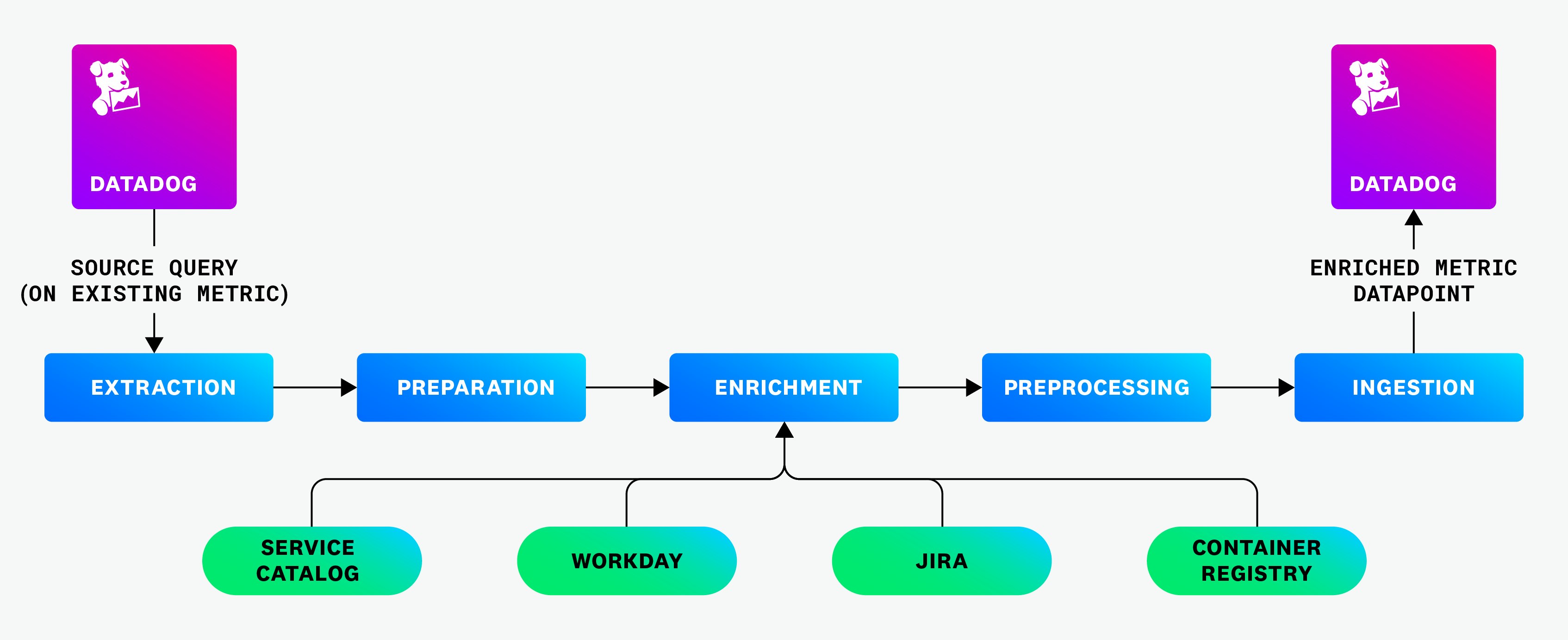

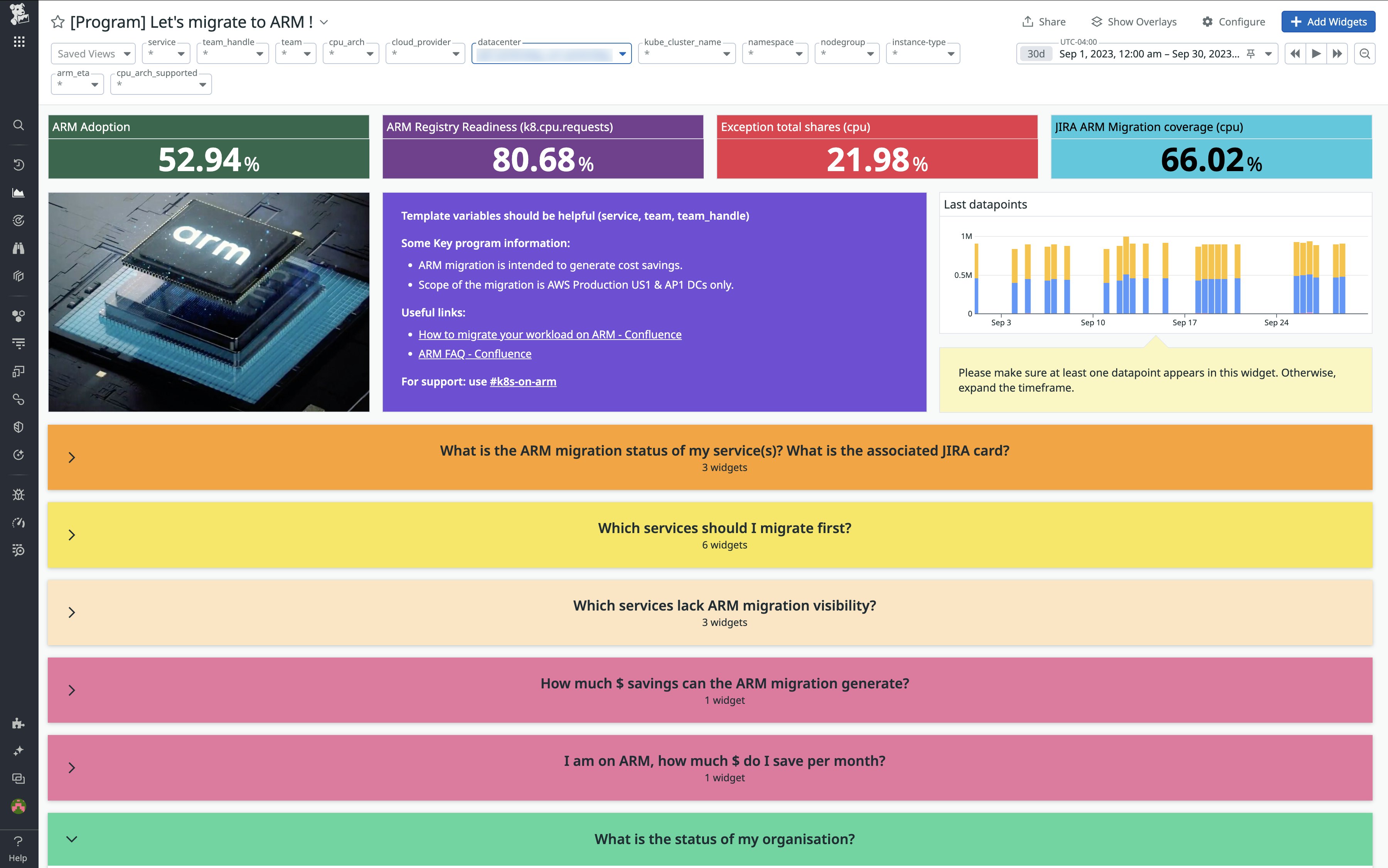

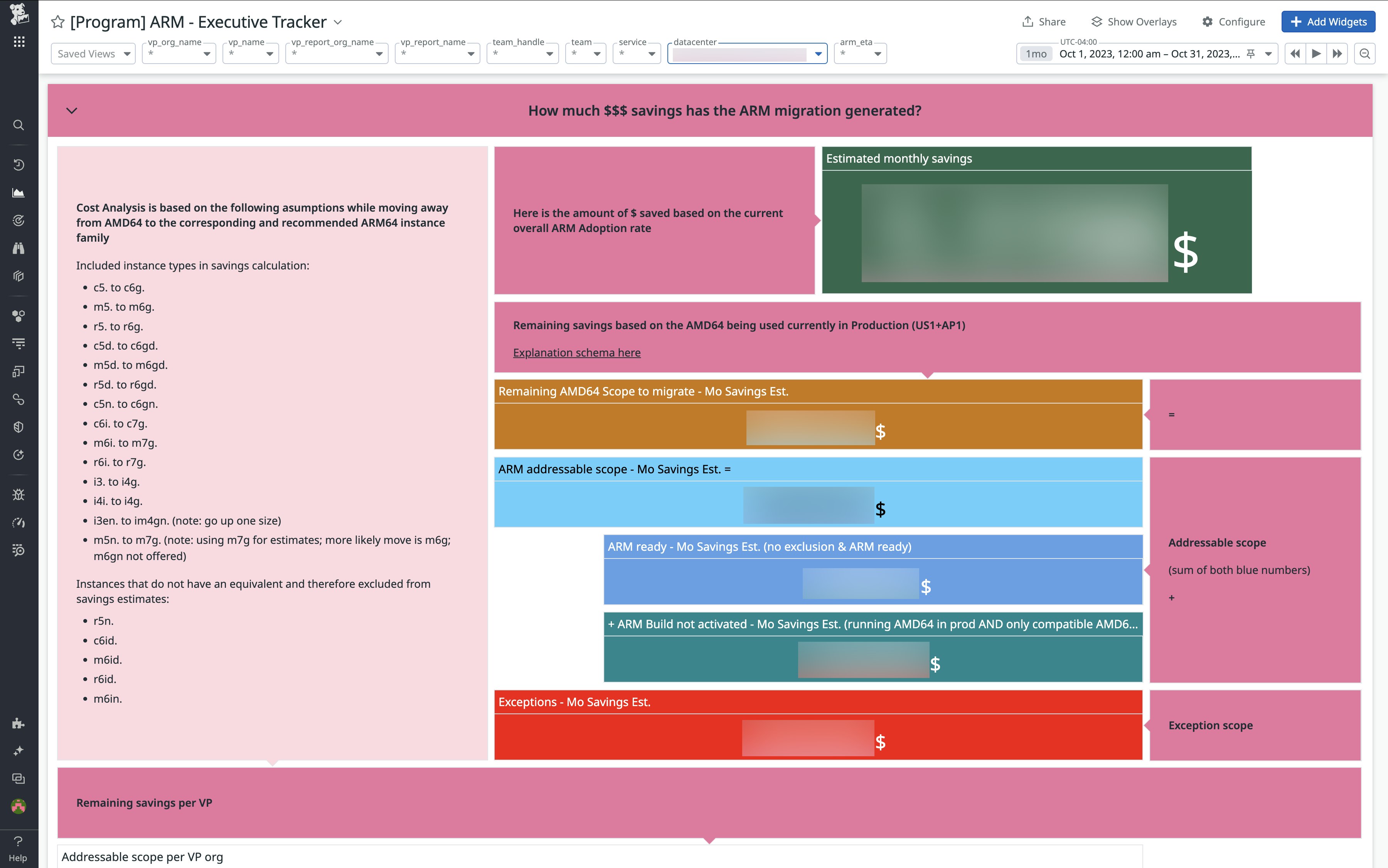

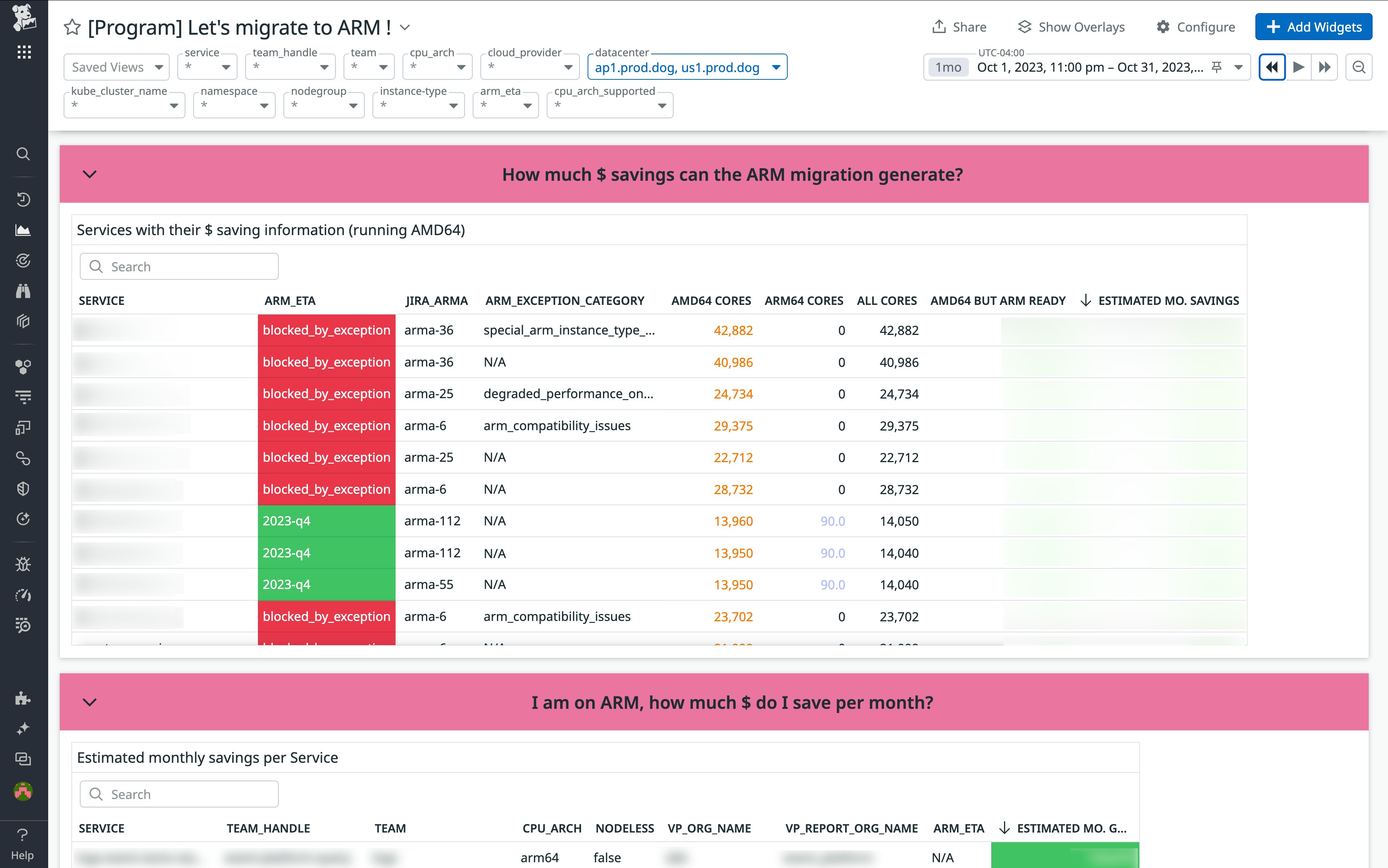

With these KPIs defined, we had the foundations in place for tracking the migration. These KPIs became the basis for two primary dashboards: one designed for engineers and another for executives. These dashboards were built around metrics that were enriched with information pulled from the Datadog Service Catalog, Workday, Jira, and our container registry to provide the right level of information to different types of stakeholders.
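The enrichment step can be pictured as a join between a per-service metric and lookup tables exported from other systems. In this hypothetical sketch, the service names, team names, and Jira statuses are made up, and the per-team rollup is an unweighted mean for simplicity (a real rollup would more likely weight by cores).

```python
from collections import defaultdict
from statistics import mean

# Hypothetical inputs: a per-service metric plus lookups from other systems.
arm_share_by_service = {"api": 1.0, "db-proxy": 0.0, "ingest": 0.5}
service_catalog = {"api": "team-web", "db-proxy": "team-storage", "ingest": "team-web"}
jira_status = {"db-proxy": "In Progress"}


def enrich(metric: dict, catalog: dict, jira: dict) -> list[dict]:
    """Attach the owning team and Jira status to each service's Arm share."""
    return [
        {
            "service": service,
            "arm_share": share,
            "team": catalog.get(service, "unowned"),
            "jira_status": jira.get(service, "untracked"),
        }
        for service, share in sorted(metric.items())
    ]


def arm_share_by_team(rows: list[dict]) -> dict[str, float]:
    """Unweighted mean Arm share per owning team."""
    by_team = defaultdict(list)
    for row in rows:
        by_team[row["team"]].append(row["arm_share"])
    return {team: mean(shares) for team, shares in by_team.items()}


if __name__ == "__main__":
    rows = enrich(arm_share_by_service, service_catalog, jira_status)
    print(arm_share_by_team(rows))
```

Keeping ownership data out of the metric itself and joining it at read time means reorganizations only require updating the catalog, not re-tagging metrics.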

The engineering dashboard provided a high-level overview of the migration’s status. As shown below, the four primary KPIs for the migration were tracked at the top:

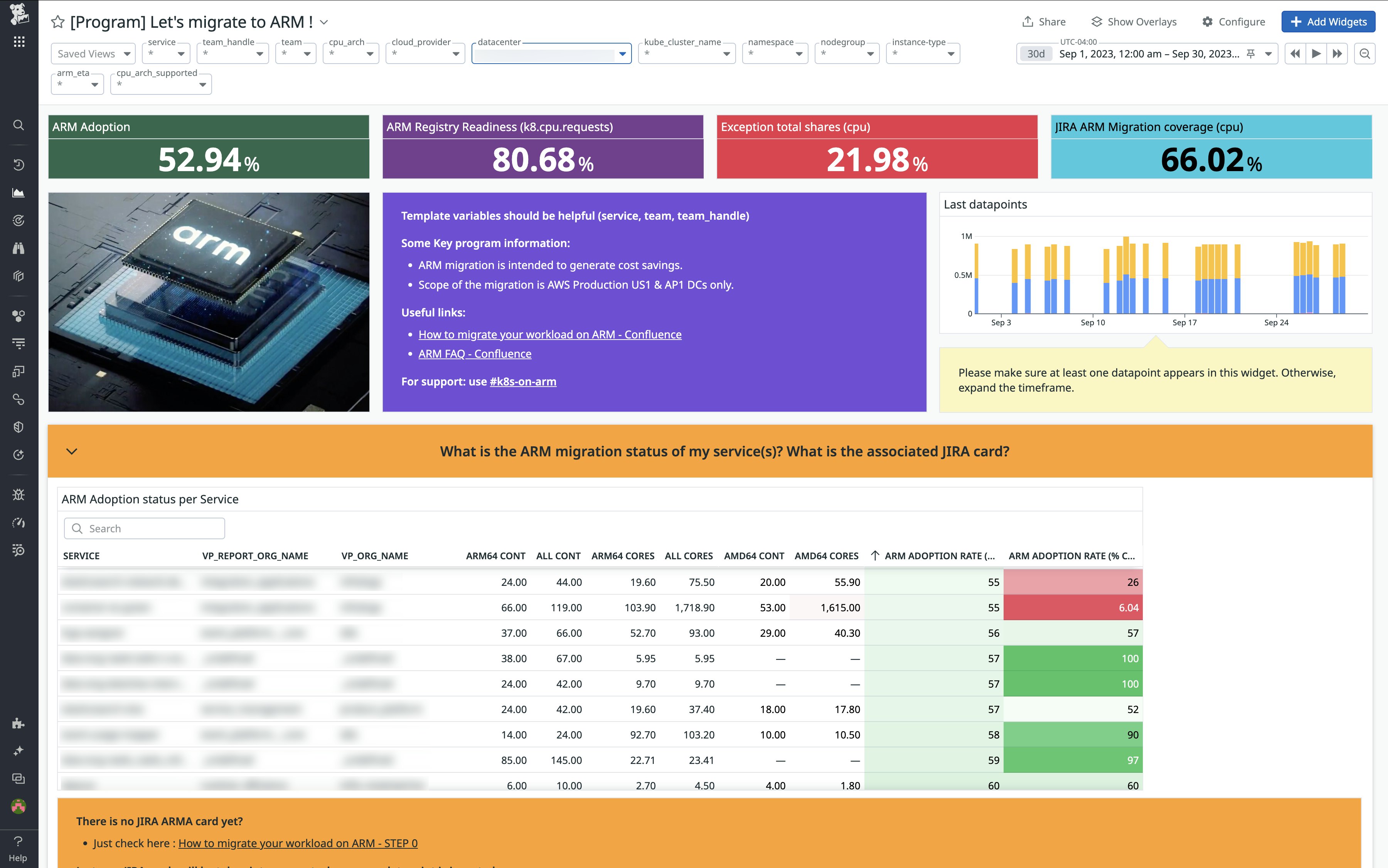

This dashboard also enabled service owners to check on the migration status of their individual services:

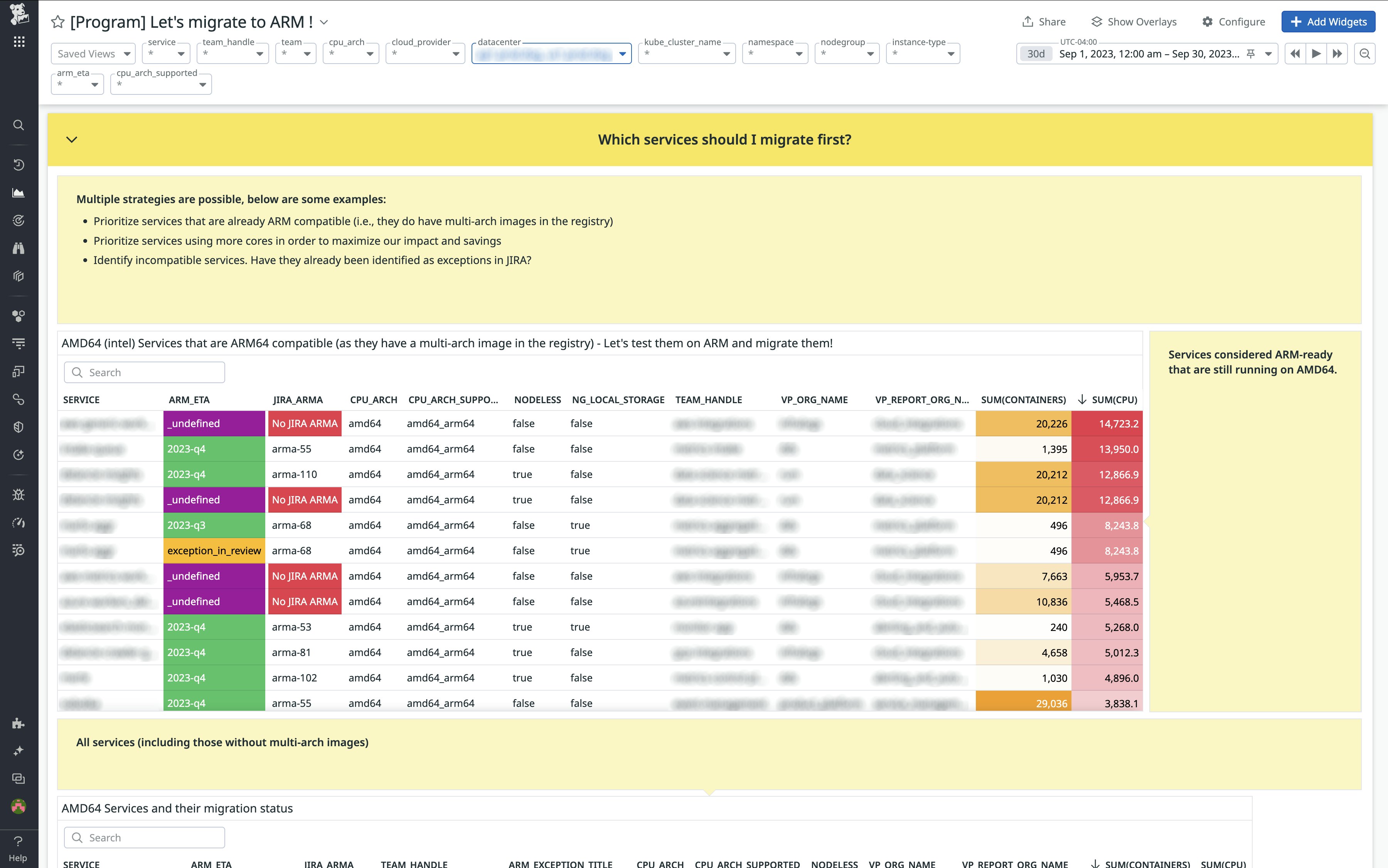

It also helped them identify which services to prioritize for the migration.

These dashboards also played an important role in helping us measure results as the migration progressed. Next, we’ll look at how we used Datadog Cloud Cost Management to put precise numbers on the cost savings accomplished in the migration.

Pinpointing our savings

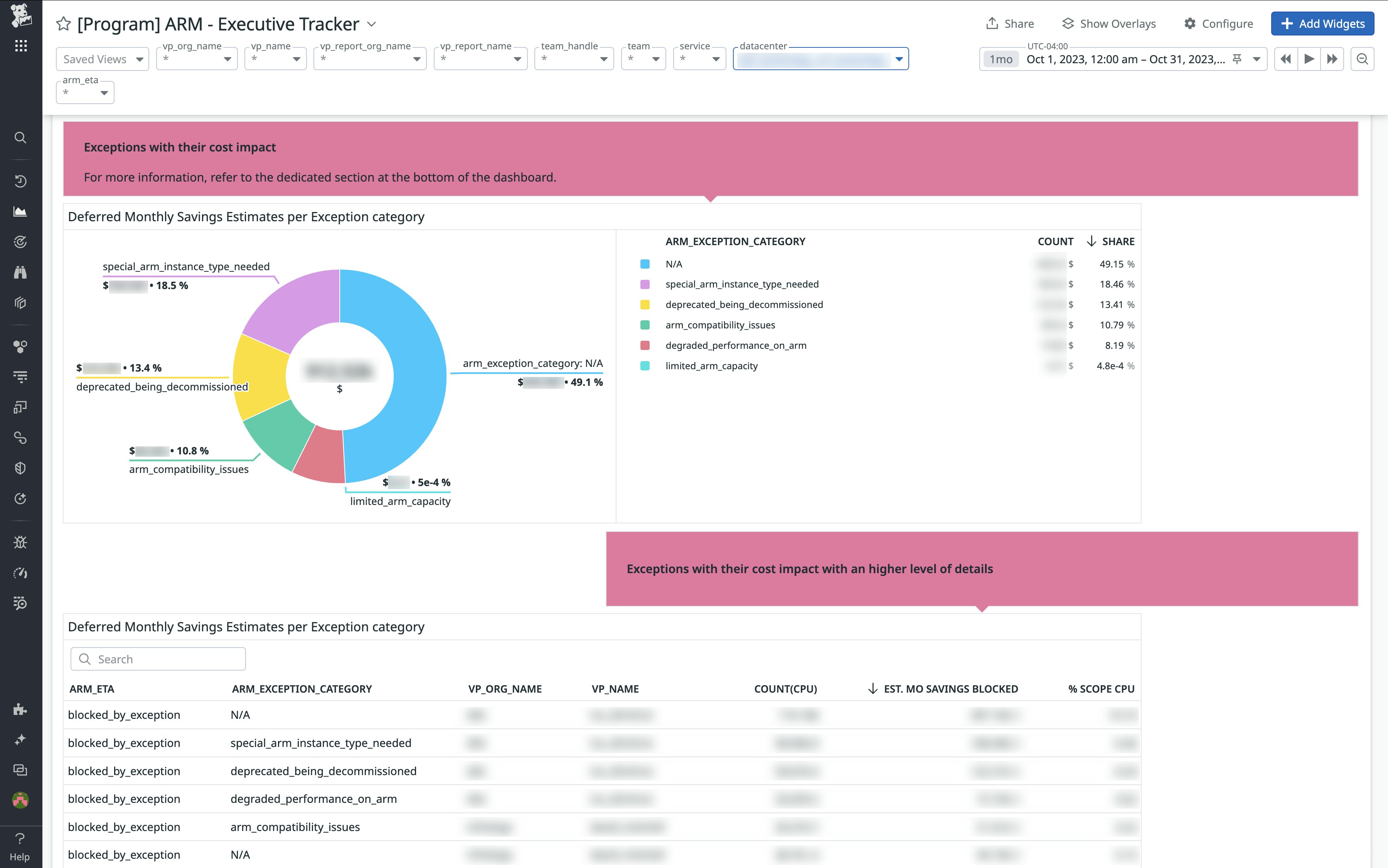

Cloud Cost Management played a key role at every stage of the migration by allowing us to quantify the actual and potential savings of migrating our services to Arm. Our executive dashboard (shown above) broke down the savings associated with the addressable scope of the migration and those associated with services that had been designated as exceptions.

This dashboard also broke these numbers down further, for example by type of exception.

We also used Cloud Cost Management to give service owners and engineers visibility into the actual and potential savings associated with their services on our engineering dashboard. We calculated conservative savings estimates for migrating each service by combining the reference table mentioned earlier in this post with a custom metric tracking the number of CPU requests per Kubernetes workload.
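A hypothetical sketch of that per-workload estimate: multiply each workload's requested cores by an x86 cost per core-hour and the percentage saving from a reference table, then sort to get a priority list. The savings percentages, per-core costs, workload names, and the 730-hour month are illustrative assumptions, not Datadog's figures.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation: 24 * 365 / 12

# Hypothetical reference-table outputs, keyed by the workload's current x86 instance type.
SAVINGS_PCT = {"m5.2xlarge": 20.0, "c5.4xlarge": 15.0}
X86_COST_PER_CORE_HOUR = {"m5.2xlarge": 0.05, "c5.4xlarge": 0.04375}


@dataclass
class K8sWorkload:
    name: str
    instance_type: str        # x86 type the workload currently runs on
    cpu_request_cores: float  # value of the custom CPU-requests metric


def monthly_savings(w: K8sWorkload) -> float:
    """Estimated USD saved per month if this workload moved to Arm."""
    return (
        w.cpu_request_cores
        * HOURS_PER_MONTH
        * X86_COST_PER_CORE_HOUR[w.instance_type]
        * SAVINGS_PCT[w.instance_type]
        / 100
    )


def prioritize(workloads: list[K8sWorkload]) -> list[K8sWorkload]:
    """Order workloads by estimated savings, largest first."""
    return sorted(workloads, key=monthly_savings, reverse=True)


workloads = [
    K8sWorkload("api", "m5.2xlarge", 32),
    K8sWorkload("batch", "c5.4xlarge", 64),
]

if __name__ == "__main__":
    for w in prioritize(workloads):
        print(f"{w.name}: ~${monthly_savings(w):,.2f}/month")
```

Using CPU requests rather than observed usage keeps the estimate tied to what the scheduler actually reserves, which is what drives node count and therefore cost.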

These estimates were key in motivating engineering teams and helping them set priorities for the migration. They enabled important decision-making throughout the course of the migration, such as weighing the potential savings associated with services that had been designated out of scope against the overhead associated with migrating them. Alongside our other KPIs, this visibility was integral to the success of the migration.

In the end, the scope of the migration encompassed roughly 70 percent of our AWS workloads—totaling around 2000 separate services—which we succeeded in migrating to Arm by the end of 2023. Datadog Cloud Cost Management and Service Catalog played critical roles in this effort, providing indispensable visibility to both engineers and executives that enabled us to set priorities and stay on track at scale.

Within Arm’s reach: Passing on our savings and fortifying our stack

Migrating our Kubernetes fleet on AWS to Arm has had major benefits for Datadog. The migration yielded significant savings (a 10 percent reduction in our AWS bill), allowing us to keep costs constant as we’ve added a wealth of new features. What’s more, by giving us the ability to run the majority of our stack on different architectures, the migration provided important flexibility and improved the durability of our systems. Since we can now use x86 and Arm infrastructure interchangeably in many cases, our options for failover and disaster recovery have multiplied. And, as Arm’s footprint continues to grow, the migration has opened the door to new opportunities for going multi-architecture with other cloud providers.

You can learn more about Datadog Cloud Cost Management, using Datadog with Arm, and much more in our other blog posts.